Federated Learning with Flexible Architectures

Paper and Code

Jun 14, 2024

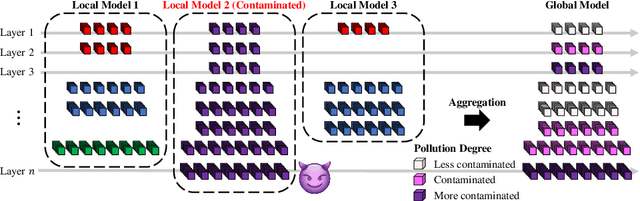

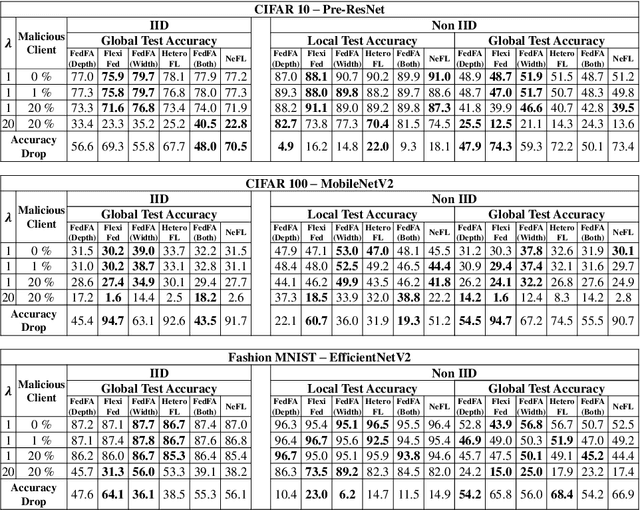

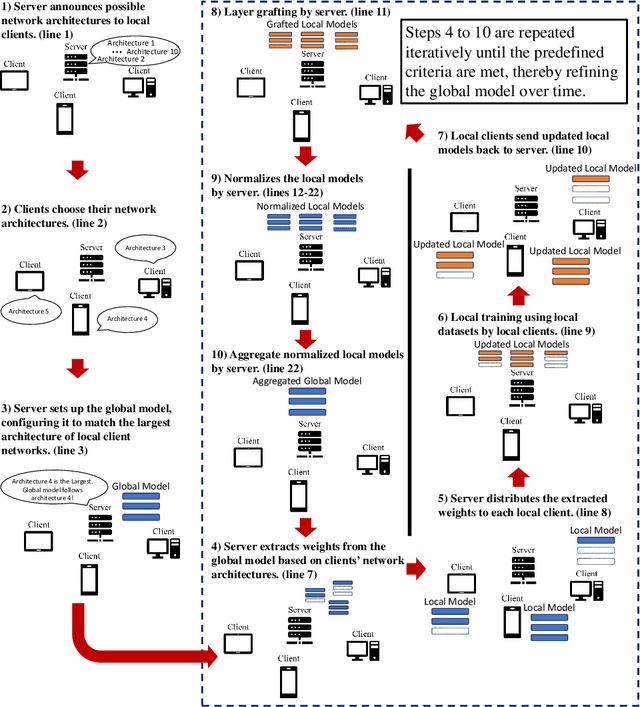

Traditional federated learning (FL) methods have limited support for clients with varying computational and communication abilities, leading to inefficiencies and potential inaccuracies in model training. This limitation hinders the widespread adoption of FL in diverse and resource-constrained environments, such as those with client devices ranging from powerful servers to mobile devices. To address this need, this paper introduces Federated Learning with Flexible Architectures (FedFA), an FL training algorithm that allows clients to train models of different widths and depths. Each client can select a network architecture suitable for its resources, with shallower and thinner networks requiring fewer computing resources for training. Unlike prior work in this area, FedFA incorporates the layer grafting technique to align clients' local architectures with the largest network architecture in the FL system during model aggregation. Layer grafting ensures that all client contributions are uniformly integrated into the global model, thereby minimizing the risk of any individual client's data skewing the model's parameters disproportionately and introducing security benefits. Moreover, FedFA introduces the scalable aggregation method to manage scale variations in weights among different network architectures. Experimentally, FedFA outperforms previous width and depth flexible aggregation strategies. Furthermore, FedFA demonstrates increased robustness against performance degradation in backdoor attack scenarios compared to earlier strategies.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge