Feature Selection on Noisy Twitter Short Text Messages for Language Identification


Jul 11, 2020

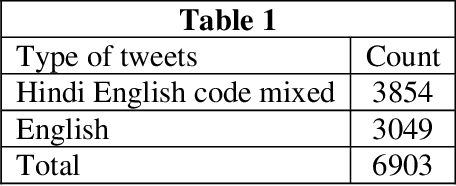

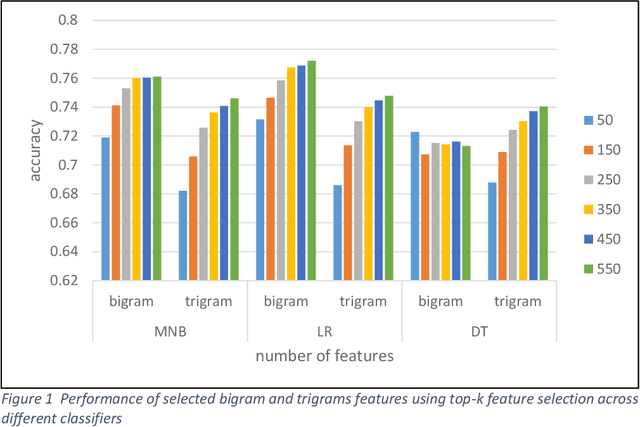

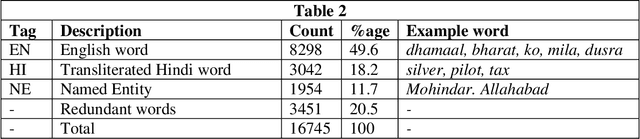

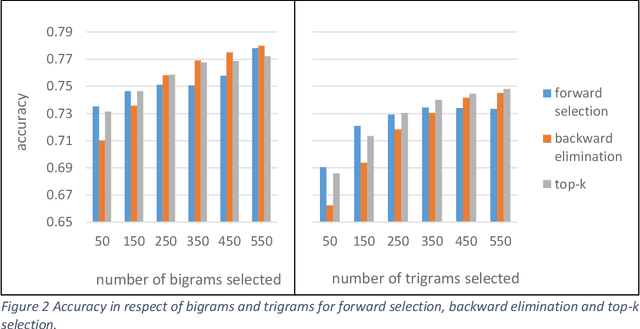

Written language identification typically involves detecting the languages present in a sample of text. A sequence of text may not belong to a single language; it may instead be a mixture of text written in several languages. Social media platforms generate this kind of text in large volumes because of their flexible and user-friendly environment. Such text contains a very large number of features, which are essential for building statistical, probabilistic, and other kinds of language models. These features include informative ones as well as irrelevant and redundant ones, and they affect the performance of the learning model in different ways. Feature selection methods are therefore important for choosing the features that are most relevant to an efficient model. In this article, we consider the Hindi-English language identification task, since Hindi and English are two of the most widely spoken languages in India. We apply different feature selection algorithms across various learning algorithms in order to analyze how both the algorithm and the number of features affect performance on the task. The methodology focuses on word-level language identification using a novel dataset of 6903 tweets extracted from Twitter. Various n-gram profiles are examined with different feature selection algorithms over many classifiers. Finally, an exhaustive comparative analysis of all the experiments conducted for the task is presented.
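To make the idea of selecting n-gram features for word-level identification concrete, here is a minimal sketch in Python. It is not the authors' pipeline: the abstract does not name the selection algorithms used, so the chi-square statistic is assumed here as one common choice, and the function names (`char_ngrams`, `chi_square_scores`, `select_top_k`), the `_` padding symbol, and the eight toy words are all hypothetical illustrations rather than data from the 6903-tweet dataset.

```python
from collections import Counter


def char_ngrams(word, n_values=(1, 2, 3)):
    """Return the set of character n-grams of a lowercased word padded with '_'."""
    padded = f"_{word.lower()}_"
    return {padded[i:i + n] for n in n_values for i in range(len(padded) - n + 1)}


def chi_square_scores(words, labels, target):
    """Score each n-gram with the chi-square statistic of its 2x2
    (n-gram present / absent) x (label is target / other) contingency table."""
    total = len(words)
    n_target = sum(1 for label in labels if label == target)
    present = Counter()         # number of words containing the n-gram
    present_target = Counter()  # of those, number labeled `target`
    for word, label in zip(words, labels):
        for gram in char_ngrams(word):
            present[gram] += 1
            if label == target:
                present_target[gram] += 1

    scores = {}
    for gram, n_present in present.items():
        a = present_target[gram]   # present, target
        b = n_present - a          # present, other
        c = n_target - a           # absent, target
        d = total - n_present - c  # absent, other
        denom = (a + b) * (c + d) * (a + c) * (b + d)
        # An n-gram that occurs in every word carries no information: score 0.
        scores[gram] = total * (a * d - b * c) ** 2 / denom if denom else 0.0
    return scores


def select_top_k(scores, k):
    """Keep the k highest-scoring n-grams, breaking ties alphabetically."""
    return sorted(scores, key=lambda gram: (-scores[gram], gram))[:k]


# Hypothetical toy data: four English words and four romanized Hindi words.
words = ["the", "this", "that", "there", "hai", "nahi", "kya", "mera"]
labels = ["en", "en", "en", "en", "hi", "hi", "hi", "hi"]

scores = chi_square_scores(words, labels, target="en")
top = select_top_k(scores, 4)
print(top)
```

On this toy sample the highest-ranked n-grams are those that occur in every English word and in no Hindi word. In a real experiment, the selected n-grams would become the feature vocabulary passed to a classifier, and varying `k` is what allows the effect of the number of features to be measured.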
