Feature Density Estimation for Out-of-Distribution Detection via Normalizing Flows

Paper and Code

Feb 09, 2024

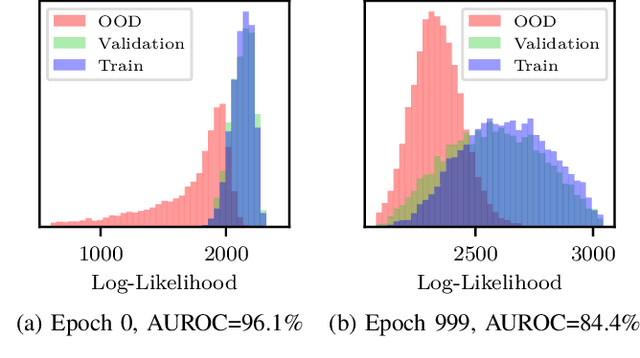

Out-of-distribution (OOD) detection is a critical task for safe deployment of learning systems in the open world setting. In this work, we investigate the use of feature density estimation via normalizing flows for OOD detection and present a fully unsupervised approach which requires no exposure to OOD data, avoiding researcher bias in OOD sample selection. This is a post-hoc method which can be applied to any pretrained model, and involves training a lightweight auxiliary normalizing flow model to perform the out-of-distribution detection via density thresholding. Experiments on OOD detection in image classification show strong results for far-OOD data detection with only a single epoch of flow training, including 98.2% AUROC for ImageNet-1k vs. Textures, which exceeds the state of the art by 7.8%. We additionally explore the connection between the feature space distribution of the pretrained model and the performance of our method. Finally, we provide insights into training pitfalls that have plagued normalizing flows for use in OOD detection.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge