Feature-based visual odometry prior for real-time semi-dense stereo SLAM

Oct 17, 2018

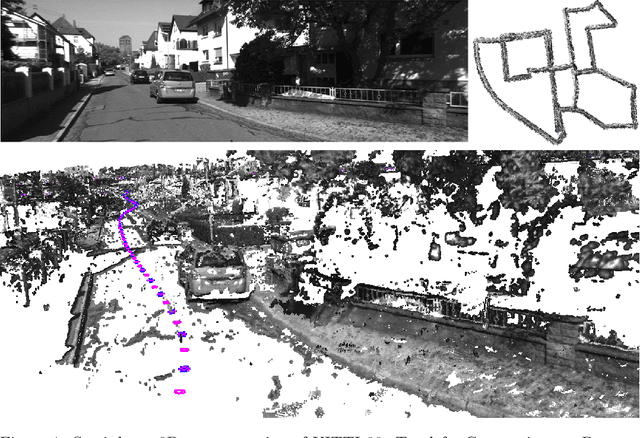

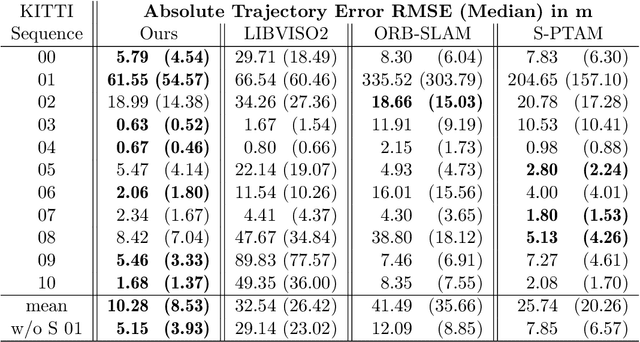

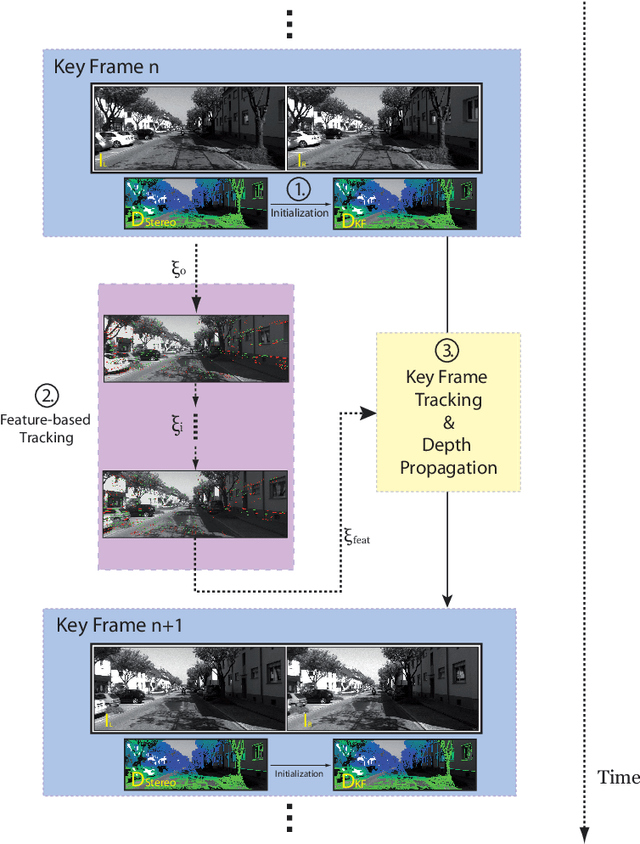

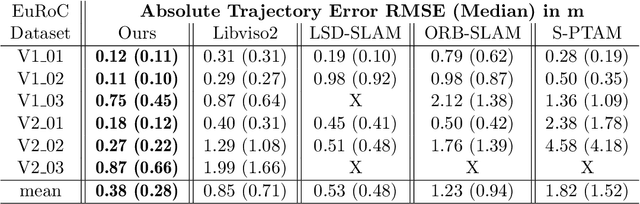

Robust and fast motion estimation and mapping are key prerequisites for the autonomous operation of mobile robots. Performing this task with only a stereo pair of video cameras is highly demanding and involves conflicting objectives: on the one hand, the motion has to be tracked quickly and reliably; on the other hand, high-level functions such as navigation and obstacle avoidance depend crucially on a complete and accurate representation of the environment. In this work, we propose a two-layer approach for visual odometry and SLAM with stereo cameras that runs in real time and combines feature-based matching with semi-dense direct image alignment. Our method initializes the computationally expensive semi-dense depth estimation from motion that is tracked by a fast but robust keypoint-based method. Experiments on public benchmark and proprietary datasets show that our approach is faster than state-of-the-art methods without losing accuracy and yields comparable map-building capabilities. Moreover, our approach is shown to handle large inter-frame motion and illumination changes much more robustly than its direct counterparts.
