Fast quantum learning with statistical guarantees

Jan 28, 2020

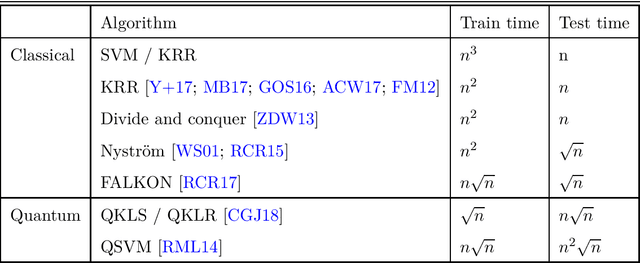

Within the framework of statistical learning theory, it is possible to bound the minimum number of samples required by a learner to reach a target accuracy. We show that if the bound on the accuracy is taken into account, quantum machine learning algorithms for which statistical guarantees are available cannot achieve polylogarithmic runtimes in the input dimension. This calls for a careful re-evaluation of quantum speedups for learning problems, even in cases where quantum access to the data is naturally available.

* 11 pages
