Fast Model-Selection through Adapting Design of Experiments Maximizing Information Gain

Paper and Code

Oct 23, 2018

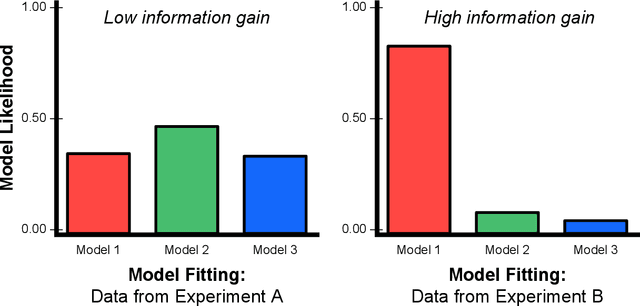

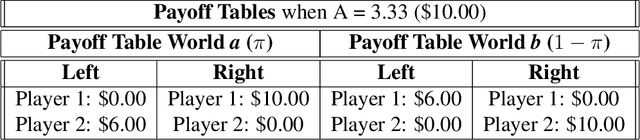

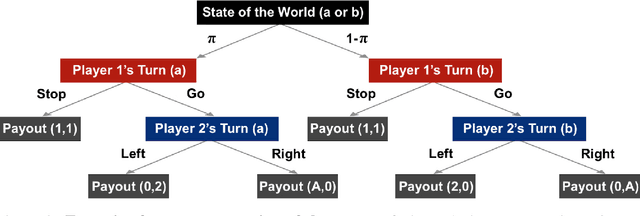

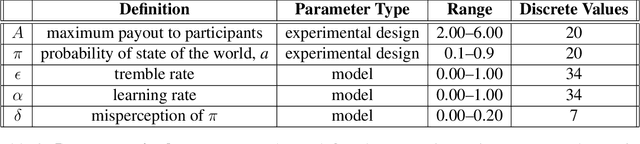

To perform model selection efficiently, we must run informative experiments. Here, we extend a seminal method for designing Bayesian optimal experiments that maximize the information gained from the collected data. We introduce two computational improvements that make the procedure tractable: a search algorithm from artificial intelligence and a sampling procedure that shrinks the space of possible experiments to evaluate. We collected data for five different experimental designs of a simple imperfect-information game and show that experiments optimized for information gain make model selection possible (and cheaper). We compare the ability of the optimal experimental design to discriminate among competing models against that of the experimental designs chosen by a "wisdom of experts" prediction experiment. We find that a simple reinforcement learning model best explains human decision-making and that subject behavior is not adequately described by Bayesian Nash equilibrium. Our procedure is general and can be applied iteratively to lab, field, and online experiments.
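
To make the idea of choosing an experiment by information gain concrete, here is a minimal sketch, not the authors' implementation. It assumes a finite set of candidate designs, a finite set of outcomes, and that each competing model assigns a probability to every outcome under every design. The names `expected_information_gain` and `best_design`, the toy designs `"A"` and `"B"`, and all probabilities are hypothetical. The sketch scores each design by the mutual information between the model indicator and the outcome, then picks the best design by exhaustive search; the paper's search algorithm and sampling procedure are not reproduced here.

```python
import math


def expected_information_gain(prior, likelihoods):
    """Expected information gain (in bits) about which model is true.

    prior:       list of prior model probabilities P(m), summing to 1.
    likelihoods: likelihoods[m][y] = P(outcome y | model m) for one design.
    """
    n_outcomes = len(likelihoods[0])
    # Prior predictive distribution: P(y) = sum_m P(m) * P(y | m)
    marginal = [
        sum(p * lik[y] for p, lik in zip(prior, likelihoods))
        for y in range(n_outcomes)
    ]
    gain = 0.0
    for p, lik in zip(prior, likelihoods):
        for y in range(n_outcomes):
            # Terms with zero probability contribute nothing to the sum.
            if p > 0 and lik[y] > 0:
                gain += p * lik[y] * math.log2(lik[y] / marginal[y])
    return gain


def best_design(prior, designs):
    """Exhaustive search: return the design name with the highest gain."""
    return max(
        designs,
        key=lambda name: expected_information_gain(prior, designs[name]),
    )


# Hypothetical toy problem: two models, two designs, binary outcome.
prior = [0.5, 0.5]
designs = {
    # Both models predict the same outcome distribution: uninformative.
    "A": [[0.5, 0.5], [0.5, 0.5]],
    # The models disagree sharply: informative.
    "B": [[0.9, 0.1], [0.1, 0.9]],
}

for name, lik in designs.items():
    print(name, round(expected_information_gain(prior, lik), 3))
print("chosen design:", best_design(prior, designs))
```

In this toy setup, design `"A"` cannot separate the two models because they predict identical outcome distributions, while design `"B"` can. Applied iteratively, the posterior over models after each experiment would become the prior for choosing the next design.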
