Fast Generalized Conditional Gradient Method with Applications to Matrix Recovery Problems


Feb 15, 2018

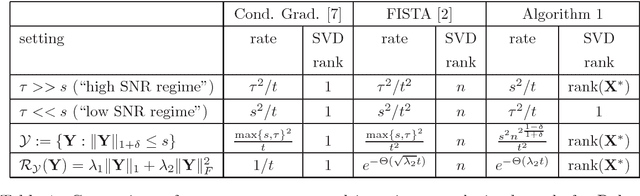

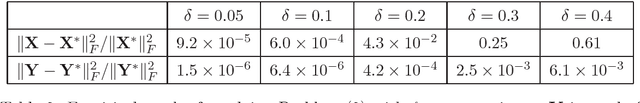

Motivated by matrix recovery problems such as Robust Principal Component Analysis, we consider a general optimization problem of minimizing a smooth and strongly convex loss applied to the sum of two blocks of variables, where each block is constrained or regularized individually. We present a novel Generalized Conditional Gradient method that leverages the special structure of the problem to obtain faster convergence rates than those attainable via standard methods, under a variety of interesting assumptions. In particular, our method is appealing for matrix problems in which one of the blocks corresponds to a low-rank matrix and in which it is highly desirable to avoid the prohibitive full-rank singular value decompositions required by most standard methods. Importantly, while our initial motivation comes from problems that originated in statistics, our analysis does not impose any statistical assumptions on the data.
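The problem class described in the abstract can be written out as a short formula. This is only a sketch: the symbols $f$, $X$, $Y$, $\mathcal{R}_X$, $\mathcal{R}_Y$, $M$, $\tau$ and $s$ are notation assumed here for illustration and are not taken from the paper, and the Robust PCA instance shown is one common formulation, not necessarily the one the authors analyze.

```latex
% General two-block problem: f is smooth and strongly convex, and each of
% R_X, R_Y is either a convex regularizer or the indicator function of a
% convex constraint set (assumed notation).
\min_{X,\,Y} \; f(X + Y) + \mathcal{R}_X(X) + \mathcal{R}_Y(Y)

% One illustrative Robust PCA instance: M is the observed matrix,
% X the low-rank block, Y the sparse block (tau and s are assumed radii).
\min_{X,\,Y} \; \tfrac{1}{2}\,\lVert X + Y - M \rVert_F^2
\quad \text{s.t.} \quad \lVert X \rVert_* \le \tau, \qquad \lVert Y \rVert_1 \le s

% Standard conditional gradient linear step over the nuclear norm ball:
% for a gradient matrix G with leading singular pair (u_1, v_1),
\operatorname*{arg\,min}_{\lVert V \rVert_* \le \tau} \langle V, G \rangle
\;=\; -\tau\, u_1 v_1^{\top}
```

The last line is the reason conditional gradient type methods are attractive for the low-rank block: the linear step needs only the leading singular vector pair of $G$, which is a rank-one computation, whereas projection or proximal steps onto the nuclear norm ball generally require a full singular value decomposition.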
