Fast and Generalized Adaptation for Few-Shot Learning


Nov 25, 2019

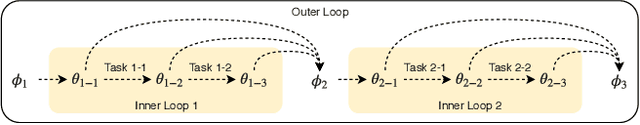

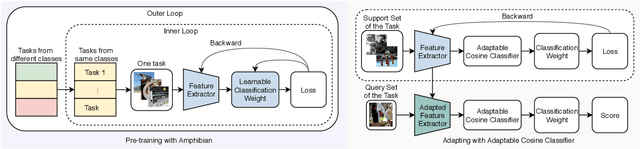

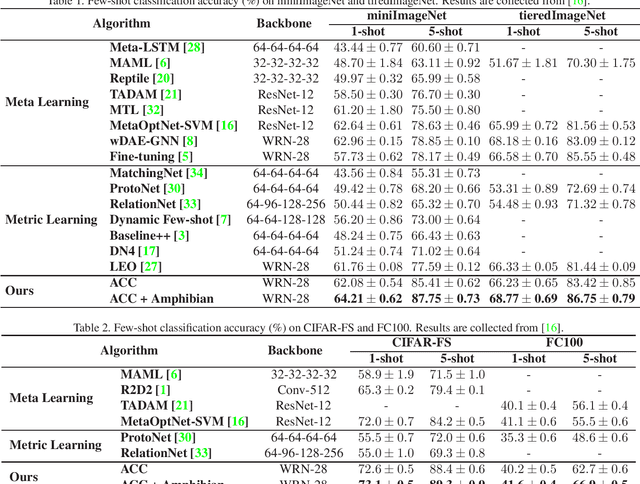

The ability to generalize quickly to novel tasks from a few examples is critical in few-shot learning. However, deep learning models suffer severely from overfitting in the extreme low-data regime. In this paper, we propose the Adaptable Cosine Classifier (ACC) and Amphibian to achieve fast and generalized adaptation for few-shot learning. ACC enables flexible retraining of a deep network on small data without overfitting. Amphibian learns a good weight initialization in a region of the parameter space where the optimal solutions for tasks of the same class cluster tightly, which enables rapid adaptation to novel tasks with few gradient updates. We conduct comprehensive experiments on four few-shot datasets and achieve state-of-the-art performance in all cases. Notably, we achieve an accuracy of 87.75% on 5-shot miniImageNet, which outperforms existing methods by approximately 10%. We also conduct experiments on cross-domain few-shot tasks and obtain the best results.
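The abstract does not describe how ACC is built, so the following is only general background: a minimal sketch of a plain cosine classifier for a few-shot episode, which scores a query by its scaled cosine similarity to one prototype per class. It is not the paper's ACC. The function names, the `scale` value of 10.0, and the toy 2-D embeddings are all assumptions made for illustration.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def class_prototypes(support):
    """Average the normalized support embeddings of each class.

    `support` maps a class label to a list of embedding vectors
    (hypothetical input format, chosen for this sketch).
    """
    prototypes = {}
    for label, vectors in support.items():
        normalized = [l2_normalize(v) for v in vectors]
        dim = len(normalized[0])
        mean = [sum(v[i] for v in normalized) / len(normalized) for i in range(dim)]
        prototypes[label] = l2_normalize(mean)
    return prototypes


def cosine_scores(query, prototypes, scale=10.0):
    """Return the scaled cosine similarity of the query to each prototype."""
    q = l2_normalize(query)
    return {
        label: scale * sum(a * b for a, b in zip(q, p))
        for label, p in prototypes.items()
    }


def predict(query, prototypes):
    """Pick the class whose prototype is most similar to the query."""
    scores = cosine_scores(query, prototypes)
    return max(scores, key=scores.get)


# Toy 2-way, 2-shot episode with hand-written 2-D "embeddings".
support = {
    "cat": [[1.0, 0.1], [0.9, 0.2]],
    "dog": [[0.1, 1.0], [0.2, 0.8]],
}
prototypes = class_prototypes(support)
prediction = predict([5.0, 1.0], prototypes)
```

Because both the query and the prototypes are normalized, the scores depend only on direction, not on the magnitude of the embeddings; the query `[5.0, 1.0]` is therefore treated the same as `[1.0, 0.2]`.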
