Fair MP-BOOST: Fair and Interpretable Minipatch Boosting

Apr 01, 2024

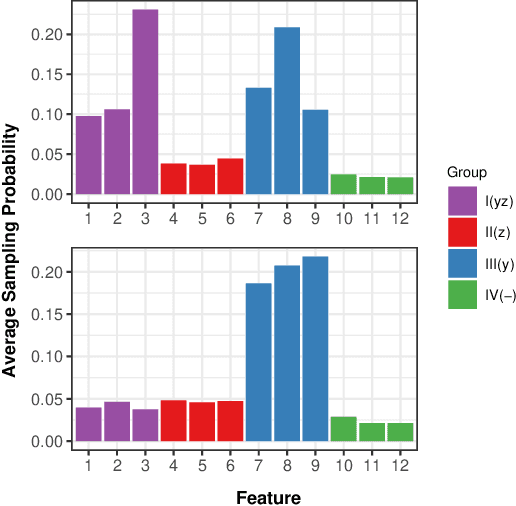

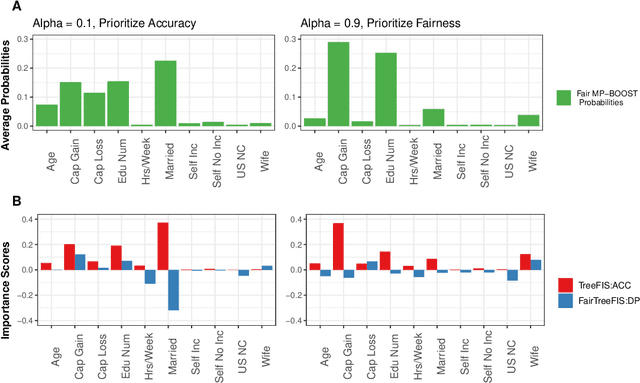

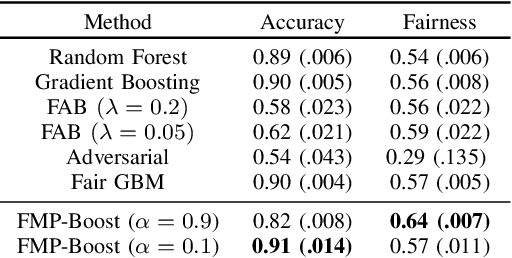

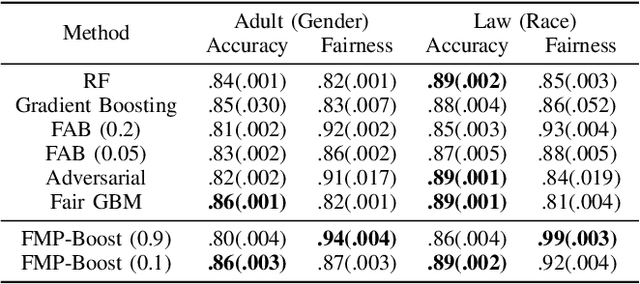

Ensemble methods, particularly boosting, are among the most effective and widely used machine learning techniques for tabular data. In this paper, we aim to retain the strong predictive power of traditional boosting methods while improving fairness and interpretability. To achieve this, we develop Fair MP-Boost, a stochastic boosting scheme that balances fairness and accuracy by adaptively learning which features and observations to sample during training. Specifically, Fair MP-Boost sequentially samples small subsets of observations and features, termed minipatches (MP), according to adaptively learned feature and observation sampling probabilities. We devise these probabilities by combining loss functions, or by combining feature importance scores, so that accuracy and fairness are addressed simultaneously. Hence, Fair MP-Boost prioritizes important and fair features, along with challenging instances, to select the most relevant minipatches for learning. The learned probability distributions also yield intrinsic interpretations of feature importance and of important observations in Fair MP-Boost. Through empirical evaluation on simulated and benchmark datasets, we showcase the interpretability, accuracy, and fairness of Fair MP-Boost.
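
The minipatch sampling step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not say how the accuracy and fairness terms are combined, so the convex mixing weight `alpha`, the helper names (`combine`, `sample_minipatch`, `weighted_sample`), and the toy score values are all hypothetical. Weighted sampling without replacement uses the standard Efraimidis-Spirakis key trick.

```python
import random


def normalize(weights):
    """Scale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def combine(acc_scores, fair_scores, alpha):
    """Assumed rule: convex mix of accuracy and fairness scores, then normalize.

    alpha = 1 uses accuracy only; alpha = 0 uses fairness only.
    """
    mixed = [alpha * a + (1 - alpha) * f for a, f in zip(acc_scores, fair_scores)]
    return normalize(mixed)


def weighted_sample(probs, k, rng):
    """Draw k distinct indices with probability increasing in probs.

    Efraimidis-Spirakis: give each item the key u ** (1 / p) and keep the
    k largest keys. Items with zero probability are never selected.
    """
    keys = [(rng.random() ** (1.0 / p), i) for i, p in enumerate(probs) if p > 0]
    keys.sort(reverse=True)
    return sorted(i for _, i in keys[:k])


def sample_minipatch(obs_probs, feat_probs, n, m, rng):
    """One minipatch: n observation indices and m feature indices."""
    rows = weighted_sample(obs_probs, n, rng)
    cols = weighted_sample(feat_probs, m, rng)
    return rows, cols


# Toy per-observation losses and per-feature scores (made-up values).
rng = random.Random(0)
acc_loss = [0.9, 0.1, 0.4, 0.6, 0.2, 0.8]
fair_loss = [0.2, 0.3, 0.9, 0.1, 0.5, 0.4]
acc_importance = [0.5, 0.3, 0.1, 0.1]
fair_importance = [0.1, 0.2, 0.4, 0.3]

obs_probs = combine(acc_loss, fair_loss, alpha=0.5)
feat_probs = combine(acc_importance, fair_importance, alpha=0.5)
rows, cols = sample_minipatch(obs_probs, feat_probs, n=3, m=2, rng=rng)
```

In a full boosting loop, a weak learner would be fit on the rows and columns of each minipatch, and the losses and importance scores would be recomputed before the next draw. After training, the final `obs_probs` and `feat_probs` could be read directly as the importance rankings the abstract refers to.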
