FAIR-FATE: Fair Federated Learning with Momentum

Paper and Code

Sep 27, 2022

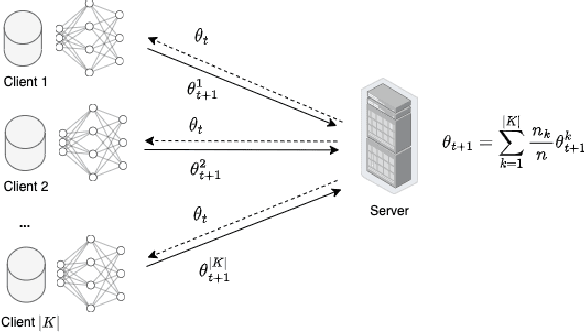

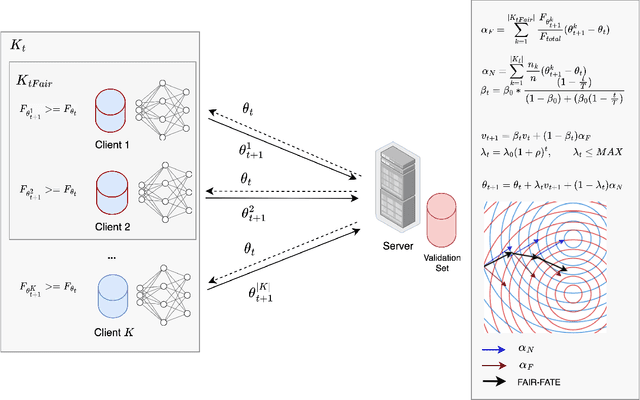

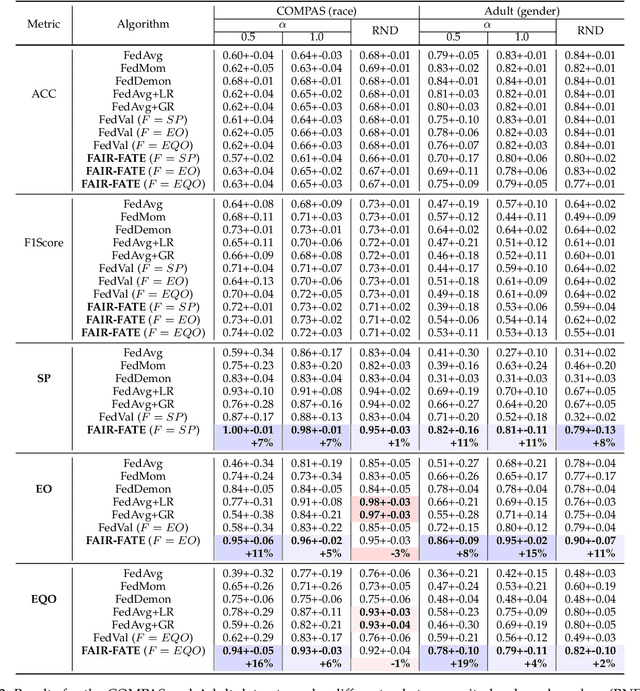

While fairness-aware machine learning algorithms have been receiving increasing attention, the focus has been on centralized machine learning, leaving decentralized methods underexplored. Federated Learning is a decentralized form of machine learning in which clients train local models and a server aggregates them to obtain a shared global model. Data heterogeneity among clients is a common characteristic of Federated Learning and may induce or exacerbate discrimination against unprivileged groups defined by sensitive attributes such as race or gender. In this work, we propose FAIR-FATE: a novel FAIR FederATEd Learning algorithm that aims to achieve group fairness while maintaining high utility. It does so through a fairness-aware aggregation method that takes the fairness of the clients into account when computing the global model. Specifically, the global model update is computed by estimating a fair model update with a Momentum term, which helps to overcome the oscillations of noisy non-fair gradients. To the best of our knowledge, this is the first approach in machine learning that aims to achieve fairness using a fair Momentum estimate. Experimental results on four real-world datasets demonstrate that FAIR-FATE significantly outperforms state-of-the-art fair Federated Learning algorithms under different levels of data heterogeneity.
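The aggregation idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `fair_momentum_round`, the rule for selecting "fair" clients (fairness score above that of the current global model), the fairness-proportional weighting, and the hyperparameters `beta` and `lam` are all assumptions made for illustration. The fairness scores are assumed to be computed elsewhere, for example from a group fairness metric evaluated on held-out data.

```python
import numpy as np

def fair_momentum_round(global_w, client_ws, client_sizes, client_fairness,
                        global_fairness, momentum, beta=0.9, lam=0.5):
    """One hypothetical server round of fairness-aware aggregation with momentum.

    All names and the exact update rule are illustrative assumptions,
    not the published FAIR-FATE algorithm.
    """
    # Per-client model updates relative to the current global model.
    updates = [w - global_w for w in client_ws]

    # Plain (FedAvg-style) update: weighted by client dataset size.
    sizes = np.asarray(client_sizes, dtype=float)
    plain = sum(s * u for s, u in zip(sizes, updates)) / sizes.sum()

    # Assumed rule: a client counts as "fair" if its model scores higher
    # on the fairness metric than the current global model.
    fair_idx = [i for i, f in enumerate(client_fairness) if f > global_fairness]
    if fair_idx:
        f = np.array([client_fairness[i] for i in fair_idx], dtype=float)
        # Fair update: fair clients weighted by their fairness score.
        fair = sum(fi * updates[i] for fi, i in zip(f, fair_idx)) / f.sum()
    else:
        fair = np.zeros_like(global_w)

    # Momentum smooths the fair update estimate across rounds.
    momentum = beta * momentum + (1.0 - beta) * fair

    # Blend the fair momentum estimate with the plain update.
    new_global = global_w + lam * momentum + (1.0 - lam) * plain
    return new_global, momentum

# Toy usage: two clients, only the first is fairer than the global model.
w0 = np.zeros(2)
clients = [np.array([1.0, 0.0]), np.array([0.0, 1.0])]
w1, v1 = fair_momentum_round(w0, clients, [1, 1], [0.9, 0.5], 0.7,
                             momentum=np.zeros(2), beta=0.5, lam=0.5)
```

In this sketch, `lam` controls the trade-off between following the smoothed fair direction and the ordinary size-weighted average, while `beta` controls how strongly past fair updates damp round-to-round oscillation.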
