FaceCloak: Learning to Protect Face Templates

Paper and Code

Apr 08, 2025

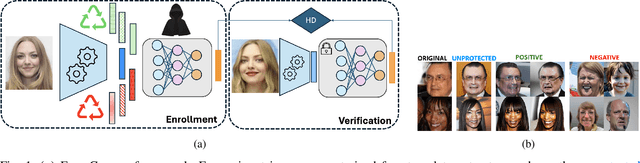

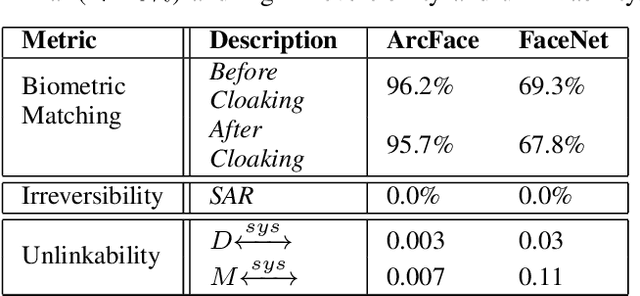

Generative models can reconstruct face images from encoded representations (templates) bearing remarkable likeness to the original face raising security and privacy concerns. We present FaceCloak, a neural network framework that protects face templates by generating smart, renewable binary cloaks. Our method proactively thwarts inversion attacks by cloaking face templates with unique disruptors synthesized from a single face template on the fly while provably retaining biometric utility and unlinkability. Our cloaked templates can suppress sensitive attributes while generalizing to novel feature extraction schemes and outperforms leading baselines in terms of biometric matching and resiliency to reconstruction attacks. FaceCloak-based matching is extremely fast (inference time cost=0.28ms) and light-weight (0.57MB).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge