Extensible Logging and Empirical Attainment Function for IOHexperimenter


Sep 29, 2021

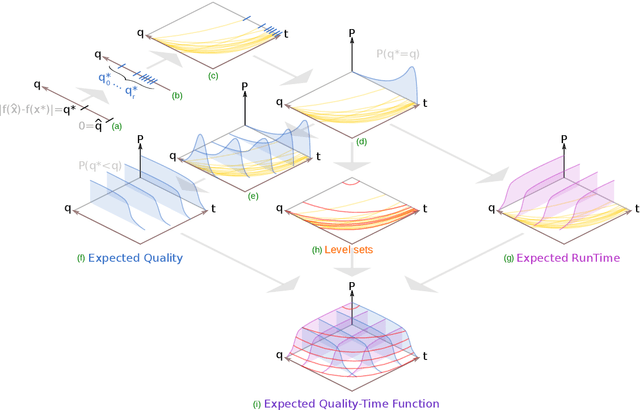

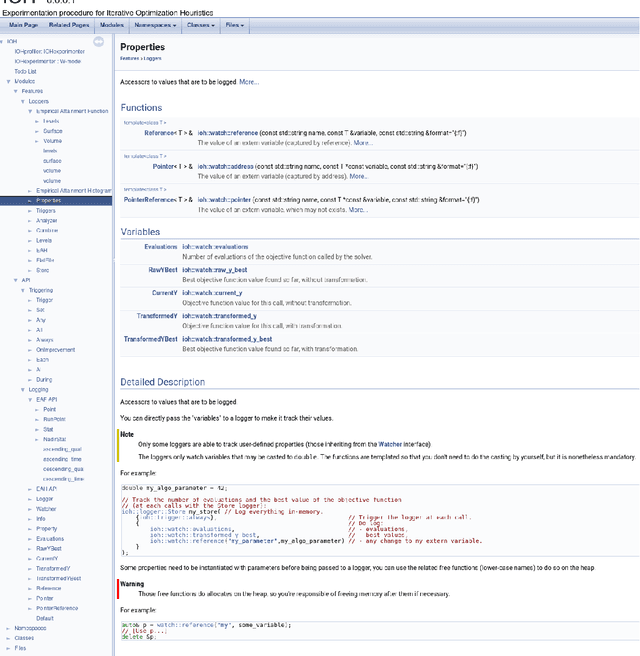

Benchmarking platform software is key to enabling large-scale, landscape-aware, per-instance algorithm selection. IOHexperimenter provides a large set of synthetic problems, a logging system, and a fast implementation. In this work, we refactor IOHexperimenter's logging system to make it more extensible and modular. Using this new system, we implement a new logger that computes performance metrics of an algorithm across a benchmark. The logger computes the most generic view of the performance of an anytime stochastic heuristic, in the form of the Empirical Attainment Function (EAF). We also provide some common statistics on the EAF and on its discrete counterpart, the Empirical Attainment Histogram. Our work has since been merged into the IOHexperimenter codebase.
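To make the EAF idea concrete, here is a minimal sketch in plain Python. It does not use IOHexperimenter's API. It assumes minimization and a hypothetical run format (a list of `(evaluations, best_so_far_value)` pairs), and the names `attained`, `eaf`, and `attainment_histogram` are illustrative only. Under these assumptions, the EAF at a given budget and quality target is the fraction of runs that reached the target within the budget, and evaluating it on a grid gives a discrete, histogram-like view.

```python
from typing import List, Tuple

# Hypothetical run format: (evaluation count, best-so-far objective value).
Run = List[Tuple[int, float]]


def attained(run: Run, budget: int, target: float) -> bool:
    """True if the run reached a value <= target within `budget` evaluations."""
    return any(evals <= budget and value <= target for evals, value in run)


def eaf(runs: List[Run], budget: int, target: float) -> float:
    """Fraction of runs that attained `target` within `budget` (minimization)."""
    if not runs:
        raise ValueError("at least one run is required")
    return sum(attained(run, budget, target) for run in runs) / len(runs)


def attainment_histogram(
    runs: List[Run], budgets: List[int], targets: List[float]
) -> List[List[float]]:
    """EAF evaluated on a grid: one row per target, one column per budget."""
    return [[eaf(runs, b, t) for b in budgets] for t in targets]


# Three made-up runs, for illustration only.
runs = [
    [(1, 10.0), (5, 4.0), (20, 1.0)],
    [(1, 8.0), (10, 3.0)],
    [(1, 12.0), (15, 6.0), (30, 0.5)],
]

grid = attainment_histogram(runs, budgets=[1, 10, 30], targets=[12.0, 4.0, 1.0])
for target, row in zip([12.0, 4.0, 1.0], grid):
    print(target, row)
```

Each row of `grid` is non-decreasing from left to right, because a larger budget can only raise the share of runs that reached a fixed target. This monotonicity is what lets the EAF summarize anytime performance over all budgets and targets at once.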
