Exposing Deepfake with Pixel-wise AR and PPG Correlation from Faint Signals


Oct 29, 2021

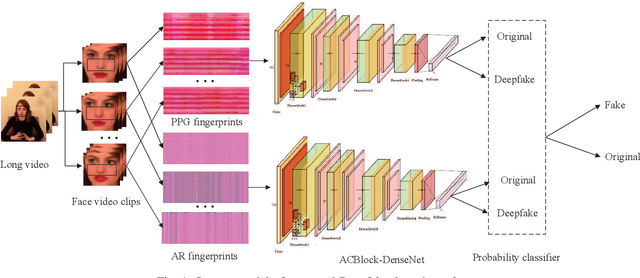

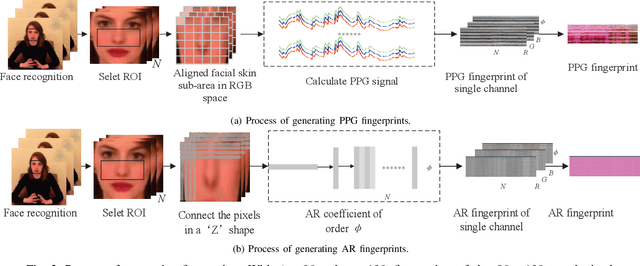

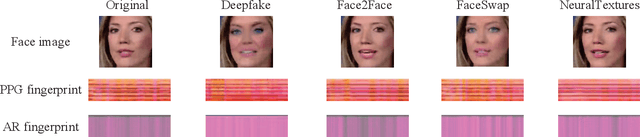

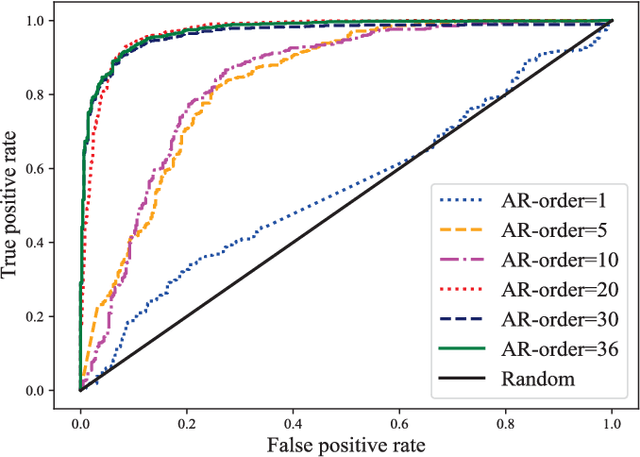

Deepfake poses a serious threat to the reliability of judicial evidence and to intellectual property protection. Despite the urgent need for Deepfake identification, existing pixel-level detection methods struggle to keep up with the growing realism of fake videos and generalize poorly. In this paper, we propose a scheme that exposes Deepfake through faint signals hidden in face videos. The scheme extracts two types of minute information hidden between face pixels: photoplethysmography (PPG) features and auto-regressive (AR) features, which serve as the basis for forensics in the temporal and spatial domains, respectively. According to the principle of PPG, tracking the absorption of light by blood cells allows remote estimation of the heart rate (HR) of a face video in the temporal domain, and irregular HR fluctuations can be treated as traces of tampering. AR coefficients, in turn, reflect inter-pixel correlation and can also reveal the smoothing traces left by up-sampling during fake-face generation. Furthermore, the scheme uses improved densely connected networks (DenseNets) based on asymmetric convolution blocks (ACBlocks) to perform face video authenticity forensics. The asymmetric convolutional structure makes the network more robust to upside-down and left-right flipping of the input feature image, so that the order in which features are stitched does not affect detection results. Simulation results show that the proposed scheme yields more accurate authenticity detection on multiple deep forgery datasets and generalizes better than the benchmark strategy.
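As a rough illustration of the two faint signals described in the abstract, the sketch below estimates a heart rate from a per-frame mean green-channel trace and fits a two-neighbor spatial AR model to a grayscale image. It is not the authors' implementation: the function names `estimate_heart_rate_bpm` and `fit_ar2_coefficients`, the use of the green channel, the 0.7 to 4.0 Hz search band, and the left/upper-neighbor AR model are all assumptions chosen to keep the example short and free of third-party dependencies.

```python
import math


def estimate_heart_rate_bpm(green_means, fps, lo_hz=0.7, hi_hz=4.0, step_hz=0.01):
    """Estimate heart rate (beats per minute) from a PPG-like trace.

    green_means: one mean green intensity per frame over a face region
                 (assumed input; the paper's actual PPG features may differ).
    The function scans the band [lo_hz, hi_hz] and returns the frequency
    with the largest spectral power, converted to beats per minute.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [v - mean for v in green_means]  # remove the constant offset

    best_freq, best_power = lo_hz, -1.0
    steps = int(round((hi_hz - lo_hz) / step_hz))
    for k in range(steps + 1):
        freq = lo_hz + k * step_hz
        w = 2.0 * math.pi * freq / fps
        # Direct evaluation of the Fourier sum at this single frequency.
        re = sum(x * math.cos(w * i) for i, x in enumerate(centered))
        im = sum(x * math.sin(w * i) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0


def fit_ar2_coefficients(image):
    """Least-squares fit of x[r][c] ~ a * x[r][c-1] + b * x[r-1][c].

    image: 2D list of grayscale intensities with rows of equal length.
    Returns (a, b), obtained from the 2x2 normal equations via Cramer's rule.
    This two-neighbor model is a simplifying assumption for illustration.
    """
    s_ll = s_uu = s_lu = s_lx = s_ux = 0.0
    for r in range(1, len(image)):
        for c in range(1, len(image[r])):
            left, up, x = image[r][c - 1], image[r - 1][c], image[r][c]
            s_ll += left * left
            s_uu += up * up
            s_lu += left * up
            s_lx += left * x
            s_ux += up * x
    det = s_ll * s_uu - s_lu * s_lu
    if abs(det) < 1e-12:
        raise ValueError("neighbors are collinear; the AR fit is ill-conditioned")
    a = (s_lx * s_uu - s_ux * s_lu) / det
    b = (s_ux * s_ll - s_lx * s_lu) / det
    return a, b
```

In a detector along the lines of the abstract, an HR estimate computed over successive time windows could be checked for irregular fluctuations, while AR coefficients computed per face region could expose unusually strong inter-pixel correlation left by up-sampling. How the paper actually turns these quantities into feature images for the network is not specified here.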
