Exploring the Limits of Natural Language Inference Based Setup for Few-Shot Intent Detection

Dec 14, 2021

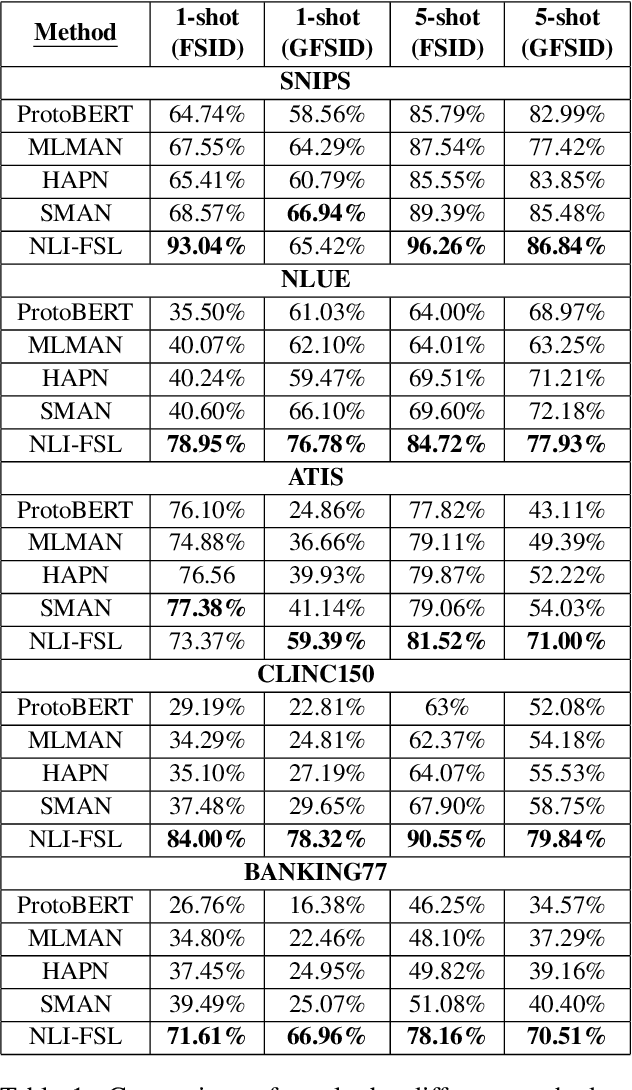

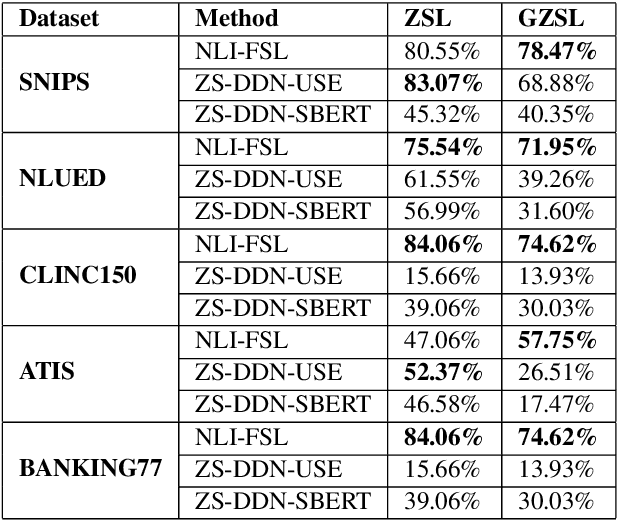

Intent detection is one of the core components of goal-oriented dialog systems. Few-shot learning for intent detection is challenging because annotated utterances are scarce. Although recent works have proposed metric-based and optimization-based methods, the task remains difficult when the label space is large and the number of shots is much smaller. Generalized few-shot learning is harder still, because both novel and seen classes are present during the testing phase. In this work, we propose a simple and effective method based on Natural Language Inference (NLI) that not only tackles few-shot intent detection but also proves useful in zero-shot and generalized few-shot learning problems. Our extensive experiments on a number of Natural Language Understanding (NLU) and Spoken Language Understanding (SLU) datasets show the effectiveness of our approach. In addition, we highlight the settings in which our NLI-based method outperforms the baselines by large margins.
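The abstract does not spell out how intent detection is cast as NLI, so the following is only a minimal sketch of one common framing, not the authors' implementation. It assumes each utterance is treated as a premise and each intent label name as a hypothesis, with an entailment target of 1 when the label matches. The names `label_to_hypothesis`, `build_nli_pairs`, `predict_intent`, and `toy_entail_score` are hypothetical, and the token-overlap scorer is a stand-in for a trained NLI model.

```python
from typing import Callable, List, Tuple


def label_to_hypothesis(label: str) -> str:
    # Hypothetical verbalizer: turn "book_flight" into "book flight".
    return label.replace("_", " ")


def build_nli_pairs(
    examples: List[Tuple[str, str]], labels: List[str]
) -> List[Tuple[str, str, int]]:
    # Pair every few-shot utterance (premise) with every label (hypothesis).
    # Target is 1 (entailment) for the gold label and 0 otherwise.
    pairs = []
    for utterance, gold in examples:
        for label in labels:
            pairs.append((utterance, label_to_hypothesis(label), int(label == gold)))
    return pairs


def predict_intent(
    utterance: str, labels: List[str], entail_score: Callable[[str, str], float]
) -> str:
    # Choose the label whose hypothesis receives the highest entailment score.
    # Labels unseen during training can be scored the same way, which is
    # what makes this framing usable in zero-shot and generalized settings.
    return max(labels, key=lambda lab: entail_score(utterance, label_to_hypothesis(lab)))


def toy_entail_score(premise: str, hypothesis: str) -> float:
    # Stand-in for a trained NLI model (assumption): the fraction of
    # hypothesis tokens that also appear in the premise.
    premise_tokens = set(premise.lower().split())
    hypothesis_tokens = hypothesis.lower().split()
    hits = sum(token in premise_tokens for token in hypothesis_tokens)
    return hits / max(len(hypothesis_tokens), 1)


if __name__ == "__main__":
    labels = ["book_flight", "play_music"]
    shots = [("i want to book a flight to paris", "book_flight")]
    for pair in build_nli_pairs(shots, labels):
        print(pair)
    print(predict_intent("please play some music", labels, toy_entail_score))
```

In practice the toy scorer would be replaced by the entailment probability of a fine-tuned NLI classifier; the pair construction and the arg-max over labels stay the same under this assumed framing.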
