Exploring Temporal Differences in 3D Convolutional Neural Networks

Paper and Code

Sep 07, 2019

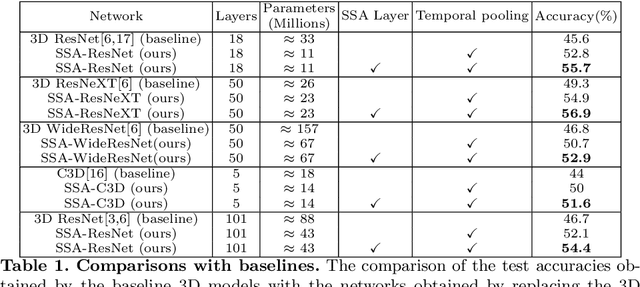

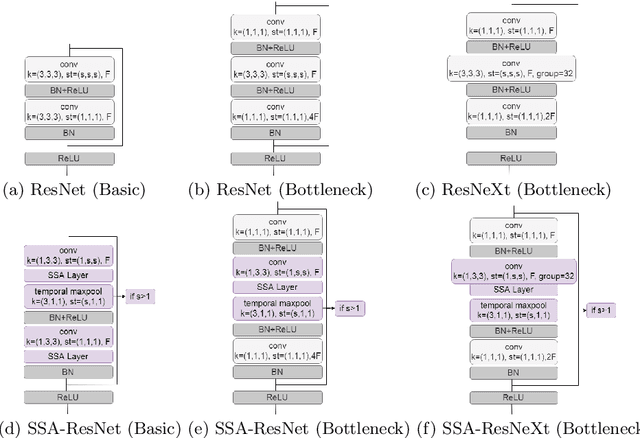

Traditional 3D convolutions are computationally expensive, memory intensive, and due to large number of parameters, they often tend to overfit. On the other hand, 2D CNNs are less computationally expensive and less memory intensive than 3D CNNs and have shown remarkable results in applications like image classification and object recognition. However, in previous works, it has been observed that they are inferior to 3D CNNs when applied on a spatio-temporal input. In this work, we propose a convolutional block which extracts the spatial information by performing a 2D convolution and extracts the temporal information by exploiting temporal differences, i.e., the change in the spatial information at different time instances, using simple operations of shift, subtract and add without utilizing any trainable parameters. The proposed convolutional block has same number of parameters as of a 2D convolution kernel of size nxn, i.e. n^2, and has n times lesser parameters than an nxnxn 3D convolution kernel. We show that the 3D CNNs perform better when the 3D convolution kernels are replaced by the proposed convolutional blocks. We evaluate the proposed convolutional block on UCF101 and ModelNet datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge