Exploiting Depth from Single Monocular Images for Object Detection and Semantic Segmentation

Paper and Code

Oct 06, 2016

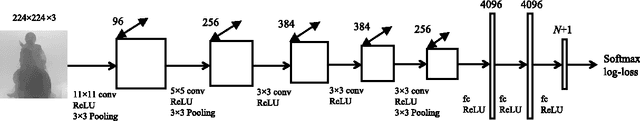

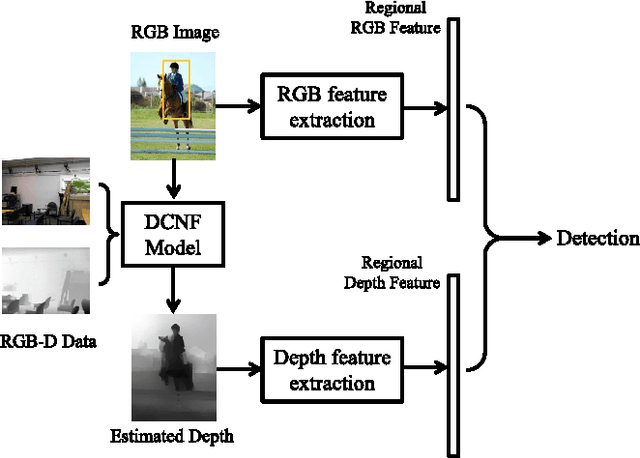

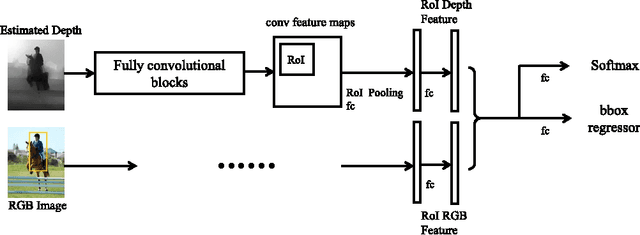

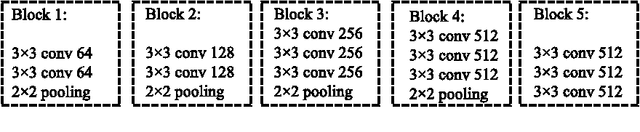

Augmenting RGB data with measured depth has been shown to improve performance on a range of computer vision tasks, including object detection and semantic segmentation. Although depth sensors such as the Microsoft Kinect have made such depth information easy to acquire, the vast majority of images used in vision tasks do not contain depth information. In this paper, we show that augmenting RGB images with estimated depth can also improve the accuracy of both object detection and semantic segmentation. Specifically, we first exploit the recent success of depth estimation from monocular images and learn a deep depth estimation model. We then learn deep depth features from the estimated depth and combine them with RGB features for object detection and semantic segmentation. Additionally, we propose an RGB-D semantic segmentation method that applies a multi-task training scheme, jointly performing semantic label prediction and depth value regression. We test our methods on several datasets and demonstrate that incorporating information from estimated depth improves the performance of both object detection and semantic segmentation.
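The abstract does not state how the RGB and depth features are combined or how the two training objectives are weighted. As a minimal sketch only, the Python below assumes fusion by simple concatenation and a multi-task loss formed as per-pixel cross-entropy plus a weighted squared depth error. The function names and the `depth_weight` parameter are hypothetical and are not taken from the paper.

```python
import math


def fuse_features(rgb_feat, depth_feat):
    """Concatenate an RGB feature vector with a depth feature vector.

    Concatenation is an assumed fusion rule for illustration only.
    """
    return list(rgb_feat) + list(depth_feat)


def softmax_cross_entropy(logits, label):
    """Cross-entropy of one pixel's class logits against its integer label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def multi_task_loss(pixel_logits, labels, pred_depth, true_depth,
                    depth_weight=0.5):
    """Return (total, segmentation, depth) losses averaged over pixels.

    depth_weight is a hypothetical balancing factor, not a value
    reported by the authors.
    """
    n = len(labels)
    seg = sum(softmax_cross_entropy(l, y)
              for l, y in zip(pixel_logits, labels)) / n
    dep = sum((p - t) ** 2 for p, t in zip(pred_depth, true_depth)) / n
    return seg + depth_weight * dep, seg, dep


if __name__ == "__main__":
    fused = fuse_features([0.1, 0.2], [0.9])
    total, seg, dep = multi_task_loss(
        pixel_logits=[[0.0, 0.0], [0.0, 0.0]],
        labels=[0, 1],
        pred_depth=[1.0, 2.0],
        true_depth=[1.0, 4.0],
    )
    print(fused, total, seg, dep)
```

In a real network both terms would be computed on the outputs of shared convolutional layers, so that gradients from the depth regression also shape the features used for labeling; the sketch only shows how the two objectives might be summed.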
