Explaining the (Not So) Obvious: Simple and Fast Explanation of STAN, a Next Point of Interest Recommendation System

Paper and Code

Oct 04, 2024

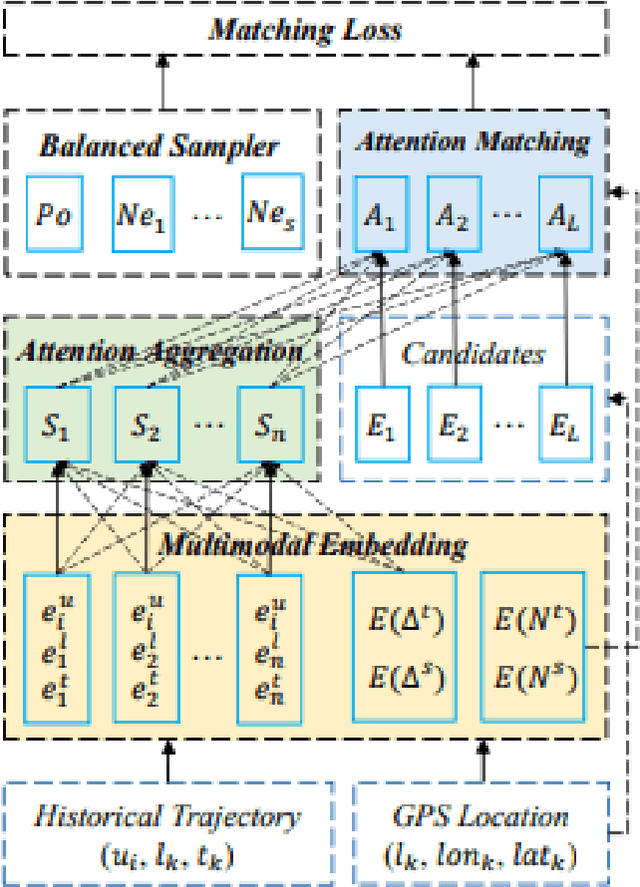

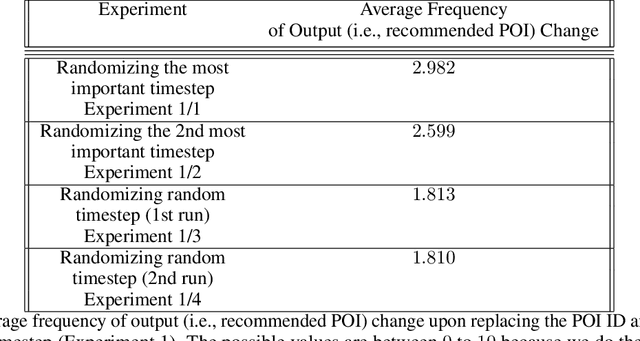

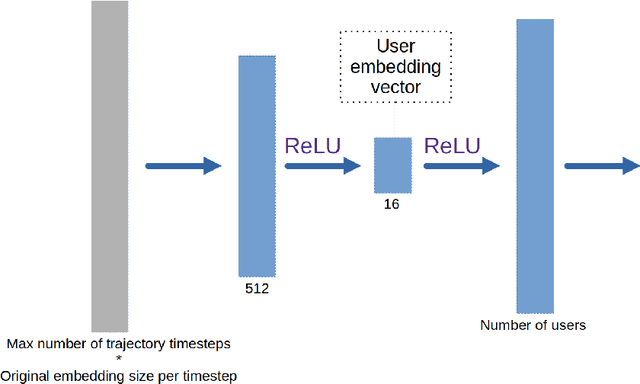

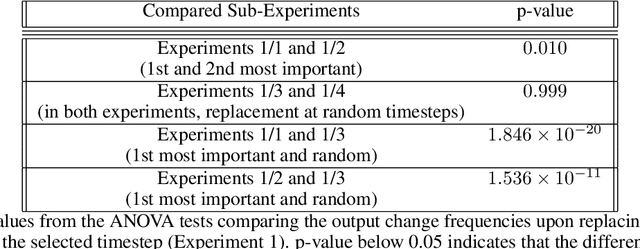

A lot of effort in recent years has been expended on explaining machine learning systems. However, some machine learning methods are inherently explainable and thus are not completely black boxes. This enables developers to make sense of the output without developing a complex and expensive explainability technique. In addition, explainability should be tailored to the context of the problem. In a recommendation system that relies on collaborative filtering, the recommendation is based on the behaviors of similar users, so the explanation should tell which other users are similar to the current user. Similarly, if the recommendation system is based on sequence prediction, the explanation should also tell which input timesteps are the most influential. We demonstrate this philosophy in STAN (Spatio-Temporal Attention Network for Next Location Recommendation), a next Point of Interest recommendation system based on collaborative filtering and sequence prediction. We also show that the explanation helps to "debug" the output.
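To make the idea of "most influential input timesteps" concrete, here is a minimal sketch of how attention weights over a user's past check-ins could be turned into an explanation. It is not taken from the STAN code base: the function names `softmax` and `top_influential_steps` and the example scores are hypothetical, and the sketch assumes the model exposes one raw attention score per input timestep.

```python
import math


def softmax(scores):
    """Normalize raw attention scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_influential_steps(scores, k=2):
    """Return the k timesteps with the largest attention weight.

    Each result is an (index, weight) pair, ordered from most to least
    influential. The index refers to the position in the input sequence.
    """
    weights = softmax(scores)
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    return [(i, weights[i]) for i in ranked[:k]]


# Hypothetical raw attention scores over five past check-ins (assumption).
scores = [0.1, 2.0, 0.3, 1.5, -0.5]
explanation = top_influential_steps(scores, k=2)
for index, weight in explanation:
    print(f"check-in {index} carries attention weight {weight:.2f}")
```

Reading the weights directly in this way is what makes an attention-based model partly self-explaining: no separate explainability method has to be trained, although attention weights are only one possible signal of influence.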
