Explaining Object Detectors via Collective Contribution of Pixels

Paper and Code

Dec 01, 2024

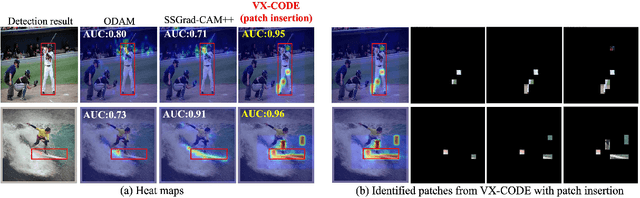

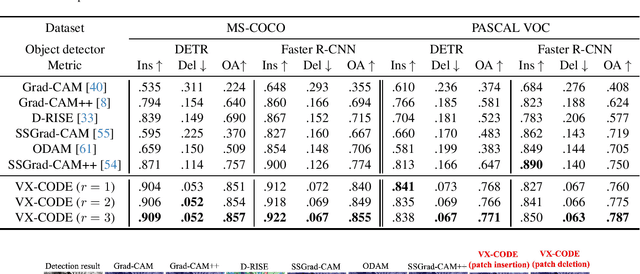

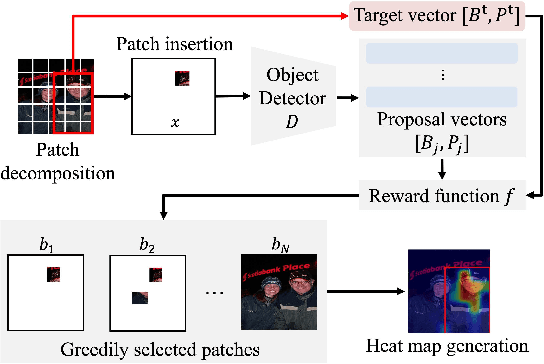

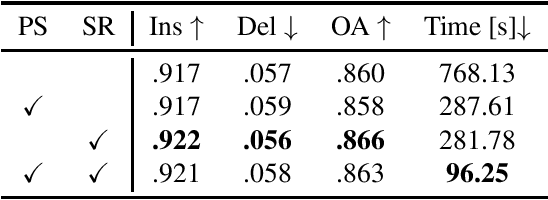

Visual explanations for object detectors are crucial for enhancing their reliability. Since object detectors identify and localize instances by assessing multiple features collectively, generating explanations that capture these collective contributions is critical. However, existing methods focus solely on individual pixel contributions, ignoring the collective contribution of multiple pixels. To address this, we proposed a method for object detectors that considers the collective contribution of multiple pixels. Our approach leverages game-theoretic concepts, specifically Shapley values and interactions, to provide explanations. These explanations cover both bounding box generation and class determination, considering both individual and collective pixel contributions. Extensive quantitative and qualitative experiments demonstrate that the proposed method more accurately identifies important regions in detection results compared to current state-of-the-art methods. The code will be publicly available soon.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge