Explainable Matrix -- Visualization for Global and Local Interpretability of Random Forest Classification Ensembles

May 08, 2020

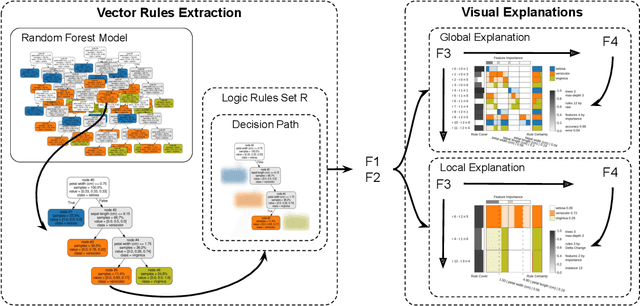

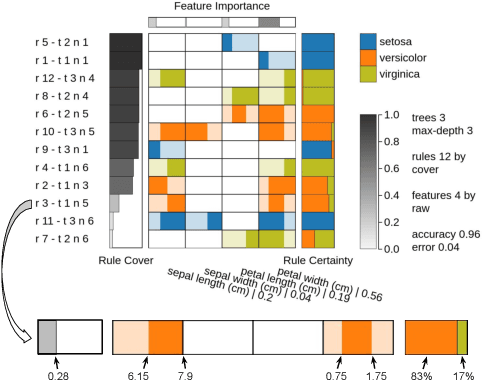

Over the past decades, classification models have proven to be essential machine learning tools given their potential and applicability in various domains. During this time, most researchers have focused on improving quality metrics, even though such metrics convey little information about models' decisions. Recently, this paradigm has shifted, and strategies that go beyond tables and numbers to assist in interpreting models' decisions are increasing in importance. As part of this trend, visualization techniques have been extensively used to support the interpretability of classification models, with a significant focus on rule-based techniques. Despite these advances, existing visualization approaches are limited in visual scalability, and large and complex models, such as those produced by the Random Forest (RF) technique, cannot be visualized in their entirety without losing context. In this paper, we propose Explainable Matrix (ExMatrix), a novel visualization method for RF interpretability that can handle models with massive quantities of rules. It employs a simple yet powerful matrix-like visual metaphor, where rows are rules, columns are features, and cells are rule predicates, enabling the analysis of entire models and the auditing of classification results. The applicability of ExMatrix is confirmed via different usage scenarios, showing how it can be used in practice to increase trust in classification models.
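To make the matrix metaphor concrete, the following is a minimal sketch of the underlying data structure only, not the authors' implementation and not the visualization itself. It assumes each root-to-leaf path of a decision tree is one rule, and that a rule's predicate on a feature can be stored as an interval. The toy trees, the dictionary layout, and the function names (`extract_rules`, `rule_matrix`, `matching_rules`) are all hypothetical and chosen for illustration.

```python
import math

# Hypothetical toy forest: internal nodes test `x[feature] <= threshold`,
# leaves carry a class label. A real RF would have many more, deeper trees.
TREE_A = {"feature": 0, "threshold": 2.5,
          "left": {"label": "setosa"},
          "right": {"feature": 1, "threshold": 1.7,
                    "left": {"label": "versicolor"},
                    "right": {"label": "virginica"}}}
TREE_B = {"feature": 1, "threshold": 0.8,
          "left": {"label": "setosa"},
          "right": {"label": "versicolor"}}


def extract_rules(node, n_features, bounds=None):
    """Return one (intervals, label) pair per root-to-leaf path.

    intervals[f] is the (low, high] range that feature f must fall in;
    (-inf, inf) means the rule places no predicate on that feature.
    """
    if bounds is None:
        bounds = [(-math.inf, math.inf)] * n_features
    if "label" in node:
        return [(list(bounds), node["label"])]
    f, t = node["feature"], node["threshold"]
    low, high = bounds[f]
    left = list(bounds)
    left[f] = (low, min(high, t))      # branch taken when x[f] <= t
    right = list(bounds)
    right[f] = (max(low, t), high)     # branch taken when x[f] > t
    return (extract_rules(node["left"], n_features, left)
            + extract_rules(node["right"], n_features, right))


def rule_matrix(forest, n_features):
    """Stack the rules of every tree: rows are rules, columns are features."""
    rows = []
    for tree in forest:
        rows.extend(extract_rules(tree, n_features))
    return rows


def matching_rules(rows, x):
    """Rules whose predicates all hold for instance x (a local view)."""
    return [(intervals, label) for intervals, label in rows
            if all(low < v <= high for (low, high), v in zip(intervals, x))]


matrix = rule_matrix([TREE_A, TREE_B], n_features=2)
for intervals, label in matrix:
    print(intervals, "->", label)
```

In this sketch the full list of rows corresponds to a global view of the model, while `matching_rules(matrix, x)` keeps only the rules that fire for a single instance, which is the kind of subset one would inspect when auditing an individual classification.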
