Explainable AI Enabled Inspection of Business Process Prediction Models

Paper and Code

Jul 16, 2021

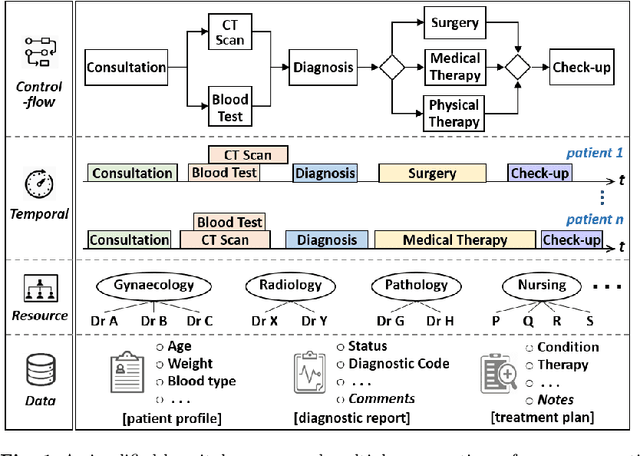

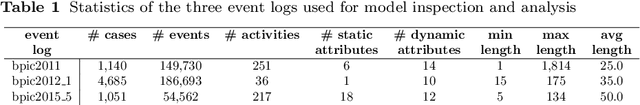

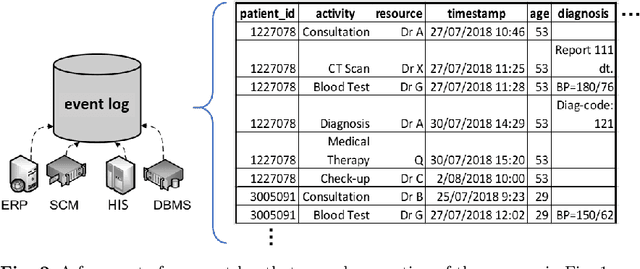

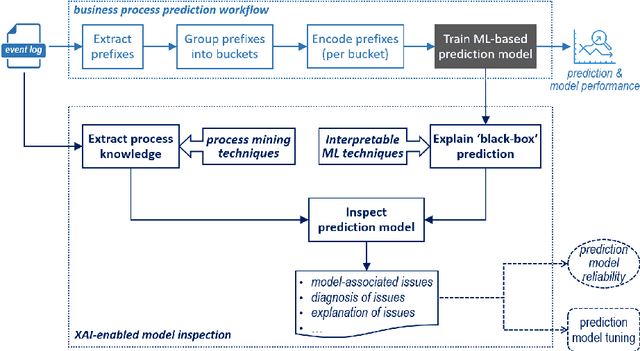

Modern data analytics underpinned by machine learning techniques has become a key enabler to the automation of data-led decision making. As an important branch of state-of-the-art data analytics, business process predictions are also faced with a challenge in regard to the lack of explanation to the reasoning and decision by the underlying `black-box' prediction models. With the development of interpretable machine learning techniques, explanations can be generated for a black-box model, making it possible for (human) users to access the reasoning behind machine learned predictions. In this paper, we aim to present an approach that allows us to use model explanations to investigate certain reasoning applied by machine learned predictions and detect potential issues with the underlying methods thus enhancing trust in business process prediction models. A novel contribution of our approach is the proposal of model inspection that leverages both the explanations generated by interpretable machine learning mechanisms and the contextual or domain knowledge extracted from event logs that record historical process execution. Findings drawn from this work are expected to serve as a key input to developing model reliability metrics and evaluation in the context of business process predictions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge