Experiments with LVT and FRE for Transformer model

Paper and Code

Apr 26, 2020

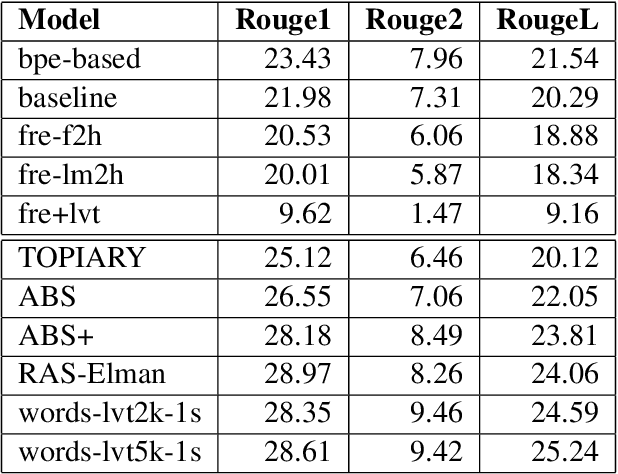

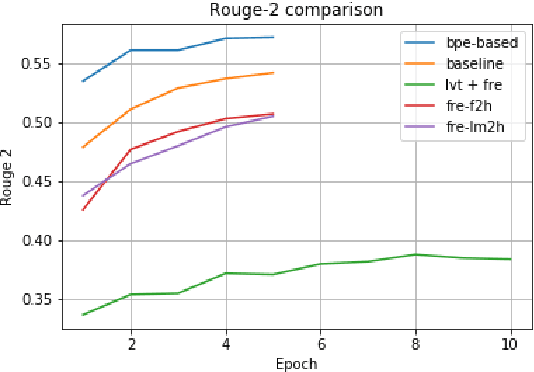

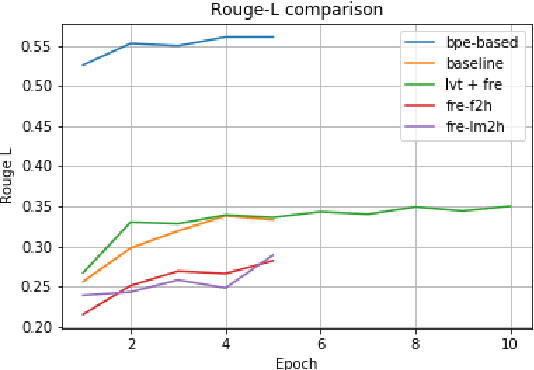

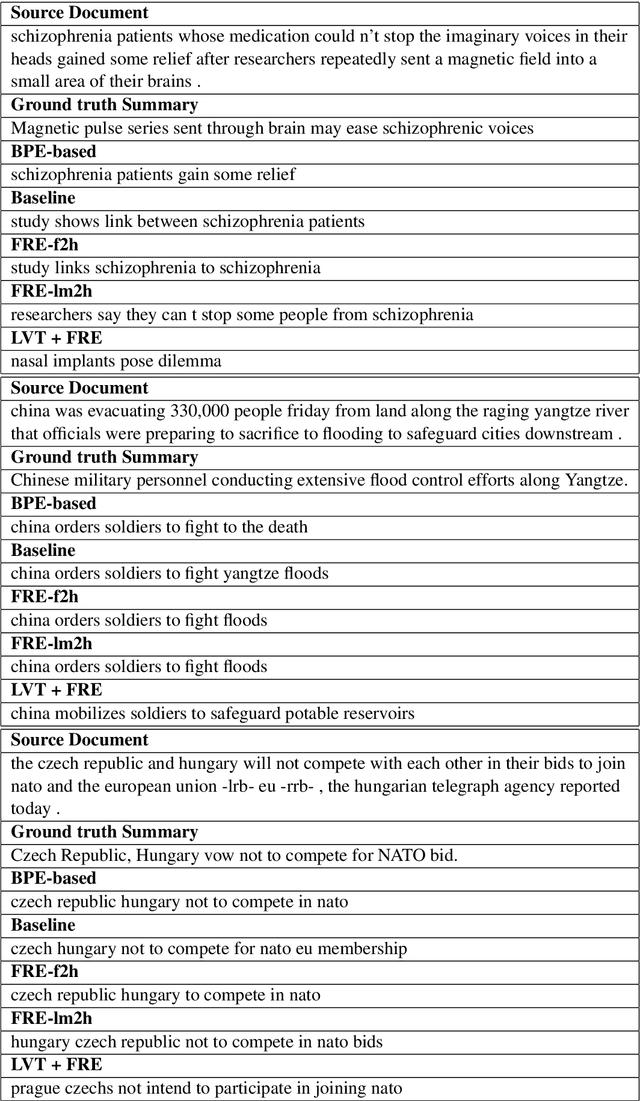

In this paper, we experiment with Large Vocabulary Trick and Feature-rich encoding applied to the Transformer model for Text Summarization. We could not achieve better results, than the analogous RNN-based sequence-to-sequence model, so we tried more models to find out, what improves the results and what deteriorates them.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge