Exactly Computing the Local Lipschitz Constant of ReLU Networks


Mar 02, 2020

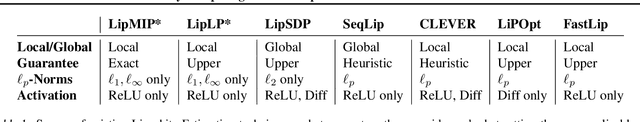

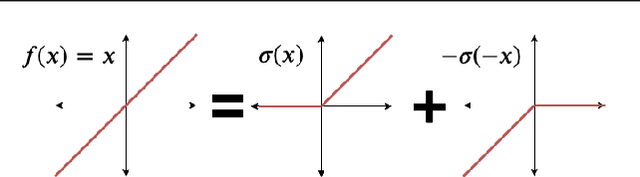

The Lipschitz constant of a neural network is a useful metric for provable robustness and generalization. We present a novel analytic result that relates gradient norms to Lipschitz constants for nondifferentiable functions. Next, we prove hardness and inapproximability results for computing the local Lipschitz constant of ReLU neural networks. We develop a mixed-integer programming formulation to exactly compute the local Lipschitz constant for scalar and vector-valued networks. Finally, we apply our technique to networks trained on synthetic datasets and MNIST, drawing observations about the tightness of competing Lipschitz estimators and the effects of regularized training on Lipschitz constants.
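To make the link between gradient norms and local Lipschitz constants concrete, the following is a minimal sketch and not the mixed-integer programming formulation described above. It assumes a hypothetical one-hidden-layer scalar ReLU network `f(x) = w2 . relu(W1 x + b1)` with made-up weights, measures inputs in the l-infinity norm (so gradients are measured in the dual l1 norm), and brackets the local Lipschitz constant over a box between a sampled lower bound and a naive triangle-inequality upper bound. All function names here are illustrative.

```python
import random

def relu_net_gradient(W1, b1, w2, x):
    """Gradient of f(x) = w2 . relu(W1 x + b1) at a point where f is differentiable."""
    grad = [0.0] * len(x)
    for row, bias, out_w in zip(W1, b1, w2):
        pre = sum(w * xi for w, xi in zip(row, x)) + bias
        if pre > 0:  # only active neurons contribute out_w * row
            for i, w in enumerate(row):
                grad[i] += out_w * w
    return grad

def sampled_lipschitz_lower_bound(W1, b1, w2, center, radius, n_samples=2000, seed=0):
    """Largest l1 gradient norm seen at random points of the l-infinity ball.

    Sampling can only underestimate the supremum, so this is a lower bound.
    """
    rng = random.Random(seed)
    best = 0.0
    for _ in range(n_samples):
        x = [c + rng.uniform(-radius, radius) for c in center]
        grad = relu_net_gradient(W1, b1, w2, x)
        best = max(best, sum(abs(g) for g in grad))
    return best

def naive_upper_bound(W1, w2):
    """Triangle-inequality bound: sum_j |w2_j| * ||W1_j||_1 (all neurons active)."""
    return sum(abs(o) * sum(abs(w) for w in row) for row, o in zip(W1, w2))

# Hypothetical weights, chosen only for illustration.
W1 = [[1.0, -2.0], [0.5, 1.0], [-1.0, 1.0]]
b1 = [0.0, -0.5, 0.25]
w2 = [1.0, -1.0, 2.0]

lower = sampled_lipschitz_lower_bound(W1, b1, w2, center=[0.0, 0.0], radius=1.0)
upper = naive_upper_bound(W1, w2)
print(f"sampled lower bound: {lower:.3f}, naive upper bound: {upper:.3f}")
```

The gap between the two numbers is the kind of slack an exact method is meant to close: sampling may miss the worst-case activation pattern, while the naive bound counts patterns that may never occur inside the region.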
