Evolving Architectures with Gradient Misalignment toward Low Adversarial Transferability

Paper and Code

Sep 13, 2021

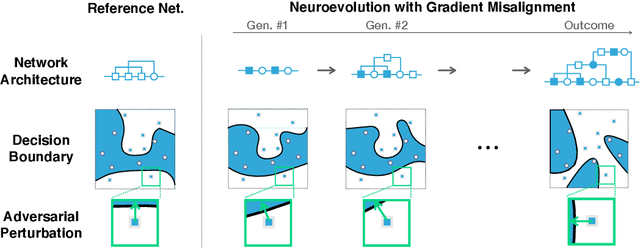

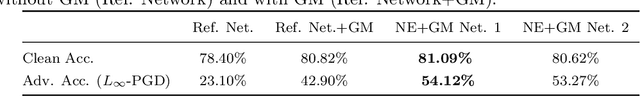

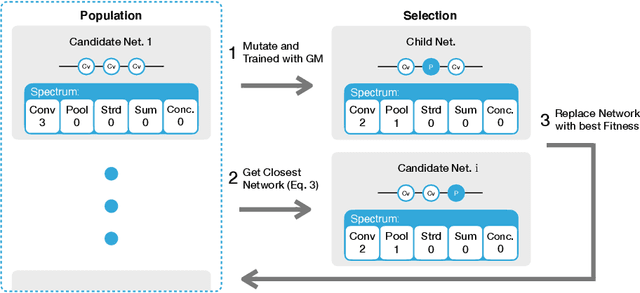

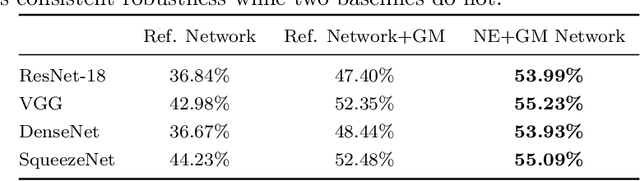

Deep neural network image classifiers are known to be susceptible not only to adversarial examples created for them but even those created for others. This phenomenon poses a potential security risk in various black-box systems relying on image classifiers. The reason behind such transferability of adversarial examples is not yet fully understood and many studies have proposed training methods to obtain classifiers with low transferability. In this study, we address this problem from a novel perspective through investigating the contribution of the network architecture to transferability. Specifically, we propose an architecture searching framework that employs neuroevolution to evolve network architectures and the gradient misalignment loss to encourage networks to converge into dissimilar functions after training. Our experiments show that the proposed framework successfully discovers architectures that reduce transferability from four standard networks including ResNet and VGG, while maintaining a good accuracy on unperturbed images. In addition, the evolved networks trained with gradient misalignment exhibit significantly lower transferability compared to standard networks trained with gradient misalignment, which indicates that the network architecture plays an important role in reducing transferability. This study demonstrates that designing or exploring proper network architectures is a promising approach to tackle the transferability issue and train adversarially robust image classifiers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge