Evaluating the impact of an explainable machine learning system on the interobserver agreement in chest radiograph interpretation

Paper and Code

Apr 01, 2023

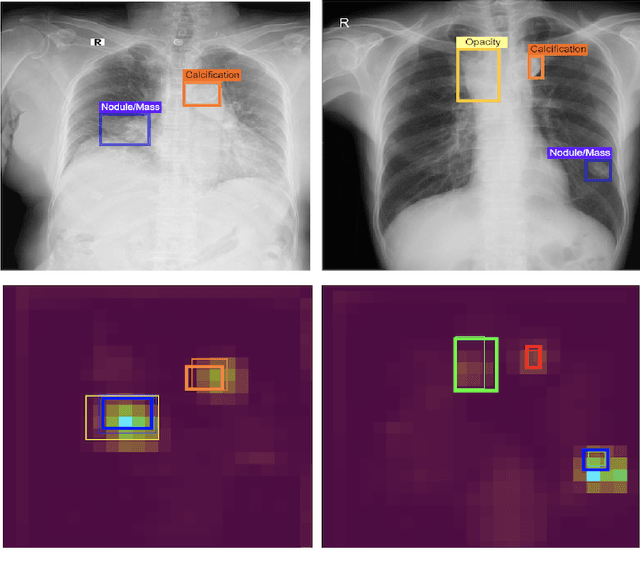

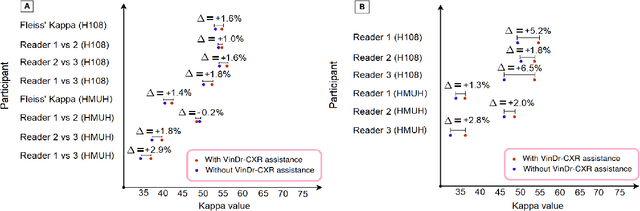

We conducted a prospective study to measure the clinical impact of an explainable machine learning system on interobserver agreement in chest radiograph interpretation. When used as a diagnosis-supporting tool, the AI system, which we call VinDr-CXR, significantly improved the agreement between six radiologists, with an increase of 1.5% in mean Fleiss' Kappa. We also observed that, after the radiologists consulted the AI's suggestions, the agreement between each radiologist and the system increased by 3.3% in mean Cohen's Kappa. This work has been accepted for publication in IEEE Access; this paper is a short version submitted to the Midwest Machine Learning Symposium (MMLS 2023), Chicago, IL, USA.
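For readers unfamiliar with the two agreement statistics named in the abstract, the following is a minimal sketch of how Cohen's Kappa (two raters) and Fleiss' Kappa (a fixed number of raters per item) are conventionally computed. It is not the study's code: the function names, the binary "finding present / absent" labels, and the toy ratings are all hypothetical, and how the study averages kappa into a "mean" value is not specified here.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels.
    p_o = sum(x == y for x, y in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    # Undefined (division by zero) if both raters use one identical label throughout.
    return (p_o - p_e) / (1 - p_e)


def fleiss_kappa(ratings):
    """Fleiss' kappa; `ratings` is a list of items, each a list of labels,
    with the same number of raters (at least two) for every item."""
    n_items = len(ratings)
    n_raters = len(ratings[0])
    counts = [Counter(item) for item in ratings]
    categories = set().union(*counts)
    # Overall proportion of assignments falling in each category.
    p_j = {
        c: sum(cnt[c] for cnt in counts) / (n_items * n_raters)
        for c in categories
    }
    # Per-item agreement: share of rater pairs that agree on that item.
    per_item = [
        (sum(v * v for v in cnt.values()) - n_raters)
        / (n_raters * (n_raters - 1))
        for cnt in counts
    ]
    p_bar = sum(per_item) / n_items
    p_e = sum(p * p for p in p_j.values())
    # Undefined (division by zero) if every rating is the same category.
    return (p_bar - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical toy data: 1 = finding present, 0 = finding absent.
    reader = [1, 0, 1, 0]
    system = [1, 1, 0, 0]
    print("Cohen's kappa:", cohen_kappa(reader, system))

    three_readers = [[1, 1, 0], [0, 0, 1]]
    print("Fleiss' kappa:", fleiss_kappa(three_readers))
```

Both statistics correct raw agreement for the agreement expected by chance, which is why a kappa of 0 means chance-level agreement and 1 means perfect agreement.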

