Estimate Exchange over Network is Good for Distributed Hard Thresholding Pursuit

Sep 22, 2017

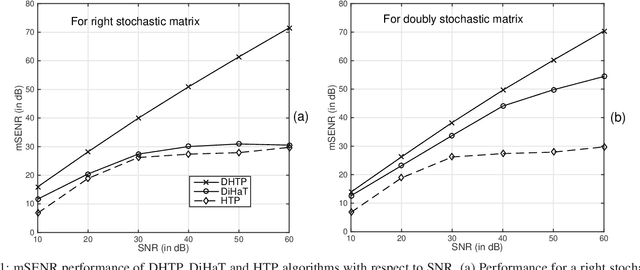

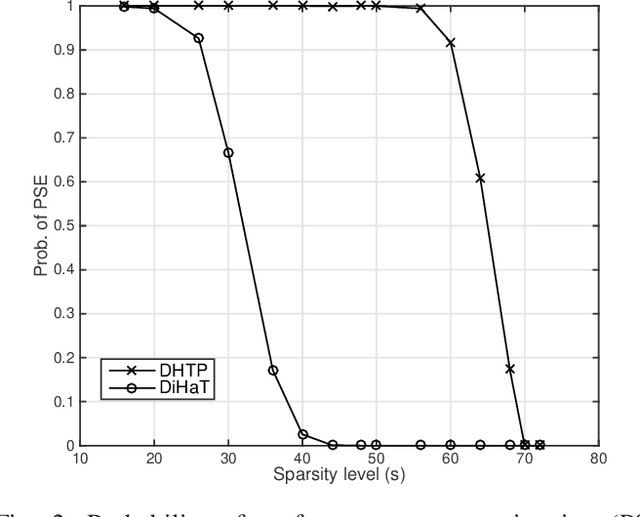

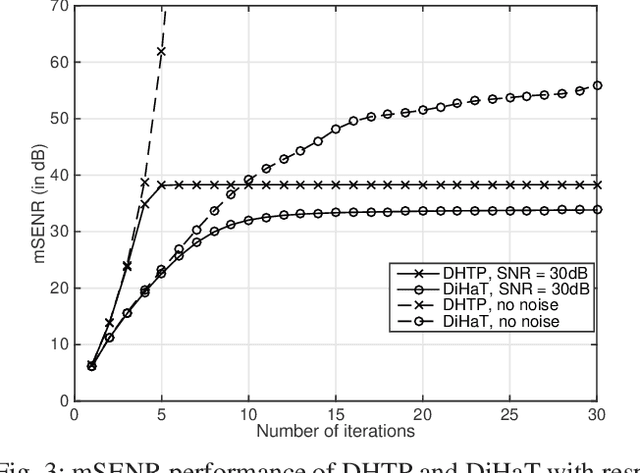

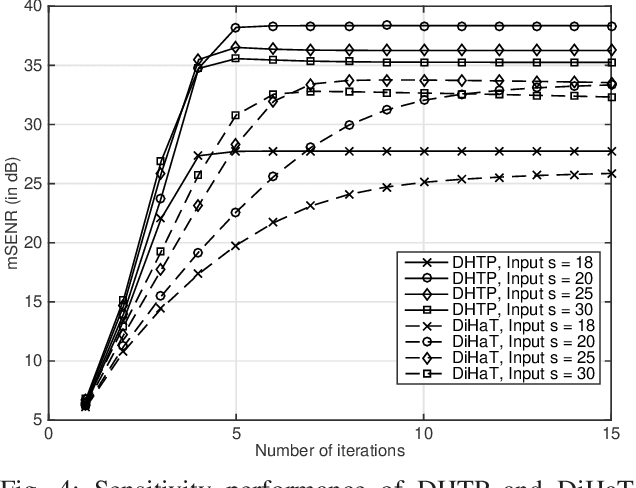

We investigate an existing distributed algorithm for learning sparse signals or data over networks. The algorithm is iterative and exchanges intermediate estimates of the sparse signal over the network. Because only these estimates are exchanged, the strategy incurs limited communication overhead. Our objective in this article is to show that the strategy learns well despite this limited communication. To that end, we first provide a restricted isometry property (RIP)-based theoretical analysis of the convergence of the iterative algorithm. Then, using simulations, we show that the algorithm performs competitively in learning sparse signals compared with an existing alternative distributed algorithm, which exchanges more information, including observations and system parameters.
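To make the idea of exchanging only intermediate estimates concrete, here is a minimal sketch in Python. It is not the algorithm analysed in the paper, whose exact steps the abstract does not give. It assumes that each node runs one standard hard thresholding pursuit step on its own data, that the nodes then combine their neighbours' estimates with a row-stochastic weight matrix `W`, and that each node re-thresholds the result to `s` nonzeros. The function names, the weight matrix, and the fixed iteration count are all hypothetical choices made for illustration.

```python
import numpy as np


def hard_threshold(x, s):
    """Keep the s largest-magnitude entries of x and zero out the rest."""
    out = np.zeros_like(x)
    idx = np.argpartition(np.abs(x), -s)[-s:]
    out[idx] = x[idx]
    return out


def htp_step(A, y, x, s):
    """One hard thresholding pursuit step using a single node's data.

    Select the support of the s largest entries of the gradient-corrected
    estimate, then solve least squares restricted to that support.
    """
    proxy = x + A.T @ (y - A @ x)
    support = np.argpartition(np.abs(proxy), -s)[-s:]
    coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
    x_new = np.zeros_like(x)
    x_new[support] = coef
    return x_new


def distributed_htp(As, ys, W, s, n_iter=20):
    """Illustrative estimate-exchange loop (an assumption, not the paper's code).

    As, ys : per-node measurement matrices and observation vectors.
    W      : (nodes x nodes) row-stochastic combination weights (assumed).
    Only the rows of `local` (the estimates) cross the network; the
    observations ys and matrices As never leave their nodes.
    """
    n_nodes, dim = len(As), As[0].shape[1]
    X = np.zeros((n_nodes, dim))
    for _ in range(n_iter):
        # Local update at every node from its own observations.
        local = np.stack([htp_step(As[i], ys[i], X[i], s) for i in range(n_nodes)])
        # Exchange step: weighted combination of neighbours' estimates.
        mixed = W @ local
        # Restore s-sparsity after mixing.
        X = np.stack([hard_threshold(mixed[i], s) for i in range(n_nodes)])
    return X


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_nodes, m, dim, s = 4, 80, 100, 4
    x_true = np.zeros(dim)
    x_true[rng.choice(dim, s, replace=False)] = rng.choice([-1.0, 1.0], s)
    As = [rng.standard_normal((m, dim)) / np.sqrt(m) for _ in range(n_nodes)]
    ys = [A @ x_true for A in As]          # noiseless observations
    W = np.full((n_nodes, n_nodes), 1.0 / n_nodes)  # fully connected, uniform weights
    X = distributed_htp(As, ys, W, s)
    print("max error across nodes:", np.max(np.abs(X - x_true)))
```

In this sketch each node sends one length-`dim` vector per neighbour per iteration, whereas sharing observations and system parameters would mean sending every `ys[i]` and `As[i]`. The uniform `W` stands in for whatever network topology is actually used.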
