Essential Features: Reducing the Attack Surface of Adversarial Perturbations with Robust Content-Aware Image Preprocessing


Dec 03, 2020

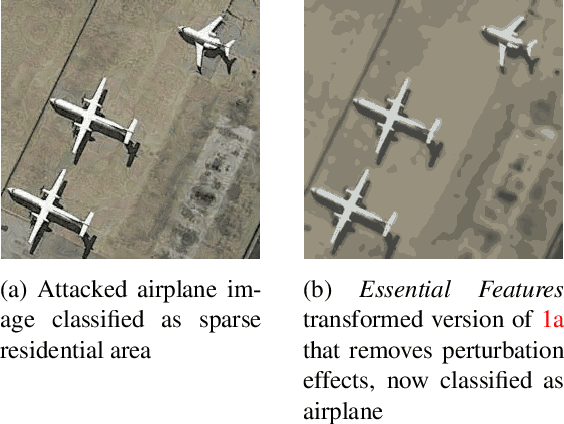

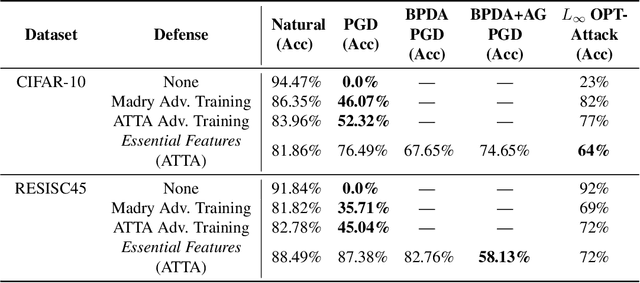

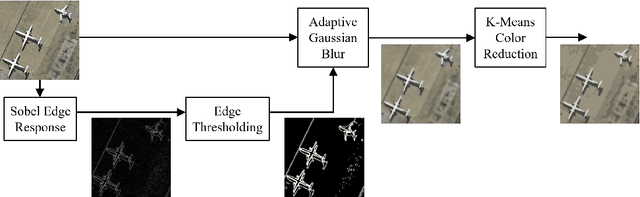

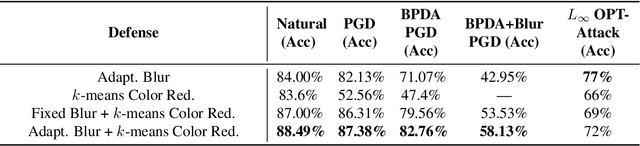

Adversaries can add perturbations to an image to fool machine learning models into making incorrect predictions. One defense against such perturbations is to apply image preprocessing functions that remove their effects. Existing preprocessing approaches tend to be designed independently of the image content and can be beaten by adaptive attacks. We propose a novel image preprocessing technique called Essential Features that transforms the image into a robust feature space, preserving the main content of the image while significantly reducing the effects of the perturbations. Specifically, we combine an adaptive blurring strategy that preserves the main edge features of the original object with a k-means color reduction step that simplifies the image to its k most representative colors. This significantly limits the attack surface for adversaries by restricting their ability to adjust colors while preserving pertinent features of the original image. We additionally design several adaptive attacks and find that our approach remains more robust than previous baselines. We achieve 64% robustness on CIFAR-10 and 58.13% robustness on RESISC45, raising robustness by over 10% versus state-of-the-art adversarial training techniques against adaptive white-box and black-box attacks. The results suggest that strategies that retain essential features in images through adaptive, content-aware processing hold promise as a complement to adversarial training for boosting robustness against adversarial inputs.
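To make the color reduction step concrete, here is a minimal sketch of k-means color quantization in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the function name `kmeans_color_reduce`, the flat list-of-RGB-tuples image representation, the fixed iteration count, and the seeded random initialization are all hypothetical choices, and the adaptive blurring stage described in the abstract is not shown.

```python
import random


def kmeans_color_reduce(pixels, k, iters=10, seed=0):
    """Map each RGB pixel to the nearest of at most k centroid colors.

    Hypothetical helper for illustration: `pixels` is a flat list of
    (r, g, b) tuples, and the centroids are found with Lloyd's algorithm.
    """
    rng = random.Random(seed)
    distinct = sorted(set(pixels))
    k = min(k, len(distinct))  # cannot have more clusters than colors
    centroids = rng.sample(distinct, k)  # assumption: init from image colors

    def nearest(p):
        # Index of the centroid with the smallest squared RGB distance to p.
        return min(
            range(k),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])),
        )

    for _ in range(iters):
        sums = [[0.0, 0.0, 0.0] for _ in range(k)]
        counts = [0] * k
        for p in pixels:
            i = nearest(p)
            counts[i] += 1
            for c in range(3):
                sums[i][c] += p[c]
        # Move each centroid to the mean of its cluster; keep empty ones as-is.
        centroids = [
            tuple(s / counts[i] for s in sums[i]) if counts[i] else centroids[i]
            for i in range(k)
        ]

    # Replace every pixel with its (rounded) centroid color.
    return [tuple(round(c) for c in centroids[nearest(p)]) for p in pixels]


# Two color groups with small per-pixel variations, reduced to k = 2 colors.
pixels = [
    (250, 10, 10), (252, 12, 8), (248, 9, 11),
    (10, 10, 250), (12, 8, 251), (9, 11, 249),
]
reduced = kmeans_color_reduce(pixels, k=2)
print(reduced)
```

In this toy input the small per-pixel variations within each group collapse onto a single representative color, which is the intuition behind limiting an adversary's ability to adjust colors. On real images one would typically use an array library and a tuned value of k.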
