ESFPNet: efficient deep learning architecture for real-time lesion segmentation in autofluorescence bronchoscopic video


Jul 15, 2022

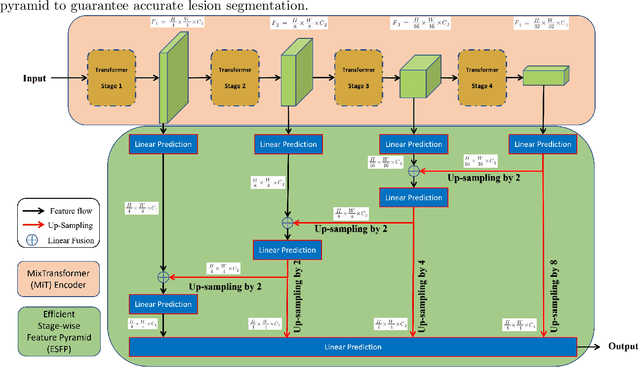

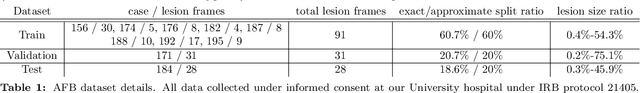

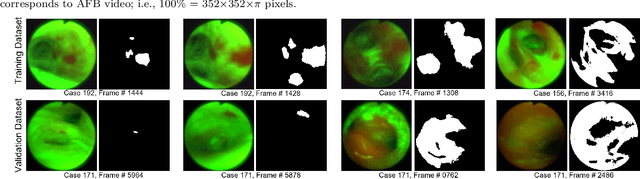

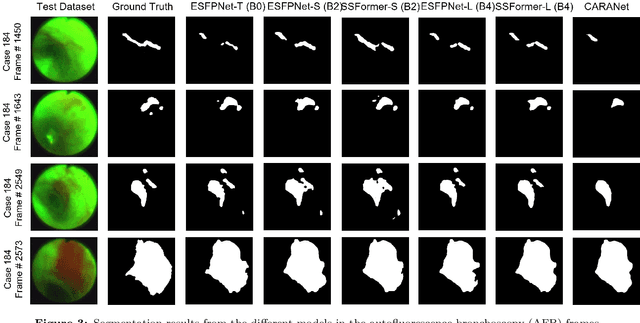

Lung cancer tends to be detected at an advanced stage, resulting in a high patient mortality rate. Thus, recent research has focused on early disease detection. Lung cancer generally first appears as lesions developing within the bronchial epithelium of the airway walls. Bronchoscopy is the procedure of choice for effective noninvasive bronchial lesion detection. In particular, autofluorescence bronchoscopy (AFB) discriminates between the autofluorescence properties of normal and diseased tissue: lesions appear reddish brown in AFB video frames, while normal tissue appears green. Because recent studies show that AFB offers high sensitivity to lesions, it has become a potentially pivotal method during the standard bronchoscopic airway exam for early-stage lung cancer detection. Unfortunately, manual inspection of AFB video is extremely tedious and error-prone, and limited effort has been expended toward potentially more robust automatic AFB lesion detection and segmentation. We propose a real-time deep learning architecture, ESFPNet, for robust detection and segmentation of bronchial lesions from an AFB video stream. The architecture features an encoder structure that exploits pretrained Mix Transformer (MiT) encoders and a stage-wise feature pyramid (ESFP) decoder structure. Results from AFB videos derived from lung cancer patient airway exams indicate that our approach gives mean Dice index and intersection-over-union (IoU) values of 0.782 and 0.658, respectively, while having a processing throughput of 27 frames/sec. These values are superior to results achieved by other competing architectures that use Mix Transformer or CNN-based encoders. Moreover, the architecture's superior performance on the ETIS-LaribPolypDB dataset demonstrates its potential applicability to other domains.
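
For readers unfamiliar with the two reported segmentation metrics, the following minimal sketch shows how a Dice index and an IoU value are commonly computed for one predicted binary mask against one ground-truth mask. It is an illustration only, not the authors' evaluation code: the function name `dice_and_iou`, the nested-list mask representation, and the convention of scoring two empty masks as perfect agreement are all assumptions made here.

```python
from typing import Sequence, Tuple

# A binary mask as rows of 0/1 values (hypothetical representation for this sketch).
Mask = Sequence[Sequence[int]]


def dice_and_iou(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Return (Dice, IoU) for two binary masks of the same shape."""
    intersection = 0  # pixels marked as lesion in both masks
    pred_area = 0     # pixels marked as lesion in the prediction
    truth_area = 0    # pixels marked as lesion in the ground truth
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p:
                pred_area += 1
            if t:
                truth_area += 1
            if p and t:
                intersection += 1

    union = pred_area + truth_area - intersection
    if union == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0, 1.0

    dice = 2 * intersection / (pred_area + truth_area)
    iou = intersection / union
    return dice, iou


# Toy 2x3 example: each mask has 3 lesion pixels, 2 of which overlap.
pred = [[0, 1, 1],
        [0, 1, 0]]
truth = [[0, 1, 0],
         [0, 1, 1]]
dice, iou = dice_and_iou(pred, truth)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")
```

Mean values such as those quoted above are typically obtained by averaging per-frame scores over a test set; how the averaging was performed for the reported numbers is not specified in this abstract.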
