Error-Resilient Machine Learning in Near Threshold Voltage via Classifier Ensemble


Jul 03, 2016

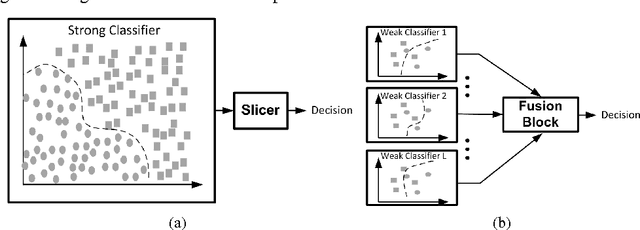

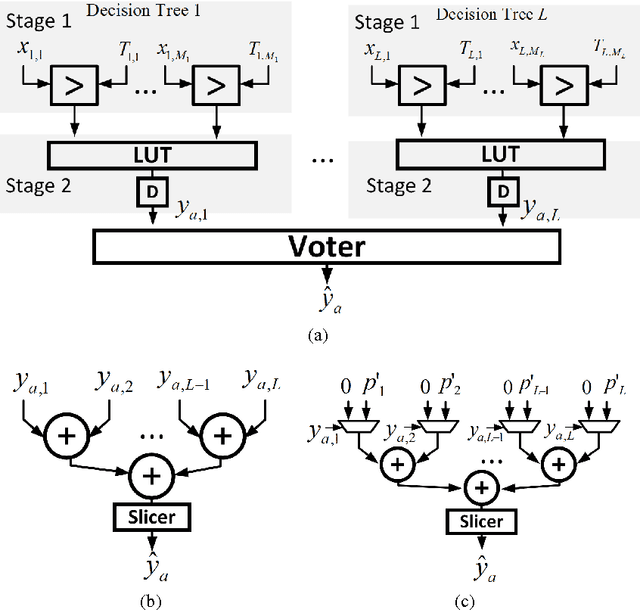

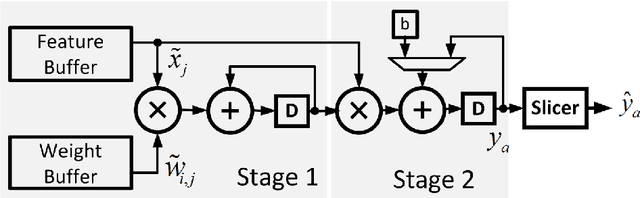

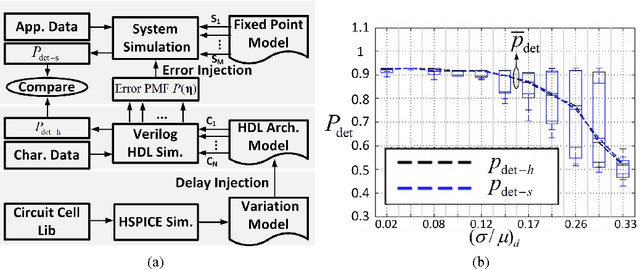

In this paper, we present the design of error-resilient machine learning architectures by employing a distributed machine learning framework referred to as classifier ensemble (CE). CE combines several simple classifiers to obtain a strong one. In contrast, centralized machine learning employs a single complex block. We compare the random forest (RF) and the support vector machine (SVM), which are representative techniques from the CE and centralized frameworks, respectively. Employing a dataset from the UCI Machine Learning Repository and architectural-level error models in a commercial 45 nm CMOS process, we demonstrate that RF-based architectures are significantly more robust than SVM architectures in the presence of timing errors due to process variations in the near-threshold voltage (NTV) region (0.3 V to 0.7 V). In particular, the RF architecture exhibits a detection accuracy (P_{det}) that varies by 3.2% while maintaining a median P_{det} > 0.9 at a gate-level delay variation of 28.9%. In comparison, the SVM exhibits a P_{det} that varies by 16.8%. Additionally, we propose an error-weighted voting technique that incorporates the timing error statistics of the NTV circuit fabric to further enhance robustness. Simulation results confirm that error-weighted voting achieves a P_{det} that varies by only 1.4%, which is 12X lower than that of the SVM (16.8% / 1.4% = 12).
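The abstract does not spell out how the error-weighted voting is computed, so the following is only a minimal sketch of the general idea, under stated assumptions: each base classifier casts a binary vote, a timing error flips that vote independently with a known probability, and each vote is weighted by the log-odds of being error-free. The function names (`error_weights`, `weighted_vote`, `simulate`), the log-odds weighting, and the flip probabilities are all hypothetical and are not taken from the paper.

```python
import math
import random


def majority_vote(votes):
    """Plain majority vote over binary votes encoded as +1 / -1."""
    return 1 if sum(votes) >= 0 else -1


def error_weights(flip_probs):
    """Assumed weighting: log-odds that a vote is NOT corrupted.

    Each probability must lie strictly between 0 and 1.
    """
    return [math.log((1.0 - p) / p) for p in flip_probs]


def weighted_vote(votes, weights):
    """Sign of the weighted sum of +1 / -1 votes."""
    score = sum(w * v for w, v in zip(weights, votes))
    return 1 if score >= 0 else -1


def simulate(flip_probs, trials=20000, seed=0):
    """Estimate accuracy of plain vs. error-weighted voting.

    Simplifying assumption: every error-free classifier votes for the
    true label, so all mistakes come from independent vote flips.
    """
    rng = random.Random(seed)
    weights = error_weights(flip_probs)
    correct_plain = 0
    correct_weighted = 0
    for _ in range(trials):
        label = rng.choice((1, -1))
        # A timing error flips an individual vote with probability p.
        votes = [-label if rng.random() < p else label for p in flip_probs]
        correct_plain += majority_vote(votes) == label
        correct_weighted += weighted_vote(votes, weights) == label
    return correct_plain / trials, correct_weighted / trials


if __name__ == "__main__":
    # Hypothetical ensemble: three reliable and four error-prone voters.
    plain, weighted = simulate([0.02, 0.02, 0.02, 0.45, 0.45, 0.45, 0.45])
    print(f"plain majority accuracy:  {plain:.4f}")
    print(f"error-weighted accuracy:  {weighted:.4f}")
```

In this toy setting the weighted vote is dominated by the reliable voters, so it degrades less when some ensemble members are error-prone. How the paper derives its weights from the NTV timing error statistics may differ from this sketch.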
