Error Estimation for Random Fourier Features


Feb 22, 2023

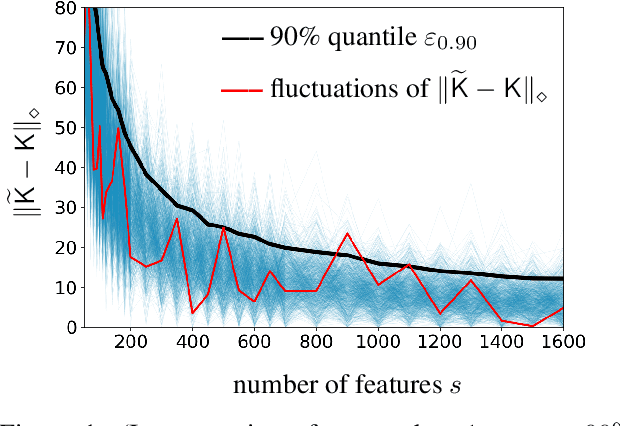

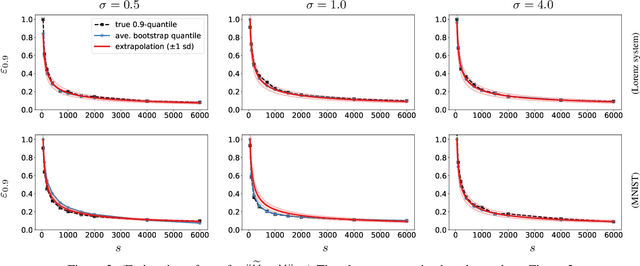

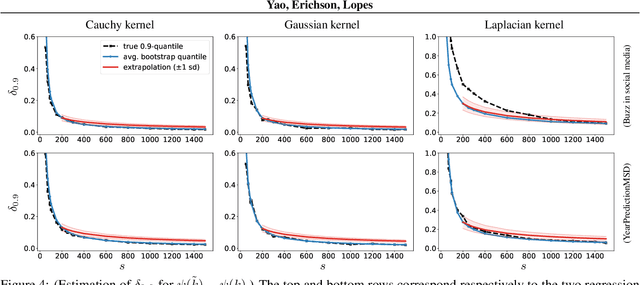

Random Fourier Features (RFF) is among the most popular and broadly applicable approaches for scaling up kernel methods. In essence, RFF allows the user to avoid costly computations on a large kernel matrix by replacing it with a fast randomized approximation. However, a pervasive difficulty in applying RFF is that the user does not know the actual error of the approximation, or how this error will propagate into downstream learning tasks. Up to now, the RFF literature has primarily dealt with these uncertainties using theoretical error bounds, but from a user's standpoint such results are typically impractical, because they are either highly conservative or involve unknown quantities. To tackle these general issues in a data-driven way, this paper develops a bootstrap approach to numerically estimate the errors of RFF approximations. Three key advantages of this approach are: (1) The error estimates are specific to the problem at hand, avoiding the pessimism of worst-case bounds. (2) The approach is flexible with respect to different uses of RFF, and can even estimate errors in downstream learning tasks. (3) The approach enables adaptive computation, so that the user can quickly inspect the error of a rough initial kernel approximation and then predict how much extra work is needed. Lastly, these benefits come at only a modest computational cost for obtaining the error estimates.
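To make the idea concrete, the following is a minimal sketch of how a bootstrap error estimate for an RFF kernel approximation might look. It is not the paper's exact procedure: it assumes a Gaussian kernel, measures error as the largest entrywise deviation of the approximate kernel matrix, and resamples the random features with replacement. The function names (`rff_features`, `bootstrap_error_quantile`), the bandwidth `sigma`, and all sizes are hypothetical choices made for illustration.

```python
import numpy as np


def rff_features(X, W, b):
    """Map X (n x d) to s random Fourier features: sqrt(2/s) * cos(XW^T + b)."""
    s = W.shape[0]
    return np.sqrt(2.0 / s) * np.cos(X @ W.T + b)


def bootstrap_error_quantile(Z, n_boot=200, alpha=0.1, seed=None):
    """Bootstrap estimate of the (1 - alpha) quantile of the max-entry error.

    Illustrative assumption: the s feature columns of Z are treated as an
    i.i.d. sample and resampled with replacement; the fluctuation of the
    resampled kernel matrix around Z Z^T stands in for the unknown error
    of Z Z^T around the exact kernel matrix.
    """
    rng = np.random.default_rng(seed)
    s = Z.shape[1]
    K_tilde = Z @ Z.T
    errs = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, s, size=s)  # resample feature indices
        Zb = Z[:, idx]
        errs[i] = np.max(np.abs(Zb @ Zb.T - K_tilde))
    return np.quantile(errs, 1.0 - alpha)


# Small synthetic problem (all sizes are arbitrary illustrative values).
rng = np.random.default_rng(0)
n, d, s, sigma = 50, 5, 500, 1.5
X = rng.standard_normal((n, d))

# Gaussian kernel exp(-||x - y||^2 / (2 sigma^2)) has frequencies ~ N(0, I / sigma^2).
W = rng.standard_normal((s, d)) / sigma
b = rng.uniform(0.0, 2.0 * np.pi, size=s)
Z = rff_features(X, W, b)
K_tilde = Z @ Z.T

# The exact kernel matrix is computed here only to check the estimate;
# in practice it is the quantity the user wants to avoid forming.
sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
K_exact = np.exp(-sq_dists / (2.0 * sigma**2))

true_err = np.max(np.abs(K_tilde - K_exact))
est_err = bootstrap_error_quantile(Z, n_boot=200, alpha=0.1, seed=1)
print(f"actual max-entry error:      {true_err:.4f}")
print(f"bootstrap 90% error estimate: {est_err:.4f}")
```

The bootstrap loop uses only the feature matrix `Z`, never the exact kernel matrix, which is what would allow a user to judge a rough initial approximation before deciding whether to draw more features.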
