Equivariant Neural Network for Factor Graphs

Paper and Code

Sep 29, 2021

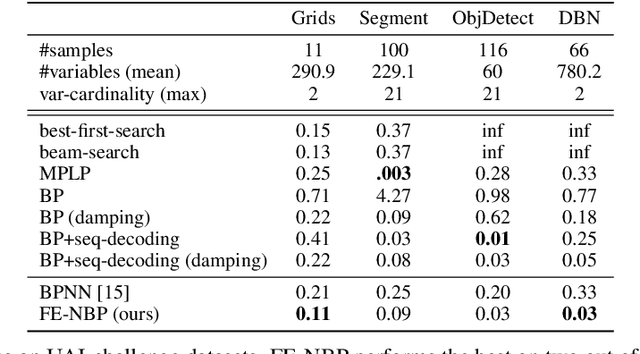

Several indices used in a factor graph data structure can be permuted without changing the underlying probability distribution. An algorithm that performs inference on a factor graph should ideally be equivariant or invariant to permutations of global indices of nodes, variable orderings within a factor, and variable assignment orderings. However, existing neural network-based inference procedures fail to take advantage of this inductive bias. In this paper, we precisely characterize these isomorphic properties of factor graphs and propose two inference models: Factor-Equivariant Neural Belief Propagation (FE-NBP) and Factor-Equivariant Graph Neural Networks (FE-GNN). FE-NBP is a neural network that generalizes BP and respects each of the above properties of factor graphs while FE-GNN is an expressive GNN model that relaxes an isomorphic property in favor of greater expressivity. Empirically, we demonstrate on both real-world and synthetic datasets, for both marginal inference and MAP inference, that FE-NBP and FE-GNN together cover a range of sample complexity regimes: FE-NBP achieves state-of-the-art performance on small datasets while FE-GNN achieves state-of-the-art performance on large datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge