Ensembling Shift Detectors: an Extensive Empirical Evaluation

Jun 28, 2021

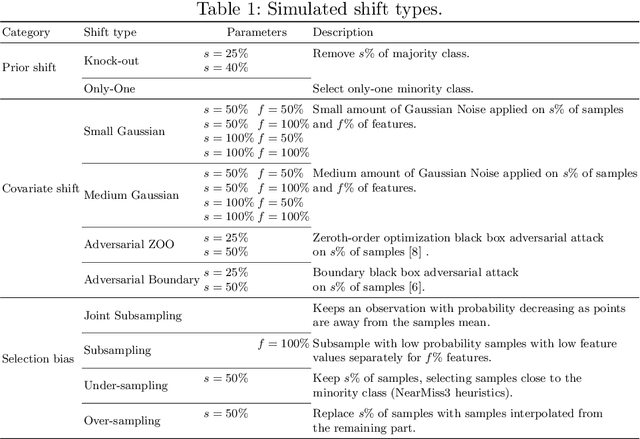

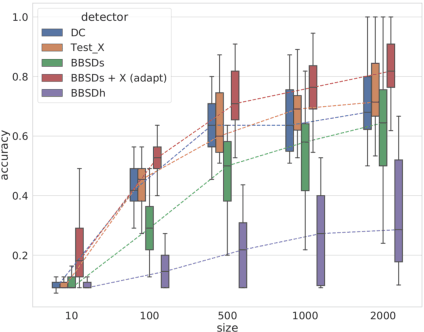

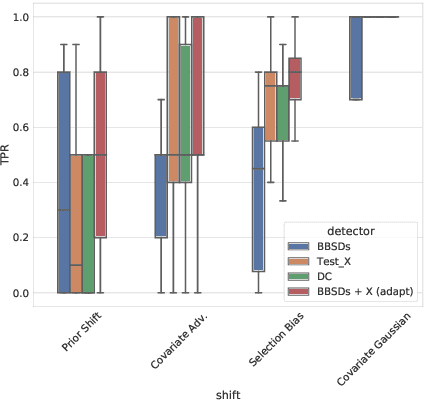

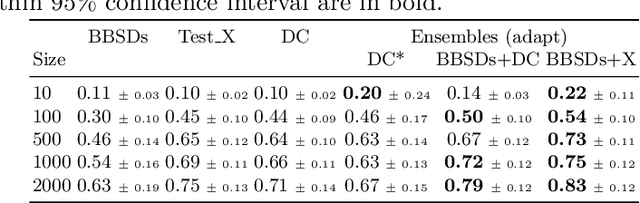

Dataset shift refers to the situation in which the data used to train a machine learning model differs from the data the model encounters in operation. Although several types of shift occur naturally, existing shift detectors are usually designed to address only one specific type. We propose a simple yet powerful technique for ensembling complementary shift detectors while tuning the significance level of each detector's statistical test to the dataset. This yields more robust shift detection that can address the different types of shift, which is essential in real-life settings where the precise shift type is often unknown. We validate the approach with a large-scale, statistically sound benchmark study over various synthetic shifts applied to real-world structured datasets.
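
To make the idea of ensembling detectors with per-detector significance levels concrete, here is a minimal sketch. It is not the paper's implementation: the two detectors (`mean_gap`, `spread_gap`), the permutation test, the "flag if any detector rejects" rule, and the fixed `alphas` are all illustrative assumptions. In particular, the abstract says the significance levels are tuned to the dataset, whereas this sketch simply takes them as given.

```python
import random
import statistics


def permutation_pvalue(ref, new, stat, n_perm=200, seed=0):
    """Two-sample permutation p-value for a scalar gap statistic."""
    rng = random.Random(seed)
    observed = stat(ref, new)
    pooled = list(ref) + list(new)
    n = len(ref)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if stat(pooled[:n], pooled[n:]) >= observed:
            hits += 1
    # The +1 terms keep the p-value strictly positive.
    return (hits + 1) / (n_perm + 1)


# Hypothetical detectors, each sensitive to a different kind of shift.
def mean_gap(a, b):
    return abs(statistics.fmean(a) - statistics.fmean(b))


def spread_gap(a, b):
    return abs(statistics.pstdev(a) - statistics.pstdev(b))


def ensemble_detects_shift(ref, new, detectors, alphas):
    """Flag a shift if any detector rejects at its own significance level."""
    return any(
        permutation_pvalue(ref, new, detector) < alpha
        for detector, alpha in zip(detectors, alphas)
    )


# Synthetic one-dimensional data, assumed purely for illustration.
rng = random.Random(42)
reference = [rng.gauss(0.0, 1.0) for _ in range(200)]
mean_shifted = [rng.gauss(1.0, 1.0) for _ in range(200)]
spread_shifted = [rng.gauss(0.0, 3.0) for _ in range(200)]

detectors = [mean_gap, spread_gap]
alphas = [0.025, 0.025]  # assumed fixed here; the paper tunes these per dataset
```

Calling `ensemble_detects_shift(reference, mean_shifted, detectors, alphas)` exercises the location-sensitive detector, while passing `spread_shifted` exercises the scale-sensitive one. Combining the two is what lets a single call cover both cases without knowing the shift type in advance.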
