Enhancing Multi-Robot Perception via Learned Data Association

Jul 01, 2021

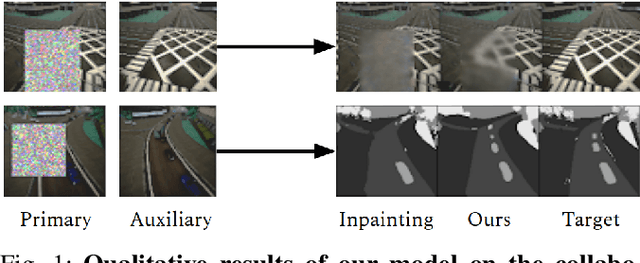

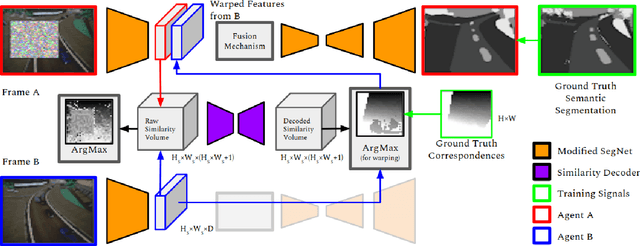

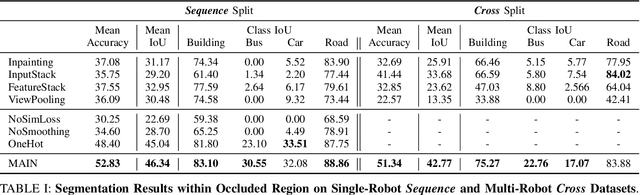

In this paper, we address the multi-robot collaborative perception problem, specifically in the context of multi-view infilling for distributed semantic segmentation. This setting entails several real-world challenges, especially those relating to unregistered multi-agent image data. Solutions must effectively leverage multiple, non-static, and intermittently overlapping RGB perspectives. To this end, we propose the Multi-Agent Infilling Network: an extensible neural architecture that can be deployed, in a distributed manner, to each agent in a robotic swarm. Each robot is in charge of locally encoding and decoding visual information, and an extensible neural mechanism allows for an uncertainty-aware and context-based exchange of intermediate features between robots. We demonstrate improved performance on a realistic multi-robot AirSim dataset.
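To make the idea of an uncertainty-aware, context-based feature exchange more concrete, here is a minimal sketch in plain Python. It is not the paper's architecture: the function `fuse_features`, the use of scaled dot-product similarity as the "context" score, and the use of a scalar confidence per neighbor as the "uncertainty" signal are all assumptions made for illustration. The sketch only shows how one robot might weight intermediate features received from other robots before decoding.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def fuse_features(query, neighbor_features, neighbor_confidences):
    """Fuse neighbor features into one vector (hypothetical helper).

    query: feature vector of the requesting robot.
    neighbor_features: one feature vector per neighboring robot.
    neighbor_confidences: assumed scalar confidence in (0, 1] per neighbor.

    Each neighbor is scored by its similarity to the query (context) plus
    the log of its confidence (uncertainty), then the scores are normalized
    with a softmax and used to average the neighbor features.
    """
    scale = math.sqrt(len(query))
    scores = [
        dot(query, feat) / scale + math.log(max(conf, 1e-8))
        for feat, conf in zip(neighbor_features, neighbor_confidences)
    ]
    weights = softmax(scores)
    fused = [
        sum(w * feat[i] for w, feat in zip(weights, neighbor_features))
        for i in range(len(query))
    ]
    return fused, weights


# Example: two neighbors, the first one better matches the query.
fused, weights = fuse_features(
    query=[1.0, 0.0],
    neighbor_features=[[1.0, 0.0], [0.0, 1.0]],
    neighbor_confidences=[0.9, 0.9],
)
```

In this sketch a neighbor contributes more when its features match the requesting robot's context and when it reports high confidence; a learned system would presumably replace both hand-written scores with trained components.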