Enhancing Battery Storage Energy Arbitrage with Deep Reinforcement Learning and Time-Series Forecasting

Paper and Code

Oct 25, 2024

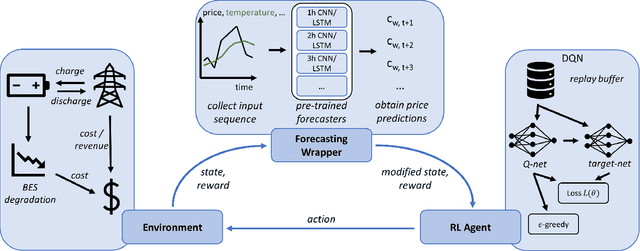

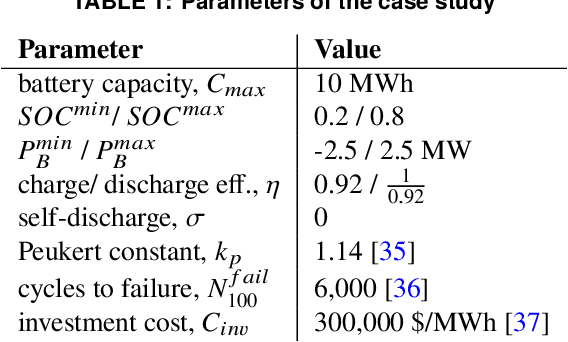

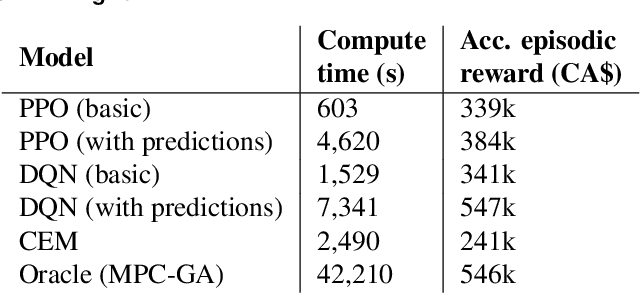

Energy arbitrage is one of the most profitable sources of income for battery operators, generating revenue by buying electricity when prices are low and selling it when prices are high. Forecasting these revenues is challenging due to the inherent uncertainty of electricity prices. Deep reinforcement learning (DRL) has emerged in recent years as a promising tool that copes with uncertainty by training on large quantities of historical data. However, without access to future electricity prices, DRL agents can only react to the currently observed price and cannot learn to plan battery dispatch. Therefore, in this study, we combine DRL with time-series forecasting methods from deep learning to improve performance on energy arbitrage. We conduct a case study using price data from Alberta, Canada, which is highly non-stationary and characterized by irregular price spikes. This data is challenging to forecast even when state-of-the-art deep learning models consisting of convolutional layers, recurrent layers, and attention modules are deployed. Our results show that energy arbitrage with DRL-enabled battery control still benefits significantly from these imperfect predictions, but only if predictors for several horizons are combined. When multiple predictions for the next 24-hour window are grouped, accumulated rewards increase by 60% for deep Q-networks (DQN) compared to the experiments without forecasts. We hypothesize that multiple predictors, despite their imperfections, convey useful information about the future development of electricity prices through a "majority vote" principle, enabling the DRL agent to learn more profitable control policies.
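The idea of feeding forecasts for several horizons to the agent can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the names `BatteryState`, `build_observation`, and `step`, the three discrete actions (idle, charge, discharge), the 1 MW power rating, the hourly time step, and the lossless battery model. It shows only how an observation might bundle the current price, the state of charge, and one price forecast per horizon, and how an arbitrage reward could be computed for a discrete action of the kind a DQN agent selects.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class BatteryState:
    """Hypothetical battery model: stored energy and usable capacity in MWh."""
    soc_mwh: float
    capacity_mwh: float


def build_observation(price_now: float, soc_fraction: float,
                      forecasts: Sequence[float]) -> List[float]:
    """Concatenate current price, state of charge, and one forecast per horizon."""
    return [float(price_now), float(soc_fraction), *(float(f) for f in forecasts)]


def step(state: BatteryState, action: int, price: float,
         power_mw: float = 1.0, dt_h: float = 1.0) -> Tuple[BatteryState, float]:
    """Apply one action: 0 = idle, 1 = charge (buy), 2 = discharge (sell).

    Returns the new state and the reward (revenue minus cost).
    Assumes no efficiency losses or degradation, for simplicity.
    """
    energy = power_mw * dt_h
    if action == 1:
        # Cannot charge beyond the remaining headroom.
        energy = min(energy, state.capacity_mwh - state.soc_mwh)
        return BatteryState(state.soc_mwh + energy, state.capacity_mwh), -price * energy
    if action == 2:
        # Cannot discharge more than is stored.
        energy = min(energy, state.soc_mwh)
        return BatteryState(state.soc_mwh - energy, state.capacity_mwh), price * energy
    return state, 0.0


# Usage: buy at a low price, sell at a high one.
battery = BatteryState(soc_mwh=0.0, capacity_mwh=2.0)
obs = build_observation(price_now=30.0, soc_fraction=0.0, forecasts=[35.0, 90.0, 40.0])
battery, cost = step(battery, action=1, price=30.0)
battery, revenue = step(battery, action=2, price=90.0)
profit = cost + revenue
```

With a single observed price the agent has no basis for waiting; with the forecast entries in the observation, a learned policy can in principle associate "a higher price is predicted at some horizon" with charging now. Whether that happens depends on forecast quality and training, which is what the study evaluates.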
