Enhancement of Novel View Synthesis Using Omnidirectional Image Completion

Mar 18, 2022

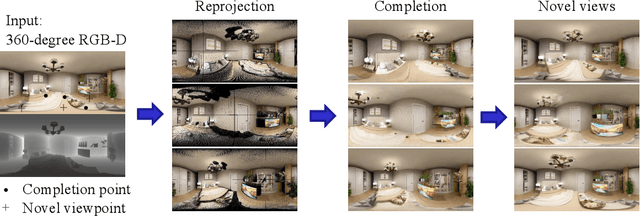

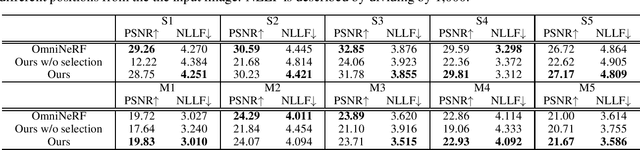

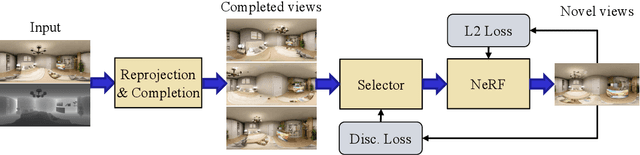

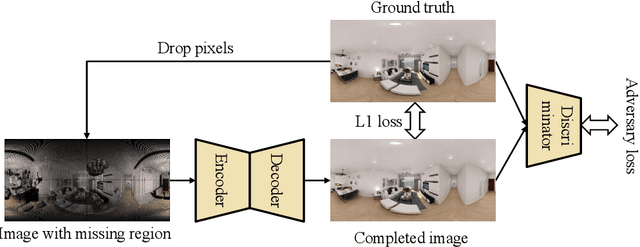

We present a method for synthesizing novel views from a single 360-degree image based on the neural radiance field (NeRF). Prior studies rely on the neighborhood interpolation capability of multi-layer perceptrons to complete regions that are missing because of occlusion and zooming, which leads to artifacts. In the proposed method, the input image is reprojected to 360-degree images at other camera positions, the missing regions of the reprojected images are completed by a generative model trained in a self-supervised manner, and the completed images are used to train the NeRF. Because the completed images are not mutually consistent in 3D, we introduce a method that trains the NeRF while dynamically selecting a sparse set of completed images, so as to reduce the discrimination error between the synthesized views and real images. Experiments indicate that the proposed method can synthesize plausible novel views while preserving the features of the scene for both artificial and real-world data.
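The reprojection step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes an equirectangular input with a per-pixel depth map (the abstract does not say how scene geometry is obtained), a pure camera translation, and a simple nearest-point z-buffer. The names `equirect_directions` and `reproject` are hypothetical. The returned mask marks the pixels that received no sample, which are the regions a completion model would have to fill.

```python
import numpy as np

def equirect_directions(height, width):
    """Unit ray direction for each pixel center of an equirectangular image."""
    lon = (np.arange(width) + 0.5) / width * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    lon, lat = np.meshgrid(lon, lat)
    return np.stack([np.cos(lat) * np.sin(lon),
                     np.sin(lat),
                     np.cos(lat) * np.cos(lon)], axis=-1)

def reproject(image, depth, translation):
    """Forward-warp `image` to a camera moved by `translation` (assumed setup).

    image: (H, W) or (H, W, C) equirectangular image.
    depth: (H, W) distance along each ray (an assumption, not from the abstract).
    Returns (warped image, boolean mask of pixels with no sample).
    """
    h, w = depth.shape
    # Lift pixels to 3D, then express the points relative to the new camera.
    points = equirect_directions(h, w) * depth[..., None]
    shifted = points - np.asarray(translation, dtype=float)
    dist = np.linalg.norm(shifted, axis=-1)

    # Project back to equirectangular pixel coordinates.
    lon = np.arctan2(shifted[..., 0], shifted[..., 2])
    lat = np.arcsin(np.clip(shifted[..., 1] / np.maximum(dist, 1e-9), -1.0, 1.0))
    col = np.floor((lon + np.pi) / (2.0 * np.pi) * w).astype(int) % w
    row = np.clip(np.floor((np.pi / 2.0 - lat) / np.pi * h).astype(int), 0, h - 1)
    target = (row * w + col).ravel()

    # Z-buffer: keep only the nearest point that lands on each target pixel.
    zbuf = np.full(h * w, np.inf)
    np.minimum.at(zbuf, target, dist.ravel())
    visible = dist.ravel() <= zbuf[target]

    channels = image.shape[2:]
    out = np.zeros((h * w,) + channels, dtype=image.dtype)
    out[target[visible]] = image.reshape((h * w,) + channels)[visible]
    missing = np.isinf(zbuf).reshape(h, w)
    return out.reshape(image.shape), missing

# Toy scene: a unit sphere around the source camera, viewed from a shifted position.
rng = np.random.default_rng(0)
image = rng.random((32, 64, 3))
depth = np.ones((32, 64))
warped, missing = reproject(image, depth, translation=(0.5, 0.0, 0.0))
print("fraction of missing pixels:", missing.mean())
```

Even in this toy scene, moving the camera leaves holes where the surface is magnified. That corresponds to the "zooming" case mentioned in the abstract. Occlusion holes would additionally appear with non-constant depth.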
