ENG: End-to-end Neural Geometry for Robust Depth and Pose Estimation using CNNs


Nov 06, 2018

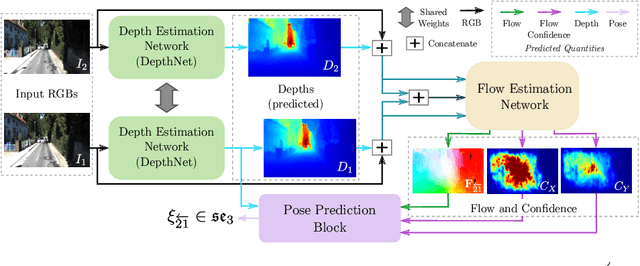

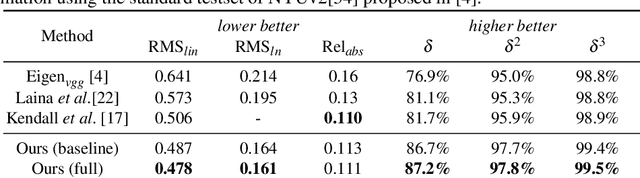

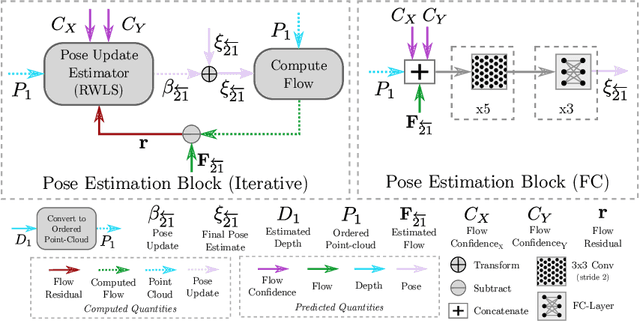

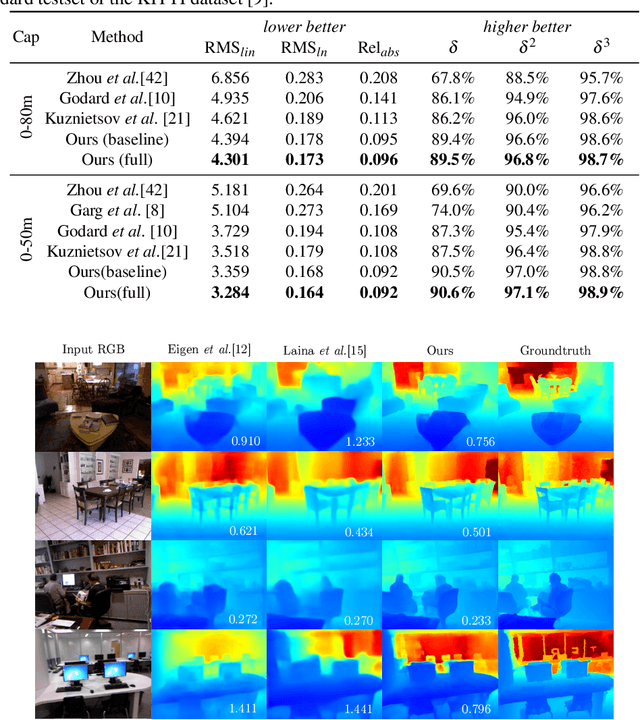

Recovering structure and motion parameters from an image pair or a sequence of images is a well-studied problem in computer vision. It is typically solved with Structure from Motion (SfM) or Simultaneous Localization and Mapping (SLAM) algorithms, depending on the real-time requirements. Recently, with the advent of Convolutional Neural Networks (CNNs), researchers have explored using machine learning techniques to reconstruct the 3D structure of a scene and jointly predict the camera pose. In this work, we present a framework that achieves state-of-the-art performance on single-image depth prediction for both indoor and outdoor scenes. The depth prediction system is then extended to predict optical flow and, ultimately, the camera pose, and is trained end-to-end. Our motion estimation framework outperforms previous motion prediction systems, and we also demonstrate that the state-of-the-art metric depths can be further improved using knowledge of the pose.
