Energy-aware Scheduling of Jobs in Heterogeneous Cluster Systems Using Deep Reinforcement Learning

Paper and Code

Dec 11, 2019

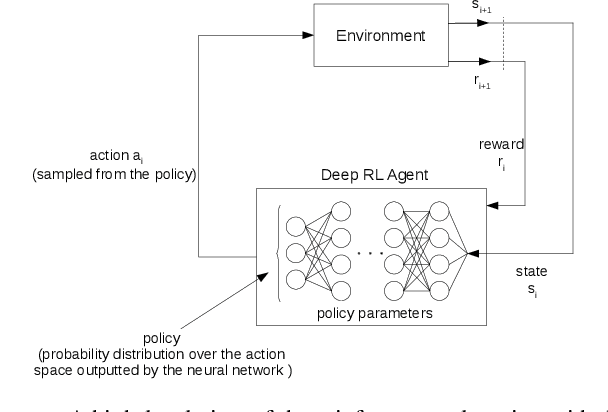

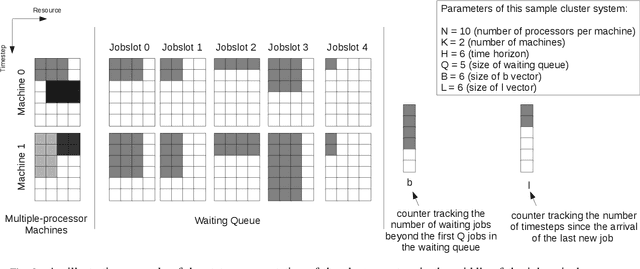

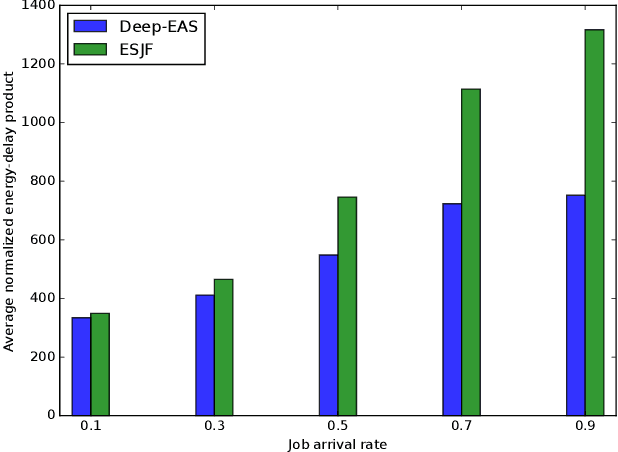

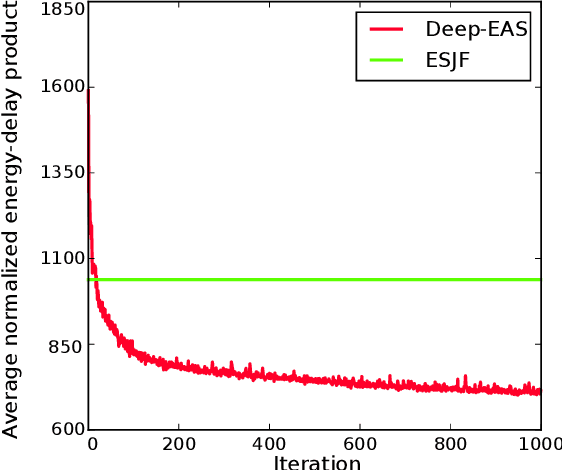

Energy consumption is one of the most critical concerns in designing computing devices, from portable embedded systems to computer clusters. Over the past decade, cluster systems have also become popular platforms for running compute-intensive real-time applications, where performance is of great importance. However, because real-time workloads differ widely in their characteristics, developing general job scheduling solutions that efficiently address both energy consumption and performance in real-time cluster systems is challenging. In this paper, inspired by recent advances in applying deep reinforcement learning to resource management problems, we present Deep-EAS, a scheduler that learns efficient energy-aware scheduling strategies for workloads with different characteristics without any prior knowledge of the scheduling task at hand. Results show that Deep-EAS converges quickly and outperforms standard manually tuned heuristics, especially under heavy load.
