End-to-end Weakly-supervised Multiple 3D Hand Mesh Reconstruction from Single Image

Paper and Code

Apr 18, 2022

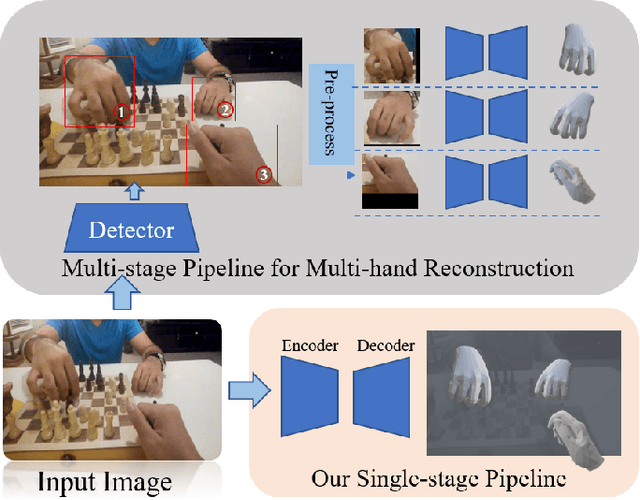

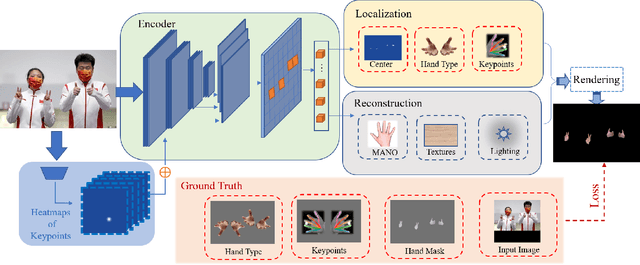

In this paper, we consider the challenging task of simultaneously locating and recovering multiple hands from single 2D image. Previous studies either focus on single hand reconstruction or solve this problem in a multi-stage way. Moreover, the conventional two-stage pipeline firstly detects hand areas, and then estimates 3D hand pose from each cropped patch. To reduce the computational redundancy in preprocessing and feature extraction, we propose a concise but efficient single-stage pipeline. Specifically, we design a multi-head auto-encoder structure for multi-hand reconstruction, where each head network shares the same feature map and outputs the hand center, pose and texture, respectively. Besides, we adopt a weakly-supervised scheme to alleviate the burden of expensive 3D real-world data annotations. To this end, we propose a series of losses optimized by a stage-wise training scheme, where a multi-hand dataset with 2D annotations is generated based on the publicly available single hand datasets. In order to further improve the accuracy of the weakly supervised model, we adopt several feature consistency constraints in both single and multiple hand settings. Specifically, the keypoints of each hand estimated from local features should be consistent with the re-projected points predicted from global features. Extensive experiments on public benchmarks including FreiHAND, HO3D, InterHand2.6M and RHD demonstrate that our method outperforms the state-of-the-art model-based methods in both weakly-supervised and fully-supervised manners.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge