EILearn: Learning Incrementally Using Previous Knowledge Obtained From an Ensemble of Classifiers

Paper and Code

Feb 08, 2019

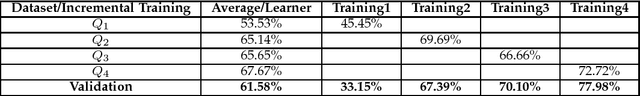

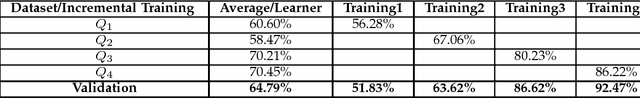

We propose an algorithm for incremental learning of classifiers. The method enables an ensemble of classifiers to learn incrementally by accommodating new training data, and it uses an effective mechanism to address the stability-plasticity dilemma. By convention, incremental learning uses only the knowledge acquired in previous phases, not the previously seen data. We follow this convention by retaining the previously acquired knowledge that is still relevant and using it together with the current data. The performance of each classifier is monitored so that poorly performing classifiers can be eliminated in subsequent phases. Experimental results show that the proposed approach outperforms existing incremental learning approaches.
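The general idea in the abstract (keep earlier classifiers rather than earlier data, monitor each classifier, and drop the poorly performing ones) can be illustrated with a minimal sketch. This is not the EILearn algorithm itself, whose details the abstract does not give. The class names `NearestCentroid` and `IncrementalEnsemble`, the `min_accuracy` threshold, the rule of scoring members on the current batch, and the accuracy-weighted vote are all assumptions made for illustration.

```python
from collections import defaultdict


class NearestCentroid:
    """Tiny base learner (an assumption): predicts the class with the closest mean."""

    def fit(self, X, y):
        sums, counts = {}, defaultdict(int)
        for row, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(row))
            for i, value in enumerate(row):
                acc[i] += value
            counts[label] += 1
        self.centroids = {c: [s / counts[c] for s in sums[c]] for c in sums}
        return self

    def predict_one(self, row):
        def squared_distance(label):
            return sum((a - b) ** 2 for a, b in zip(row, self.centroids[label]))
        return min(self.centroids, key=squared_distance)


def accuracy(model, X, y):
    """Fraction of rows in (X, y) that the model labels correctly."""
    return sum(model.predict_one(r) == t for r, t in zip(X, y)) / len(y)


class IncrementalEnsemble:
    """Hypothetical ensemble that learns phase by phase without storing old data."""

    def __init__(self, min_accuracy=0.5):
        self.min_accuracy = min_accuracy  # assumed pruning threshold
        self.members = []                 # list of (model, weight) pairs

    def learn_phase(self, X, y):
        # Monitor: re-score every retained classifier on the current batch
        # and keep only those that still perform acceptably.
        kept = []
        for model, _ in self.members:
            score = accuracy(model, X, y)
            if score >= self.min_accuracy:
                kept.append((model, score))
        # Plasticity: add one new classifier trained on the current batch only.
        new_model = NearestCentroid().fit(X, y)
        kept.append((new_model, accuracy(new_model, X, y)))
        self.members = kept

    def predict_one(self, row):
        # Accuracy-weighted vote over the retained classifiers.
        votes = defaultdict(float)
        for model, weight in self.members:
            votes[model.predict_one(row)] += weight
        return max(votes, key=votes.get)


ensemble = IncrementalEnsemble(min_accuracy=0.5)
ensemble.learn_phase([[0, 0], [1, 0], [5, 5], [6, 5]], [0, 0, 1, 1])
ensemble.learn_phase([[0, 1], [1, 1], [5, 6], [6, 6]], [0, 0, 1, 1])
members_after_stable_phase = len(ensemble.members)
# Third phase flips the labels, so the older classifiers no longer fit the data.
ensemble.learn_phase([[0, 0], [1, 1], [5, 5], [6, 6]], [1, 1, 0, 0])
members_after_drift = len(ensemble.members)
```

In this toy run the first two phases agree, so both classifiers are retained; the third phase contradicts them, so they are pruned and only the newly trained classifier remains. Only the models are carried between phases, never the earlier batches, which mirrors the convention described above.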
