Efficient Decomposed Learning for Structured Prediction


Jun 18, 2012

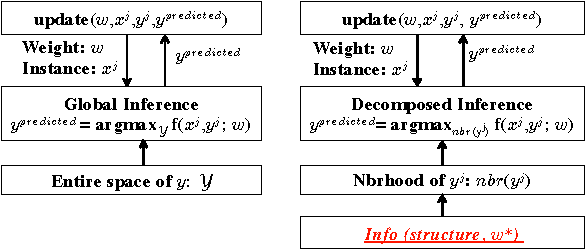

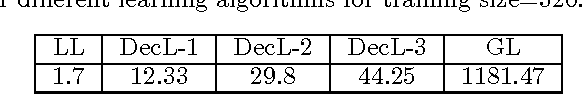

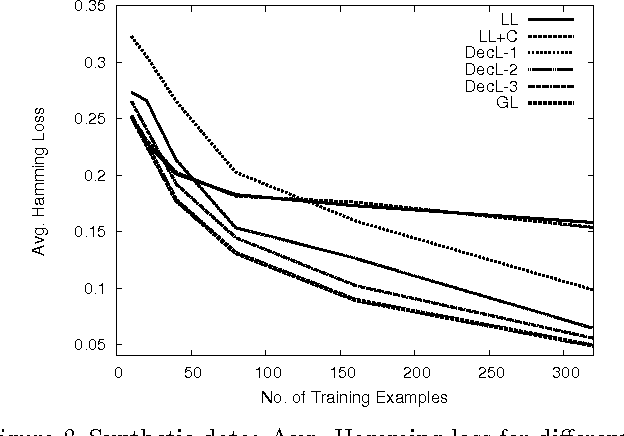

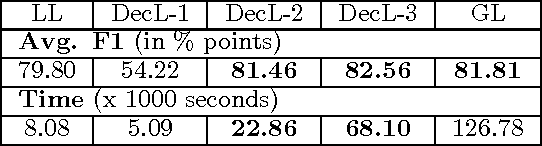

Structured prediction is the cornerstone of several machine learning applications. Unfortunately, in structured prediction settings with expressive inter-variable interactions, learning algorithms that rely on exact inference, e.g. Structural SVM, are often intractable. We present a new approach, Decomposed Learning (DecL), which performs efficient learning by restricting the inference step to a limited part of the structured space. We provide characterizations, based on the structure, target parameters, and gold labels, under which DecL is equivalent to exact learning. We then show that in real-world settings, where our theoretical assumptions may not completely hold, DecL-based algorithms are significantly more efficient than exact learning and just as accurate.
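To make the idea of restricted inference concrete, here is a minimal sketch in Python. It is not the paper's implementation. It assumes that the "limited part" of the structured space is the set of labelings within Hamming distance `k` of the gold labeling, and it swaps in a simple perceptron-style update in place of a Structural SVM solver. The feature map and all function names (`neighborhood`, `features`, `train`, and so on) are hypothetical and chosen for illustration only.

```python
from itertools import combinations, product


def neighborhood(gold, labels, k):
    """Yield each labeling that differs from `gold` in at most k positions.

    Assumption: this Hamming ball stands in for the "limited part of the
    structured space"; the abstract does not specify the restriction.
    """
    seen = set()
    for r in range(k + 1):
        for positions in combinations(range(len(gold)), r):
            for values in product(labels, repeat=r):
                y = list(gold)
                for p, v in zip(positions, values):
                    y[p] = v
                y = tuple(y)
                if y not in seen:
                    seen.add(y)
                    yield y


def features(x, y):
    """Hypothetical feature map: emission and transition counts."""
    f = {}
    for tok, lab in zip(x, y):
        f[("emit", tok, lab)] = f.get(("emit", tok, lab), 0) + 1
    for a, b in zip(y, y[1:]):
        f[("trans", a, b)] = f.get(("trans", a, b), 0) + 1
    return f


def score(w, x, y):
    """Linear score of labeling y for input x under weights w."""
    return sum(w.get(key, 0.0) * v for key, v in features(x, y).items())


def hamming(y, gold):
    """Number of positions where y disagrees with gold."""
    return sum(a != b for a, b in zip(y, gold))


def restricted_argmax(w, x, gold, labels, k):
    """Loss-augmented inference over the neighborhood only, not the full space."""
    return max(
        neighborhood(gold, labels, k),
        key=lambda y: score(w, x, y) + hamming(y, gold),
    )


def train(data, labels, k=1, epochs=5, lr=1.0):
    """Perceptron-style training that only ever searches the restricted space."""
    w = {}
    for _ in range(epochs):
        for x, gold in data:
            gold = tuple(gold)
            y_hat = restricted_argmax(w, x, gold, labels, k)
            if y_hat != gold:
                # Move weights toward the gold features, away from the violator.
                for key, v in features(x, gold).items():
                    w[key] = w.get(key, 0.0) + lr * v
                for key, v in features(x, y_hat).items():
                    w[key] = w.get(key, 0.0) - lr * v
    return w
```

In this sketch, each inference call enumerates a neighborhood whose size grows polynomially in the sequence length for fixed `k`, whereas the full space grows exponentially. Setting `k` to the sequence length recovers a search over every labeling, which corresponds to exact inference for this toy model.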
