Effective Out-of-Distribution Detection in Classifier Based on PEDCC-Loss

Apr 10, 2022

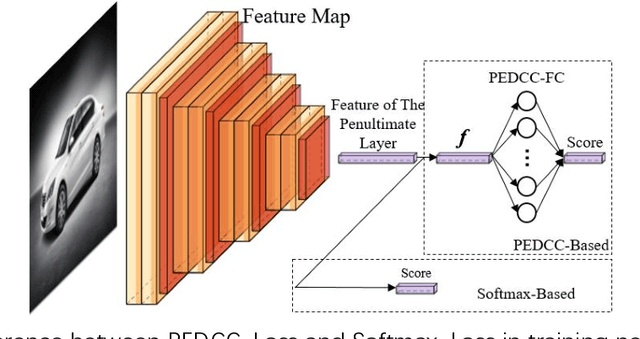

Deep neural networks are prone to overconfidence in open-world settings: a classifier can produce confident but incorrect predictions for out-of-distribution (OOD) samples. Detecting such samples, which lie far from the training distribution, is therefore an urgent and challenging task for the security of artificial intelligence. Many current neural-network-based methods rely on complex processing strategies, such as temperature scaling and input preprocessing, to obtain satisfactory results. In this paper, we propose an effective algorithm for detecting OOD examples using PEDCC-Loss. We mathematically analyze the confidence score output by the PEDCC (Predefined Evenly-Distributed Class Centroids) classifier and then construct a more effective scoring function to distinguish in-distribution (ID) samples from OOD samples. The method requires no preprocessing of the input samples, which reduces the computational burden of the algorithm. Experiments demonstrate that our method can achieve better OOD detection performance.
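To make the idea of a confidence score over predefined class centroids concrete, here is a minimal sketch in plain Python. It is not the scoring function proposed in the paper, which the abstract does not specify. It assumes a generic baseline: the score is the maximum cosine similarity between a feature vector and a set of fixed centroids, and a sample is flagged as OOD when that score falls below a threshold. The names `cosine`, `max_cosine_score`, `is_ood`, and `CENTROIDS`, the orthogonal stand-in centroids, and the threshold value are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def max_cosine_score(feature, centroids):
    """Baseline confidence: similarity to the closest fixed class centroid."""
    return max(cosine(feature, c) for c in centroids)


def is_ood(feature, centroids, threshold):
    """Flag a sample as OOD when its baseline confidence is below the threshold."""
    return max_cosine_score(feature, centroids) < threshold


# Hypothetical stand-in centroids: three orthogonal unit vectors.
# Real PEDCC centroids are generated by a dedicated procedure not shown here.
CENTROIDS = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

if __name__ == "__main__":
    near_class_0 = [0.9, 0.1, 0.0]    # points almost exactly at centroid 0
    between_all = [1.0, 1.0, 1.0]     # equally far from every centroid
    print(is_ood(near_class_0, CENTROIDS, threshold=0.8))
    print(is_ood(between_all, CENTROIDS, threshold=0.8))
```

In this sketch a feature aligned with one centroid scores close to 1 and is treated as in-distribution, while a feature equidistant from all centroids scores lower and is flagged. In practice the threshold would be chosen on held-out data.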
