EEG based Continuous Speech Recognition using Transformers

Paper and Code

Dec 31, 2019

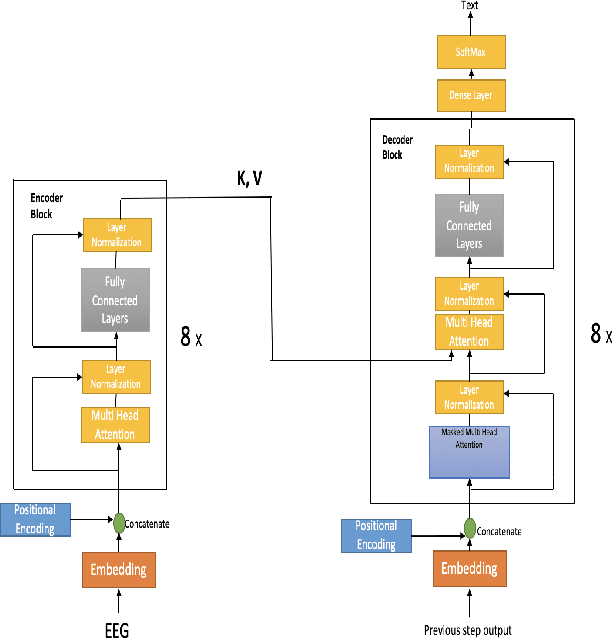

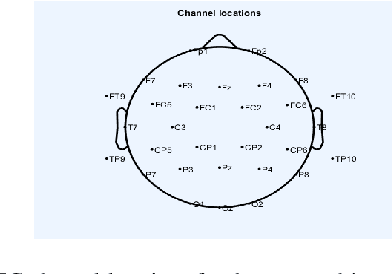

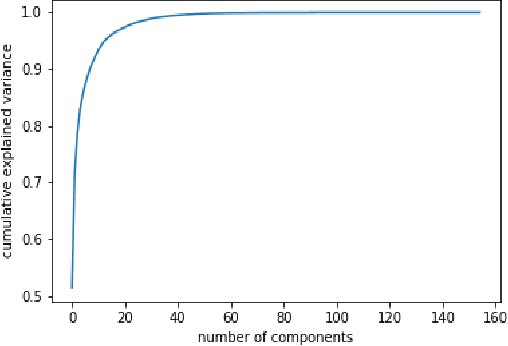

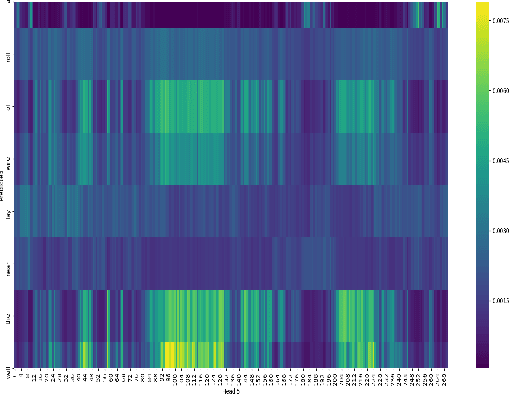

In this paper we investigate continuous speech recognition from electroencephalography (EEG) features using a recently introduced end-to-end transformer-based automatic speech recognition (ASR) model. Our results show that the transformer-based model trains and runs inference faster than recurrent neural network (RNN) based sequence-to-sequence EEG models, but the RNN-based models performed better than the transformer-based model at test time on a limited English vocabulary.

* Work in progress for submission to EUSIPCO 2020
