ECAFormer: Low-light Image Enhancement using Cross Attention

Jun 19, 2024

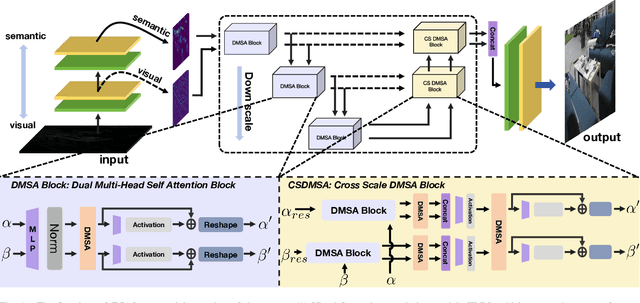

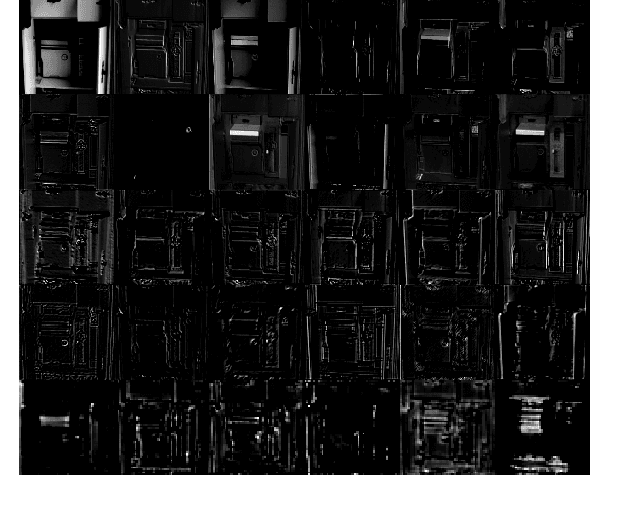

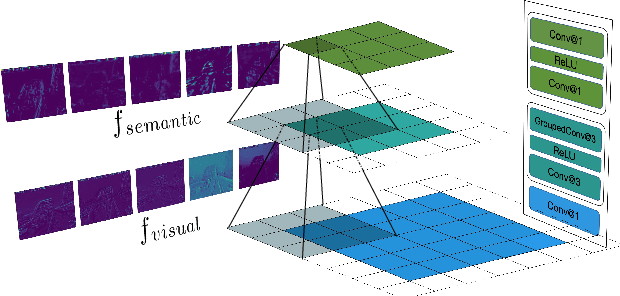

Low-light image enhancement (LLIE) is vital for autonomous driving. Despite this importance, existing LLIE methods often prioritize robust overall brightness adjustment at the expense of detail preservation. To overcome this limitation, we propose the Hierarchical Mutual Enhancement via Cross-Attention transformer (ECAFormer), a novel network that uses Dual Multi-head Self Attention (DMSA) to enhance both visual and semantic features across scales, preserving details during the process. The cross-attention mechanism in ECAFormer not only improves upon traditional enhancement techniques but also balances global brightness adjustment with local detail retention. Extensive experimental validation on well-known low-illumination datasets, including SID and LOL, together with additional tests on dark road scenarios, shows superior performance over existing methods in illumination enhancement and noise reduction, while also optimizing computational complexity and parameter count and improving SSIM and PSNR. Our project is available at https://github.com/ruanyudi/ECAFormer.
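
For readers unfamiliar with the PSNR metric named in the abstract, the following is a minimal sketch of the standard definition, PSNR = 10 · log10(MAX² / MSE). It is not taken from the ECAFormer repository: the function name `psnr`, the flat-list image representation, and the default `max_value` of 255 (8-bit pixels) are assumptions made purely for illustration.

```python
import math


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences.

    Illustrative helper (hypothetical name); images are assumed to be
    flattened into sequences of numeric pixel intensities.
    """
    if len(reference) != len(enhanced) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)

    # Identical images have zero error, so PSNR is unbounded.
    if mse == 0:
        return math.inf

    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [100, 100, 100, 100]
    prediction = [110, 110, 110, 110]
    print(f"PSNR: {psnr(ground_truth, prediction):.2f} dB")
```

Higher values indicate that the enhanced image is closer to the reference. In practice, evaluation code usually operates on array types and may compute the metric per channel or on a luminance channel, so reported numbers depend on those conventions.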
