DynLight: Realize dynamic phase duration with multi-level traffic signal control

Paper and Code

Apr 20, 2022

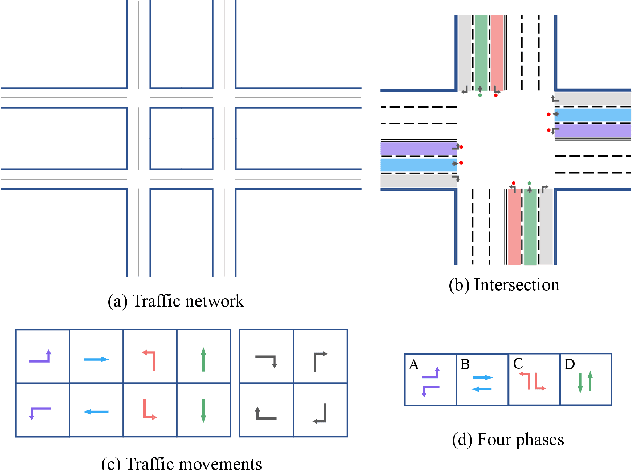

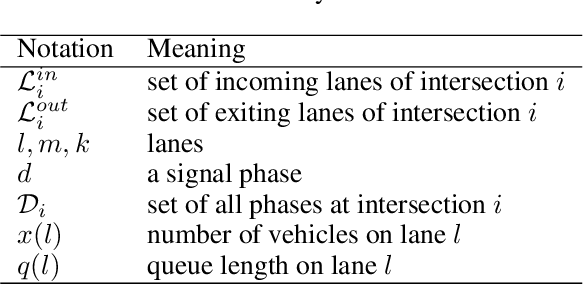

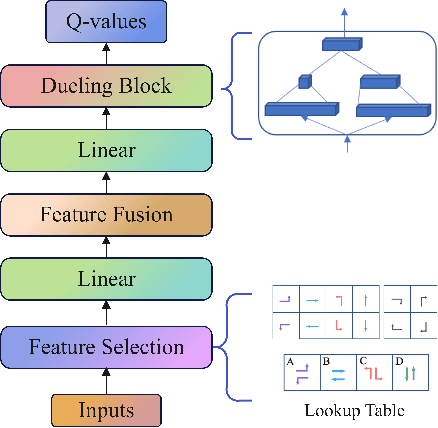

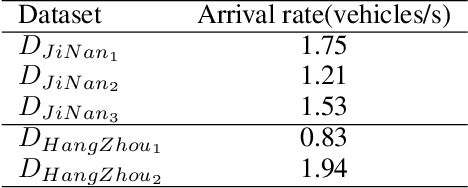

Reinforcement learning (RL) has become an increasingly popular and promising approach to traffic signal control (TSC). However, several challenges remain. First, most RL methods use a fixed action duration and only select the green phase for the next state, which makes the phase duration less dynamic and flexible. Second, the phase sequence produced by RL methods can be arbitrary, which hinders real-world deployment where a cyclical phase structure may be required. Third, average travel time and throughput are not fair metrics for evaluating TSC performance. To address these challenges, we propose DynLight, a multi-level traffic signal control framework that uses an optimization method, Max-QueueLength (M-QL), to determine the phase and a deep Q-network to determine the duration of that phase. Building on DynLight, we further propose DynLight-C, which reuses the well-trained deep Q-network of DynLight and replaces M-QL with a cyclical control policy that actuates a set of phases in a fixed cyclical order, thereby realizing a cyclical phase structure. Comprehensive experiments on multiple real-world datasets demonstrate that DynLight achieves new state-of-the-art performance. Furthermore, the deep Q-network of DynLight learns to determine the phase duration effectively, and DynLight-C demonstrates high performance for deployment.
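The two-level decision described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the phase layout, the lane names, the candidate duration set `DURATIONS`, and the `toy_q_values` function (a hand-written stand-in for the trained deep Q-network) are all assumptions made for illustration. The sketch only shows the control flow: M-QL picks the phase with the largest total queue, a Q-function scores candidate durations for that phase, and a DynLight-C-style policy swaps the phase selector for a fixed cyclical order.

```python
from typing import Callable, Dict, List

# Hypothetical four-phase layout: each phase serves a set of incoming lanes.
PHASES: Dict[str, List[str]] = {
    "NS_through": ["N_t", "S_t"],
    "NS_left": ["N_l", "S_l"],
    "EW_through": ["E_t", "W_t"],
    "EW_left": ["E_l", "W_l"],
}

# Assumed discrete action set for the duration level, in seconds.
DURATIONS: List[int] = [10, 15, 20, 25, 30]

QFunction = Callable[[str, Dict[str, int]], List[float]]


def phase_queue(phase: str, queues: Dict[str, int]) -> int:
    """Total number of queued vehicles on the lanes served by a phase."""
    return sum(queues.get(lane, 0) for lane in PHASES[phase])


def select_phase_mql(queues: Dict[str, int]) -> str:
    """Upper level (M-QL style): choose the phase with the largest queue."""
    return max(PHASES, key=lambda p: phase_queue(p, queues))


def select_duration(phase: str, queues: Dict[str, int], q_values: QFunction) -> int:
    """Lower level: choose the duration whose Q-value is highest."""
    scores = q_values(phase, queues)
    best = max(range(len(DURATIONS)), key=lambda i: scores[i])
    return DURATIONS[best]


def toy_q_values(phase: str, queues: Dict[str, int]) -> List[float]:
    """Stand-in for a trained DQN (assumption): prefers roughly
    two seconds of green per queued vehicle."""
    target = 2 * phase_queue(phase, queues)
    return [-abs(d - target) for d in DURATIONS]


def next_cyclical_phase(previous: str) -> str:
    """DynLight-C style phase selection: fixed cyclical order."""
    order = list(PHASES)
    return order[(order.index(previous) + 1) % len(order)]


if __name__ == "__main__":
    queues = {"N_t": 5, "S_t": 4, "E_t": 2, "W_t": 1}
    phase = select_phase_mql(queues)
    print(phase, select_duration(phase, queues, toy_q_values))
    print(next_cyclical_phase("EW_left"))
```

In a real system the `toy_q_values` stand-in would be replaced by a network trained on simulator rollouts. The cyclical variant reuses `select_duration` unchanged, which mirrors how DynLight-C keeps the duration network and changes only the phase selector.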
