DynamicBEV: Leveraging Dynamic Queries and Temporal Context for 3D Object Detection

Paper and Code

Oct 07, 2023

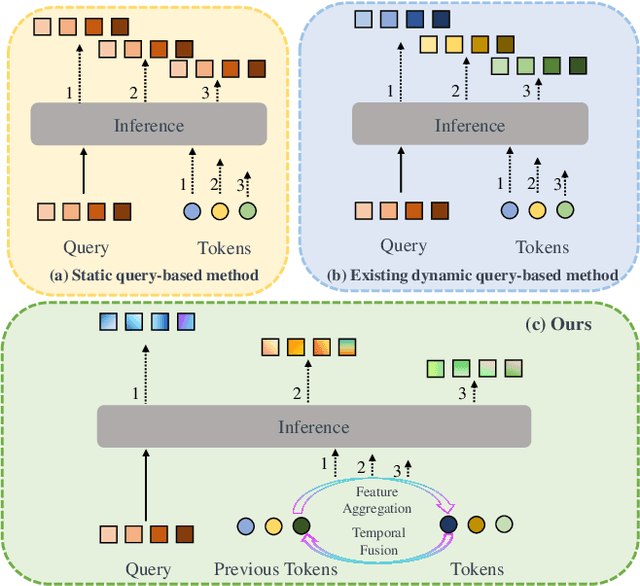

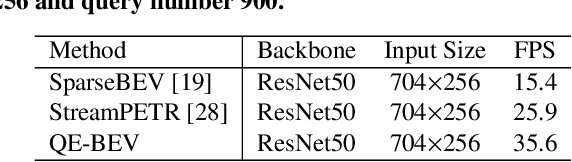

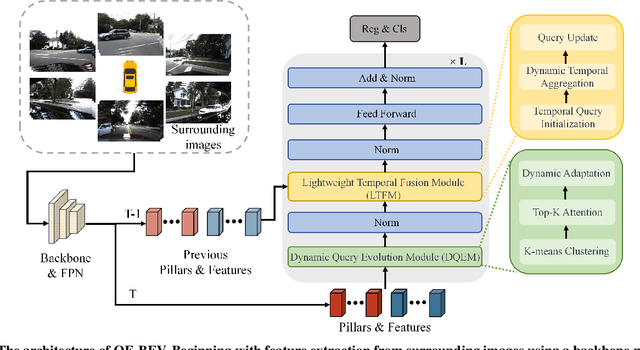

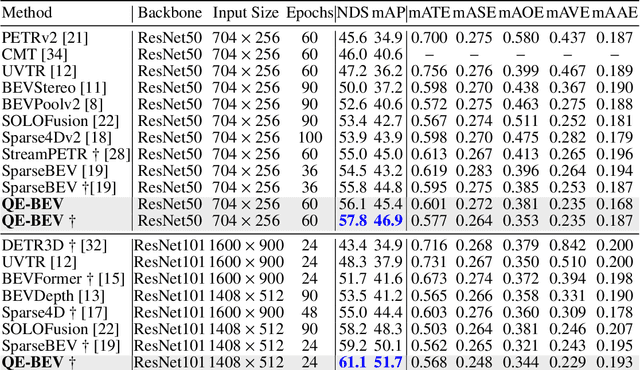

3D object detection is crucial for applications such as autonomous driving and robotics. While query-based 3D object detection for BEV (Bird's Eye View) images has advanced significantly, most existing methods follow a static-query paradigm. Such a paradigm cannot adapt to complex spatial-temporal relationships in the scene. To solve this problem, we introduce DynamicBEV, a novel approach that employs dynamic queries for BEV-based 3D object detection. In contrast to static queries, the proposed dynamic queries use K-means clustering and Top-K Attention to aggregate information more effectively from both local and distant features, enabling DynamicBEV to adapt iteratively to complex scenes. To further boost efficiency, DynamicBEV incorporates a Lightweight Temporal Fusion Module (LTFM), designed to integrate temporal context efficiently with a significant reduction in computation. Additionally, a custom-designed Diversity Loss ensures a balanced feature representation across scenarios. Extensive experiments on the nuScenes dataset validate the effectiveness of DynamicBEV, establishing a new state-of-the-art and heralding a paradigm-level breakthrough in query-based BEV object detection.
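The abstract does not spell out how Top-K Attention is computed, so the following is only a minimal sketch of the generic idea, assuming scaled dot-product scores and a single query: the query attends to its k highest-scoring keys and ignores the rest. The function name `top_k_attention` and its arguments are hypothetical and are not taken from the DynamicBEV code.

```python
import math


def top_k_attention(query, keys, values, k):
    """Attend from one query to only its k highest-scoring keys.

    Illustrative sketch only; not the DynamicBEV implementation.
    """
    d = len(query)
    # Scaled dot-product score between the query and every key.
    scores = [
        sum(q * x for q, x in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    # Indices of the k largest scores; all other keys are dropped.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores only (shifted by the max for stability).
    m = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the selected value vectors.
    dim = len(values[0])
    out = [sum(w * values[i][j] for w, i in zip(weights, top)) for j in range(dim)]
    return out, top


# Four feature vectors; only the two most similar to the query contribute.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.9, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.0, 2.0]]
out, top = top_k_attention([1.0, 0.0], keys, values, k=2)
print(top)  # indices of the selected keys
```

Restricting the softmax to the selected keys keeps dissimilar features (here the key pointing away from the query) from contributing at all, which is the general motivation for top-k variants of attention.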
