Dynamic Motion Modelling for Legged Robots

May 27, 2010

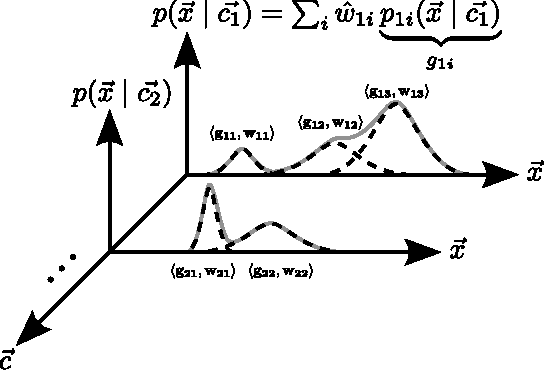

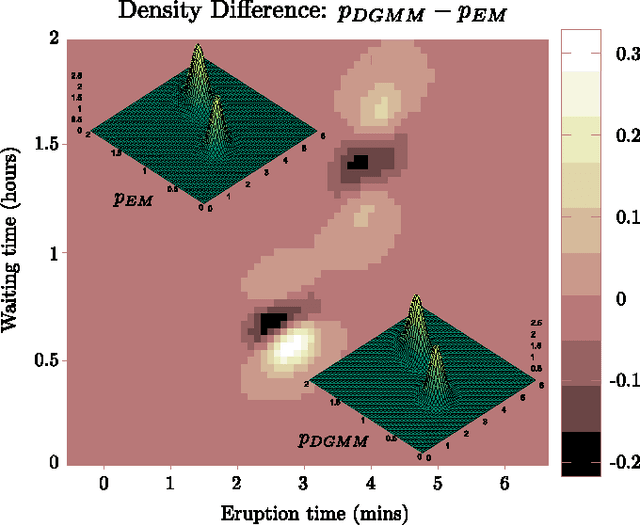

An accurate motion model is an important component of modern robotic systems, but building such a model for a complex system often requires an appreciable amount of manual effort. In this paper, we present a motion model representation, the Dynamic Gaussian Mixture Model (DGMM), that alleviates the need to manually design the form of a motion model and provides a direct means of incorporating auxiliary sensory data into the model. This representation and its accompanying algorithms are validated experimentally using a kinematically complex 8-legged robot, as well as a standard benchmark dataset. The presented method not only learns the robot's motion model, but also improves the model's accuracy by incorporating information about the terrain surrounding the robot.
