Dynamic Energy Dispatch in Isolated Microgrids Based on Deep Reinforcement Learning

Feb 07, 2020

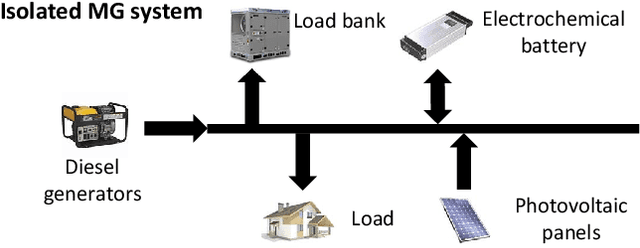

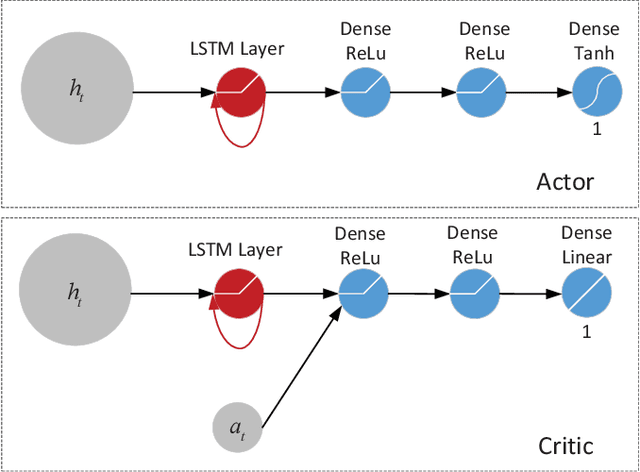

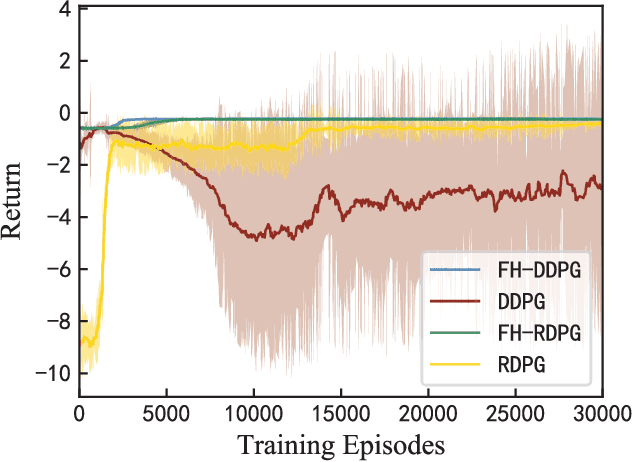

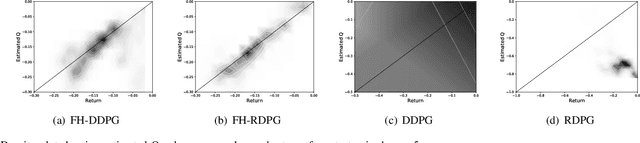

This paper focuses on deep reinforcement learning (DRL)-based energy dispatch for isolated microgrids (MGs) with diesel generators (DGs), photovoltaic (PV) panels, and a battery. A finite-horizon Partially Observable Markov Decision Process (POMDP) model is formulated and solved by learning from historical data to capture the uncertainty in future electricity consumption and renewable power generation. To address the instability of DRL algorithms and the unique characteristics of finite-horizon models, two novel DRL algorithms, FH-DDPG and FH-RDPG, are proposed to derive energy dispatch policies with and without fully observable state information, respectively. A case study using real isolated microgrid data is performed, in which the performance of the proposed algorithms is compared with that of the myopic algorithm and other baseline DRL algorithms. Moreover, the impact of uncertainties on MG performance is decoupled into two levels, and each level is evaluated separately.
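To make the dispatch setting concrete, the following is a minimal Python sketch of a finite-horizon episode for a toy isolated microgrid with one DG, PV, and a battery. It is not the paper's model or its algorithms. The class `ToyMicrogrid`, the functions `myopic_dispatch` and `run_episode`, the one-hour time step, and every numeric parameter are hypothetical choices made for illustration, and the greedy rule shown is only one plausible reading of a myopic baseline.

```python
from dataclasses import dataclass


@dataclass
class ToyMicrogrid:
    # All values are illustrative assumptions, not taken from the paper.
    soc_kwh: float = 50.0           # battery state of charge
    capacity_kwh: float = 100.0     # battery capacity
    max_rate_kw: float = 30.0       # charge/discharge limit per 1-hour step
    dg_max_kw: float = 80.0         # diesel generator output limit
    fuel_cost: float = 0.3          # cost per kWh of diesel generation
    shortfall_penalty: float = 5.0  # cost per kWh of unserved load

    def step(self, load_kw, pv_kw, dg_kw):
        """Advance one 1-hour step and return the cost incurred."""
        dg_kw = min(max(dg_kw, 0.0), self.dg_max_kw)
        surplus = dg_kw + pv_kw - load_kw  # >0 charges, <0 discharges
        unserved = 0.0
        if surplus >= 0:
            room = self.capacity_kwh - self.soc_kwh
            self.soc_kwh += min(surplus, self.max_rate_kw, room)
        else:
            discharge = min(-surplus, self.max_rate_kw, self.soc_kwh)
            self.soc_kwh -= discharge
            unserved = -surplus - discharge
        return self.fuel_cost * dg_kw + self.shortfall_penalty * unserved


def myopic_dispatch(mg, load_kw, pv_kw):
    """Greedy one-step rule: drain the battery first, then run the DG."""
    deficit = max(load_kw - pv_kw, 0.0)
    from_battery = min(deficit, mg.max_rate_kw, mg.soc_kwh)
    return min(deficit - from_battery, mg.dg_max_kw)


def run_episode(policy, loads, pvs):
    """Total cost of one finite-horizon episode under the given policy."""
    mg = ToyMicrogrid()
    return sum(mg.step(l, p, policy(mg, l, p)) for l, p in zip(loads, pvs))


total_cost = run_episode(myopic_dispatch, [40.0, 60.0, 90.0], [50.0, 10.0, 0.0])
```

A learned policy would replace `myopic_dispatch` with a function of the observed state, and, because the horizon is finite, its best action may depend on how many steps remain in the episode. The greedy rule ignores that, which is the kind of gap a finite-horizon DRL method aims to close.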
