Dynamic Control of Explore/Exploit Trade-Off In Bayesian Optimization

Paper and Code

Jul 03, 2018

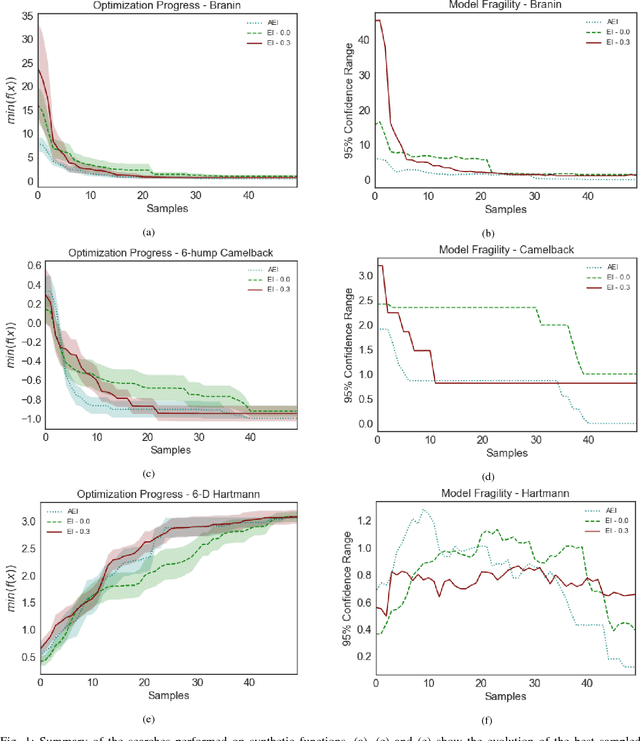

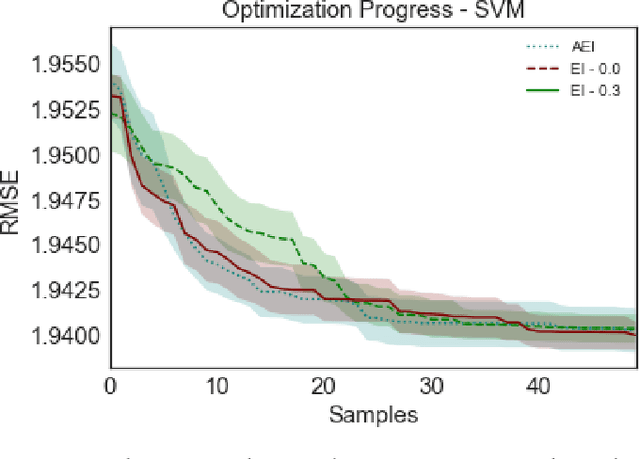

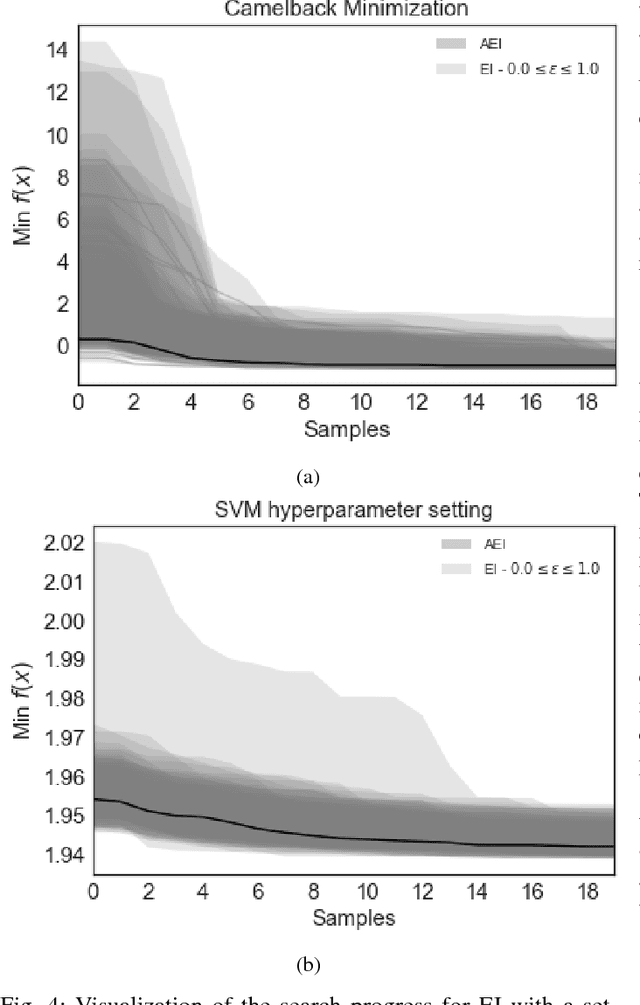

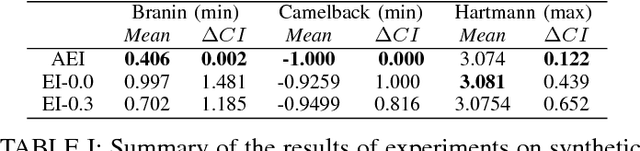

Bayesian optimization offers the possibility of optimizing black-box operations not accessible through traditional techniques. The success of Bayesian optimization methods such as Expected Improvement (EI) are significantly affected by the degree of trade-off between exploration and exploitation. Too much exploration can lead to inefficient optimization protocols, whilst too much exploitation leaves the protocol open to strong initial biases, and a high chance of getting stuck in a local minimum. Typically, a constant margin is used to control this trade-off, which results in yet another hyper-parameter to be optimized. We propose contextual improvement as a simple, yet effective heuristic to counter this - achieving a one-shot optimization strategy. Our proposed heuristic can be swiftly calculated and improves both the speed and robustness of discovery of optimal solutions. We demonstrate its effectiveness on both synthetic and real world problems and explore the unaccounted for uncertainty in the pre-determination of search hyperparameters controlling explore-exploit trade-off.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge