Dyadic Movement Synchrony Estimation Under Privacy-preserving Conditions

Paper and Code

Aug 01, 2022

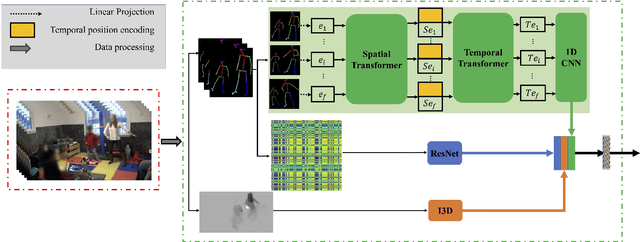

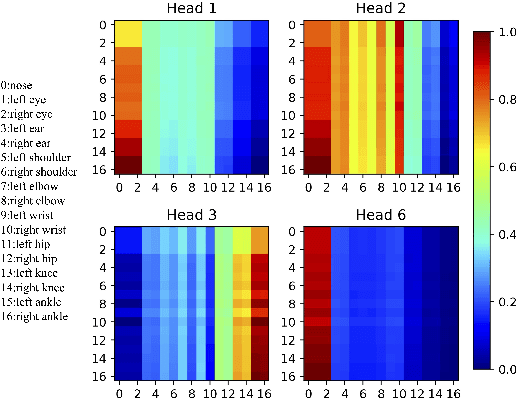

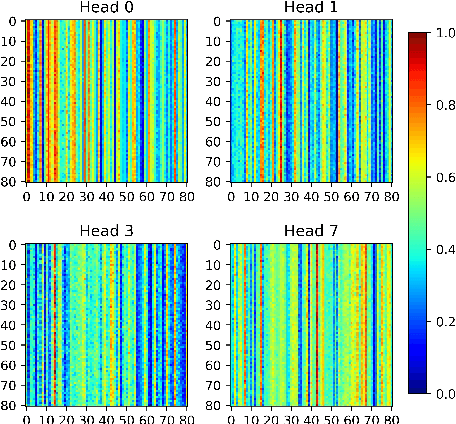

Movement synchrony refers to the dynamic temporal connection between the motions of interacting people. The applications of movement synchrony are wide and broad. For example, as a measure of coordination between teammates, synchrony scores are often reported in sports. The autism community also identifies movement synchrony as a key indicator of children's social and developmental achievements. In general, raw video recordings are often used for movement synchrony estimation, with the drawback that they may reveal people's identities. Furthermore, such privacy concern also hinders data sharing, one major roadblock to a fair comparison between different approaches in autism research. To address the issue, this paper proposes an ensemble method for movement synchrony estimation, one of the first deep-learning-based methods for automatic movement synchrony assessment under privacy-preserving conditions. Our method relies entirely on publicly shareable, identity-agnostic secondary data, such as skeleton data and optical flow. We validate our method on two datasets: (1) PT13 dataset collected from autism therapy interventions and (2) TASD-2 dataset collected from synchronized diving competitions. In this context, our method outperforms its counterpart approaches, both deep neural networks and alternatives.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge