Duality-Based Stochastic Policy Optimization for Estimation with Unknown Noise Covariances


Oct 26, 2022

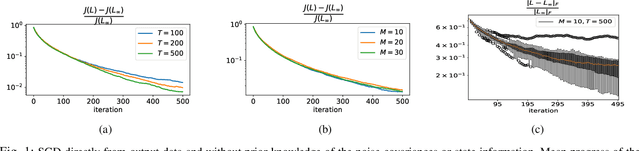

The duality of control and estimation allows recent advances in data-guided control to be mapped to the estimation setup. This paper formalizes and utilizes such a mapping by considering the problem of learning the optimal (steady-state) Kalman gain when the process and measurement noise statistics are unknown. Specifically, building on the duality between synthesizing optimal control and estimation gains, the filter design problem is formalized as direct policy learning; subsequently, a Stochastic Gradient Descent (SGD) approach is adopted to learn the optimal filter gain. In this direction, control and estimation duality is also used to extend existing theoretical results on direct policy updates for the Linear Quadratic Regulator (LQR) to establish convergence of the proposed algorithm, while addressing subtle differences between the two synthesis problems. The results are illustrated via several numerical examples.
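To make the idea of learning a filter gain by SGD concrete, the following is a minimal sketch on a scalar system, not the paper's algorithm. It assumes a known model x_{t+1} = a x_t + w_t, y_t = c x_t + v_t, a predictor-form filter x̂_{t+1} = a x̂_t + L (y_t - c x̂_t), and a loss equal to the squared output prediction error at the end of a finite trajectory. The function names, step size, batch size, and horizon are all illustrative assumptions. The noise variances `q` and `r` are used only to simulate data and to compute a Riccati reference gain for comparison; the gradient update itself never reads them.

```python
import random


def riccati_gain(a, c, q, r, iters=1000):
    """Reference steady-state predictor gain via the scalar Riccati recursion.

    Uses q and r, so it is only a ground truth for comparison.
    """
    p = q
    for _ in range(iters):
        p = a * a * p - (a * c * p) ** 2 / (c * c * p + r) + q
    return a * c * p / (c * c * p + r)


def sample_gradient(L, a, c, q, r, horizon, rng):
    """Simulate one trajectory and return d/dL of (y_T - c * xhat_T)^2.

    q and r drive the simulator only; the gradient is built from the
    measurements y_t and the known model parameters a and c.
    """
    x = xhat = g = 0.0  # g tracks the sensitivity d(xhat)/dL
    for _ in range(horizon):
        y = c * x + rng.gauss(0.0, r ** 0.5)
        e = y - c * xhat                # output prediction error at time t
        g = (a - L * c) * g + e         # sensitivity recursion for xhat_{t+1}
        xhat = a * xhat + L * e         # predictor-form filter update
        x = a * x + rng.gauss(0.0, q ** 0.5)
    y = c * x + rng.gauss(0.0, r ** 0.5)
    e = y - c * xhat
    return -2.0 * c * e * g


def learn_gain(a, c, q, r, L0=0.0, step=0.005, iters=600,
               batch=20, horizon=30, seed=0):
    """Mini-batch SGD on the filter gain L (hyperparameters are assumptions)."""
    rng = random.Random(seed)
    L = L0
    for _ in range(iters):
        grad = sum(sample_gradient(L, a, c, q, r, horizon, rng)
                   for _ in range(batch)) / batch
        L -= step * grad
    return L


if __name__ == "__main__":
    a, c, q, r = 0.9, 1.0, 1.0, 1.0
    print("learned gain:  ", learn_gain(a, c, q, r))
    print("Riccati gain:  ", riccati_gain(a, c, q, r))
```

In this sketch the initial gain `L0 = 0` is stabilizing because `|a| < 1`; for an unstable `a`, a stabilizing initial gain (one with `|a - L0 * c| < 1`) would be needed, and the fixed step size here carries no convergence guarantee of the kind the paper establishes.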
