Down-Sampling coupled to Elastic Kernel Machines for Efficient Recognition of Isolated Gestures

Sep 17, 2014

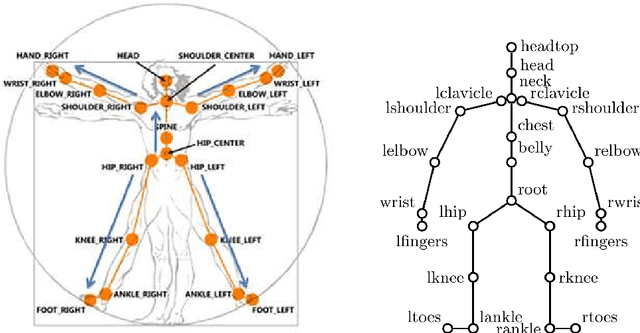

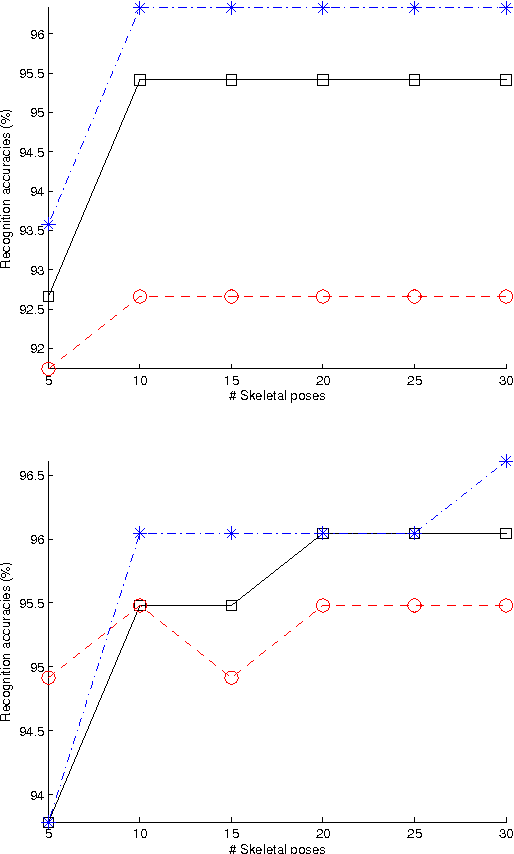

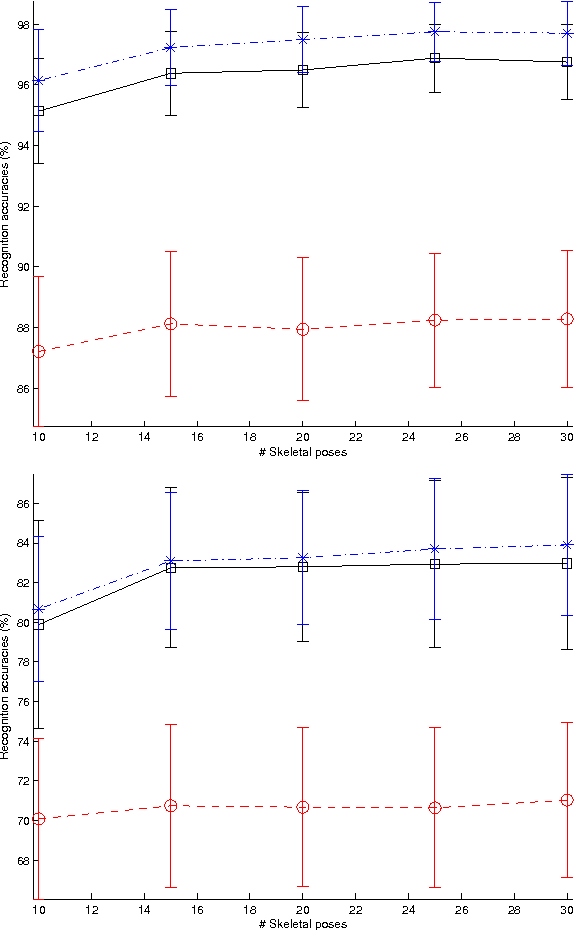

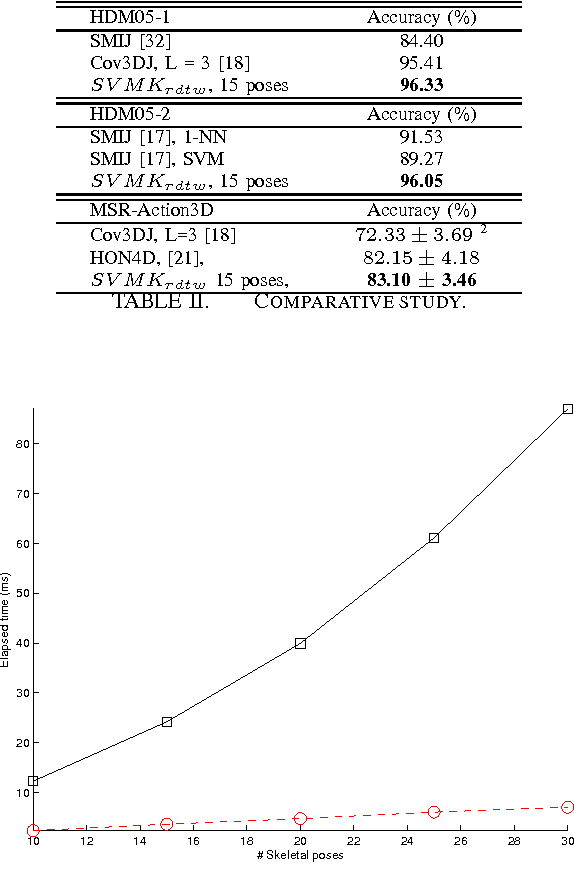

In gestural action recognition, many studies have focused on dimensionality reduction along the spatial axis, both to reduce the variability of gestural sequences expressed in the reduced space and to lower the computational complexity of processing them. Notably, very few of these methods explicitly address dimensionality reduction along the time axis. This is nevertheless a major issue when using elastic distances, whose complexity is quadratic in sequence length. To partially fill this gap, we present an approach based on temporal down-sampling combined with elastic kernel machine learning. We show experimentally, on two data sets that are widely referenced in human gesture recognition and that differ greatly in motion-capture quality, that the number of skeleton frames can be reduced significantly while maintaining a good recognition rate. The method gives satisfactory results, at a level currently reached by state-of-the-art methods on these data sets. The reduction in computational complexity makes the approach suitable for real-time applications.
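To make the complexity argument concrete, the following is a minimal sketch, not the authors' implementation. It assumes uniform temporal down-sampling (evenly spaced frames) and uses classic dynamic time warping (DTW) as a stand-in elastic distance; the abstract does not specify the exact sampling scheme or elastic kernel. The function names `downsample` and `dtw_distance`, the sequence lengths, and the 60-dimensional frames (for example, 20 joints with 3 coordinates each) are hypothetical choices for illustration.

```python
import numpy as np

def downsample(seq, n_frames):
    """Keep n_frames evenly spaced frames from seq (shape: T x D).

    Assumption: uniform sampling; the paper may use a different scheme.
    """
    seq = np.asarray(seq, dtype=float)
    idx = np.linspace(0, len(seq) - 1, num=n_frames).round().astype(int)
    return seq[idx]

def dtw_distance(a, b):
    """Classic DTW with Euclidean frame cost.

    Fills a len(a) x len(b) table, hence the quadratic cost.
    Assumption: DTW stands in for the paper's elastic measure.
    """
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j],      # insertion
                                 cost[i, j - 1],      # deletion
                                 cost[i - 1, j - 1])  # match
    return cost[n, m]

# Hypothetical skeleton sequences: 200 and 180 frames, 60 values per frame.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 60))
y = rng.normal(size=(180, 60))

x_small = downsample(x, 20)
y_small = downsample(y, 20)

# Number of table cells DTW must fill before and after down-sampling.
full_cells = len(x) * len(y)
small_cells = len(x_small) * len(y_small)
print(full_cells, small_cells)
print(dtw_distance(x_small, y_small))
```

In this sketch, reducing both sequences to 20 frames shrinks the DTW table from 200 × 180 cells to 20 × 20 cells. Whether recognition accuracy survives such a reduction is the empirical question the paper addresses; the code only illustrates the cost side.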
